Automatic Differentiation, Explainable AI and #JuliaLang.

Open source person: adrianhill.de/projects

Unfortunately, this convolution is computationally infeasible in high dimensions. Naive Monte Carlo approximation results in the popular SmoothGrad method.
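As a rough illustration of the Monte Carlo idea, here is a minimal sketch. It assumes the convolution in question smooths the gradient with an isotropic Gaussian kernel, so the estimate is just the average of gradients at noise-perturbed inputs. The toy function `f(x) = sum(sin(5 * x_i))`, its analytic gradient `grad_f`, and the parameter names `sigma`, `n_samples` and `seed` are hypothetical choices for this example, not taken from the thread or from any library.

```python
import math
import random


def grad_f(x):
    """Analytic gradient of the toy function f(x) = sum(sin(5 * x_i)) (assumed example)."""
    return [5.0 * math.cos(5.0 * xi) for xi in x]


def smoothgrad(grad, x, sigma=0.1, n_samples=1000, seed=0):
    """Monte Carlo estimate of E[grad(x + eps)] with eps ~ N(0, sigma^2 I)."""
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n_samples):
        # Perturb every input dimension with independent Gaussian noise.
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        g = grad(noisy)
        total = [t + gi for t, gi in zip(total, g)]
    # Average the sampled gradients.
    return [t / n_samples for t in total]


if __name__ == "__main__":
    x = [0.0, 0.3]
    print("plain gradient:   ", grad_f(x))
    print("smoothed gradient:", smoothgrad(grad_f, x))
```

Each sample costs one gradient evaluation, so the estimator scales with the number of samples rather than with the input dimension. A real network would supply `grad` through automatic differentiation instead of a hand-written formula.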

Unfortunately, gradients of deep neural networks resemble white noise, rendering them uninformative:

🧵1/6

![Proof of concept for a Typst poster with a two-column layout and the entire code that produces it:

```typst

// Poster Template
#set page("a0", flipped: true,
  columns: 2,
  margin: 3cm,
  background: rect(fill: blue, width: 100%, height: 100%),
)
#set columns(gutter: 2cm)
#set par(spacing: 2cm)
#set text(font: "Helvetica", size: 90pt)
#set rect(width: 100%, inset: 1cm, fill: white)

// Content
#place(top, scope: "parent", float: true, clearance: 2cm,
  rect(height: 8cm)[Title],
)

#rect(height: 1fr)[Panel 1]
#rect(height: 1fr)[Panel 2]
#rect(height: 1fr)[Panel 3]

#colbreak()

#rect(height: 3fr)[Panel 4]
#rect(height: 2fr)[Panel 5]

#place(bottom, scope: "parent", float: true, clearance: 2cm,
  rect(height: 5cm)[Footer],
)

```](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:itr23nhumbwtts2cwfcoj747/bafkreiak5x5jbtq634oinzjb4dwolnjw6xyfxoavdfdk5gqa7lmjojn2ye@jpeg)

github.com/JuliaPlots/U...

info.arxiv.org/help/submit_...

📖: github.com/adrhill/juli...

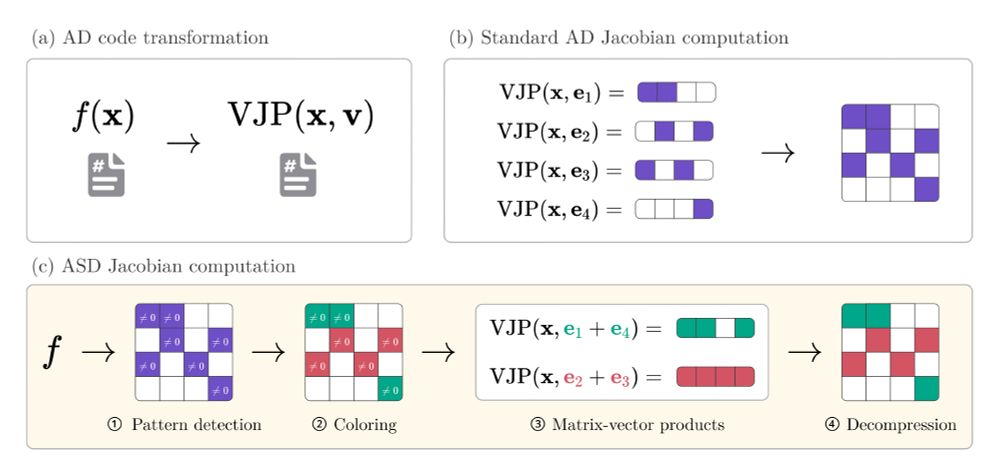

arxiv.org/abs/2501.17737

🧵1/8
