www.theguardian.com/global-devel...

The fact that people attack EVs - and *by consequence, defend oil* - on environmental and moral grounds is, frankly, patently absurd.

- To make it possible to use the similarities of multiple vector pairs to determine where attention must focus, instead of just a single vector pair.

- They add convolutions for keys, queries, and attention heads to allow conditioning on neighboring tokens!
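
To make the two bullets above concrete, here is a toy sketch of the key/query part of the idea. It assumes the convolution is applied to the raw attention scores before the softmax, with a causal mask; the function name `conv_attention_weights`, the kernel layout, and the masking details are my own illustrative choices rather than the paper's code, and the convolution across attention heads is left out.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(row):
    # Masked positions are -inf and get exactly zero weight.
    peak = max(x for x in row if x != -math.inf)
    exps = [0.0 if x == -math.inf else math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def conv_attention_weights(queries, keys, kernel):
    """Causal attention whose scores are mixed over neighbouring (query, key) pairs.

    kernel[a][b] weights the score of the query `a` steps back and the key
    offset by `b - len(kernel[0]) // 2` (hypothetical layout for this sketch).
    """
    n, d = len(queries), len(queries[0])
    # Ordinary scaled dot-product scores; future keys are zeroed so they
    # cannot contribute to the convolution.
    scores = [[dot(queries[i], keys[j]) / math.sqrt(d) if j <= i else 0.0
               for j in range(n)] for i in range(n)]
    half = len(kernel[0]) // 2
    mixed = [[-math.inf] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):  # only past and current keys stay unmasked
            acc = 0.0
            for a, kernel_row in enumerate(kernel):
                for b, weight in enumerate(kernel_row):
                    qi, kj = i - a, j + b - half
                    if qi >= 0 and 0 <= kj < n:
                        acc += weight * scores[qi][kj]
            mixed[i][j] = acc
    return [softmax(row) for row in mixed]


toy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
plain = conv_attention_weights(toy, toy, [[1.0]])  # 1x1 kernel: standard attention
blended = conv_attention_weights(toy, toy, [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]])
```

With a 1x1 kernel this reduces to ordinary causal attention. With the second kernel, each position's score also includes the previous query's score for the same key, so more than one vector pair decides where attention goes.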

www.youtube.com/shorts/x2grE...

transformer-circuits.pub/2025/attribu...

... and while nothing should shock those who follow the research on how LLMs "think", it does such a good job laying it out that it deserves to be highlighted.

Let's dig in (🧵)!

Just as a demonstration, here's Claude answering a logic question I made up on the spot, about fictional concepts, with distraction sentences, and ordering shuffled.

huggingface.co/1bitLLM/bitn...

1. Prompt

2. ChatGPT

3. Bard

Basically, rather than storing images, they learn what concepts look "like". What sort of shapes and colours and textures & relative sizes & the like they tend to have. So they can enhance e.g. how "egglike" the egg is, how "nestlike" the nest.

arxiv.org/pdf/2310.022...

They actually develop a sort of "temporal lobe", with specific clusters of neurons dedicated to spatiotemporal tasks. The paper trained "probes" to predict time/coords from neuron activations to make the maps.
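
For anyone wondering what a "probe" looks like in practice, here is a minimal sketch. It assumes the probe is a plain linear (ridge) regression from activation vectors to a number such as a year or a latitude; the helper names and the tiny synthetic "activations" are made up for illustration and are not the paper's data or code.

```python
def fit_linear_probe(activations, targets, ridge=1e-6):
    """Fit weights so that target ~= activation . w + bias (ridge least squares)."""
    rows = [list(x) + [1.0] for x in activations]  # constant feature for the bias
    k = len(rows[0])
    # Normal equations: (X^T X + ridge * I) w = X^T y
    a = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
          for j in range(k)] for i in range(k)]
    b = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(k)]
    # Gaussian elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, k):
            factor = a[r][col] / a[col][col]
            for c in range(col, k):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution
    w = [0.0] * k
    for i in reversed(range(k)):
        w[i] = (b[i] - sum(a[i][j] * w[j] for j in range(i + 1, k))) / a[i][i]
    return w


def probe_predict(w, activation):
    # zip stops at the activation length, so w[-1] is the bias term.
    return sum(wi * xi for wi, xi in zip(w, activation)) + w[-1]


# Synthetic stand-in for neuron activations; targets follow 2*x0 - x1 + 3.
fake_activations = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
fake_targets = [3.0, 5.0, 2.0, 4.0, 6.0]
weights = fit_linear_probe(fake_activations, fake_targets)
prediction = probe_predict(weights, [3.0, 2.0])
```

If a probe this simple can recover the target from held-out activations, that suggests the information is laid out in an easily readable form inside the model.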

transformer-circuits.pub/2023/monosem...

Splendid Fairy-wren: Yes

#urbanNature #wildOz

🪶🌏🐦

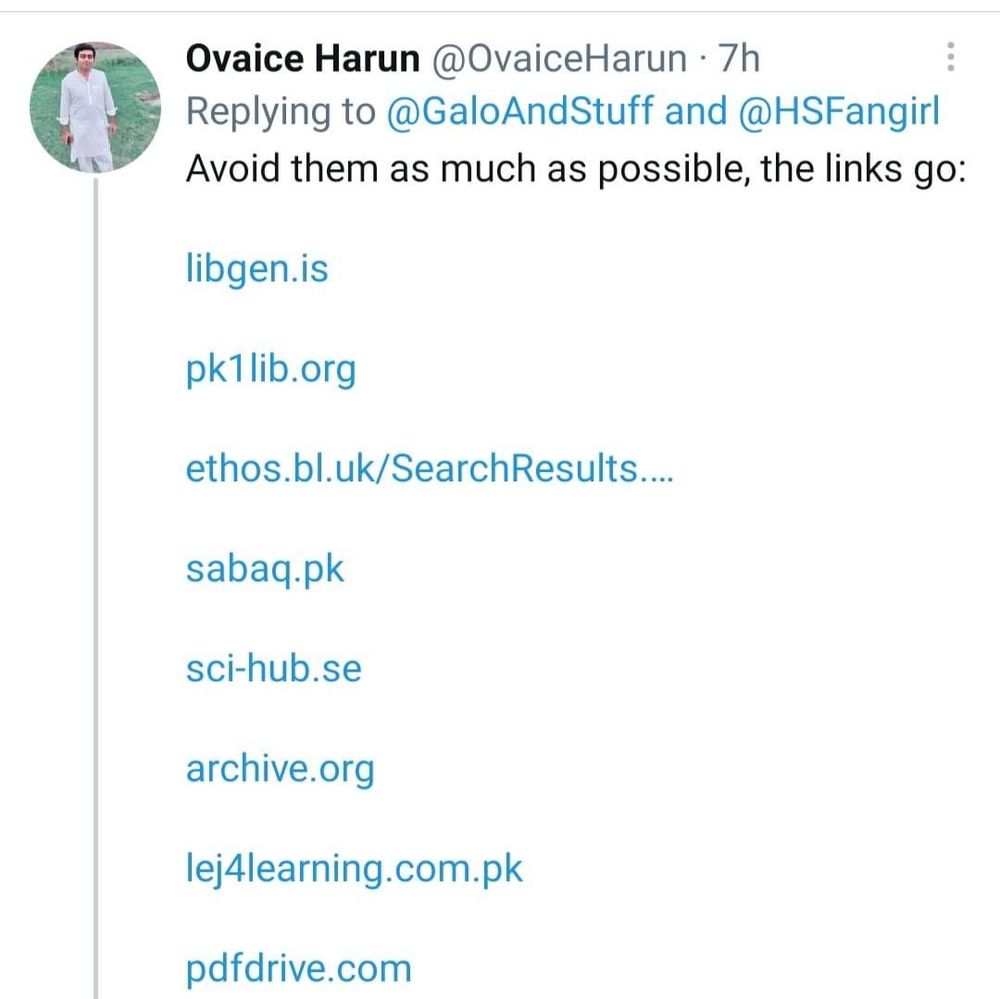

Warn your friends to never do this. Never ever.

The best ppl warn everybody.

The thoughts themselves are never in words. But the brain loves to translate between thoughts and language, and in some people, it becomes an inner monologue.

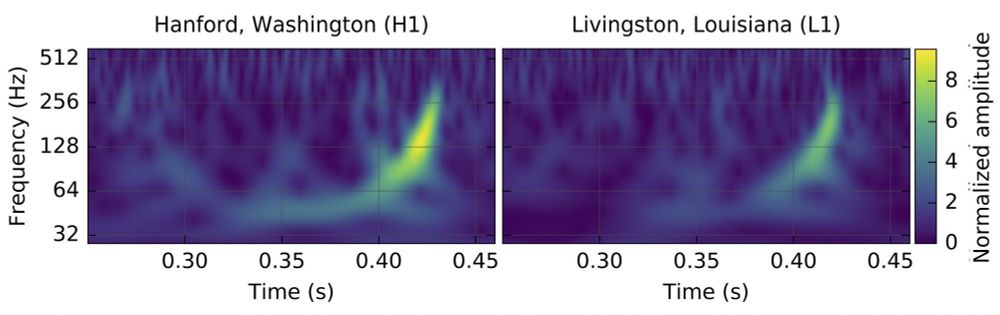

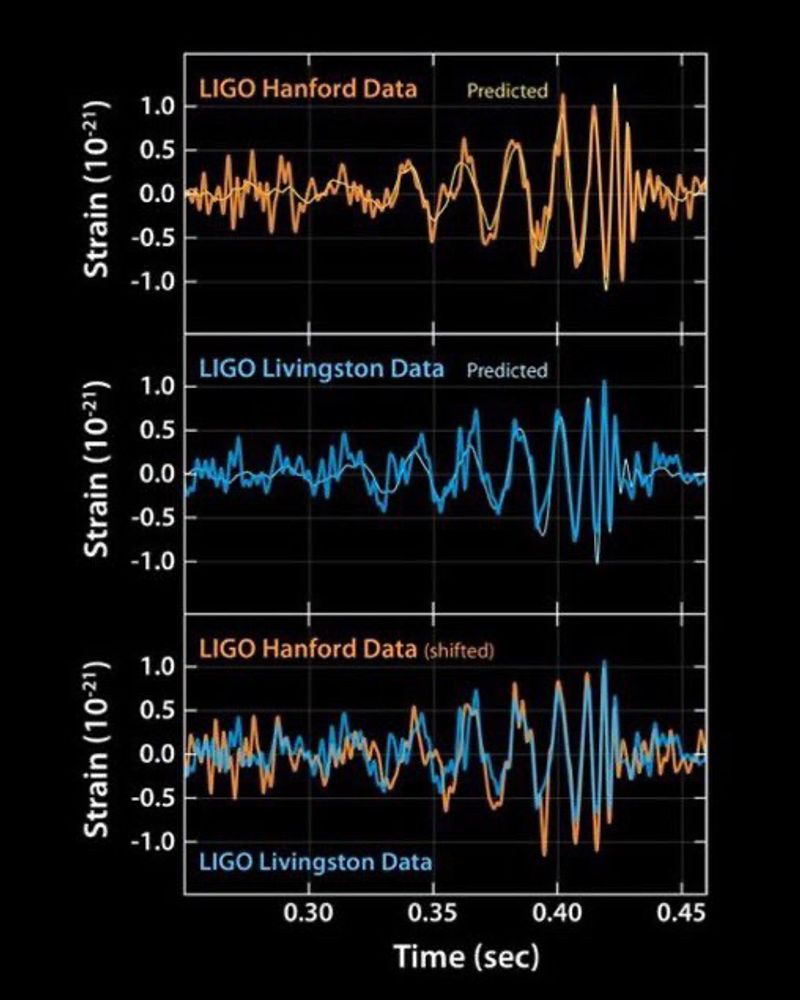

The discovery, announced the following February, marked the beginning of gravitational wave astronomy. 🧪 🔭

Figures: LIGO

Likes are public on Bluesky. We’ve added a Likes tab to your own profile so you can quickly find past posts you’ve liked, but we chose not to show that tab on other users’ profiles.

Those likes can still be accessed by APIs, so other apps may show them.

(ironically it’s because i want to mute the keyword “twitter” on here, among other keywords)
