Alexander Hoyle

@alexanderhoyle.bsky.social

2.3K followers

290 following

200 posts

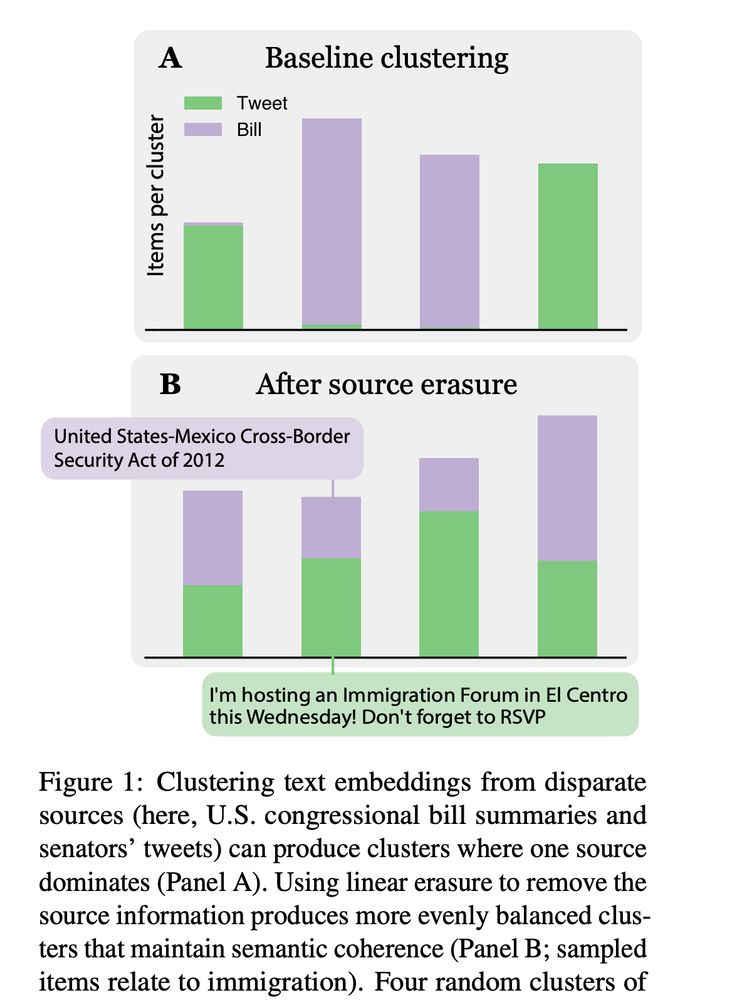

Postdoctoral fellow at ETH AI Center, working on Computational Social Science + NLP. Previously a PhD in CS at UMD, advised by Philip Resnik. Internships at MSR, AI2. he/him

alexanderhoyle.com