Alfredo Canziani

@alfcnz.bsky.social

3.4K followers

94 following

290 posts

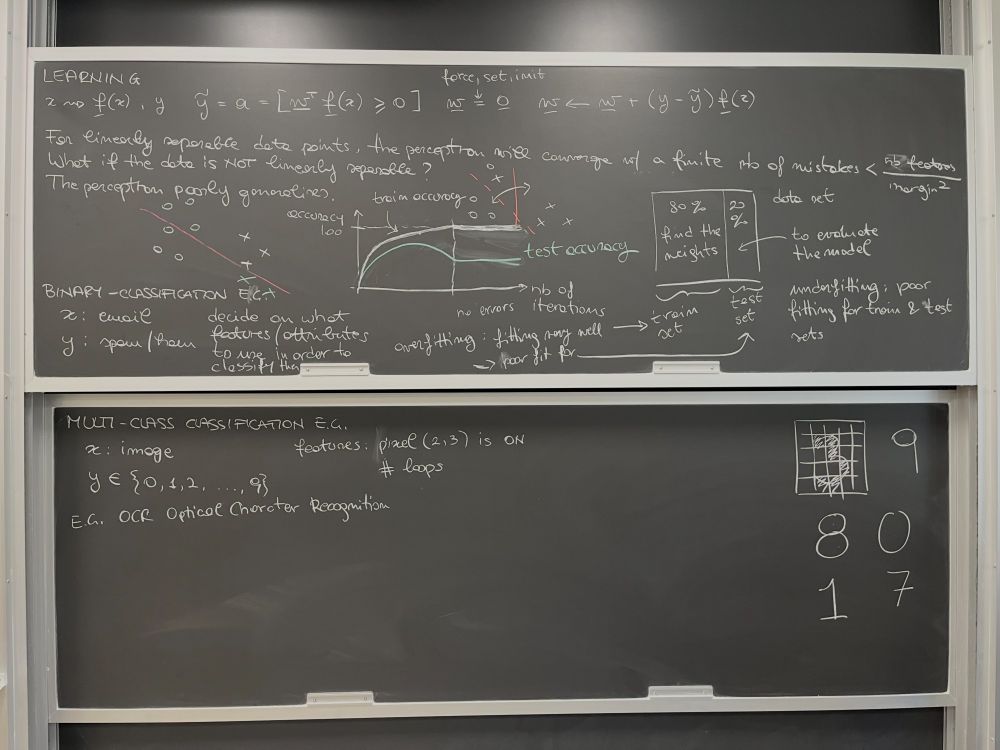

Musician, math lover, cook, dancer, 🏳️‍🌈, and an ass prof of Computer Science at New York University

Alfredo Canziani

@alfcnz.bsky.social

· Jul 2

Alfredo Canziani

@alfcnz.bsky.social

· Jun 30

Reposted by Alfredo Canziani

Alfredo Canziani

@alfcnz.bsky.social

· Jun 10

Alfredo Canziani

@alfcnz.bsky.social

· Jun 7

Alfredo Canziani

@alfcnz.bsky.social

· Apr 10

Alfredo Canziani

@alfcnz.bsky.social

· Apr 9

Reposted by Alfredo Canziani

khipu.ai

@khipu-ai.bsky.social

· Mar 11

Reposted by Alfredo Canziani

Alfredo Canziani

@alfcnz.bsky.social

· Feb 1

Alfredo Canziani

@alfcnz.bsky.social

· Jan 30

Alfredo Canziani

@alfcnz.bsky.social

· Jan 26

Alfredo Canziani

@alfcnz.bsky.social

· Jan 26

Alfredo Canziani

@alfcnz.bsky.social

· Jan 26