Anand Bhattad

@anandbhattad.bsky.social

200 followers · 200 following · 59 posts

Incoming Assistant Professor at Johns Hopkins University | Research Assistant Professor (RAP) at Toyota Technological Institute at Chicago | web: https://anandbhattad.github.io/ | Knowledge in Generative Image Models, Intrinsic Images, Image-based Relighting, Inverse Graphics


Anand Bhattad (@anandbhattad.bsky.social) · Mar 29

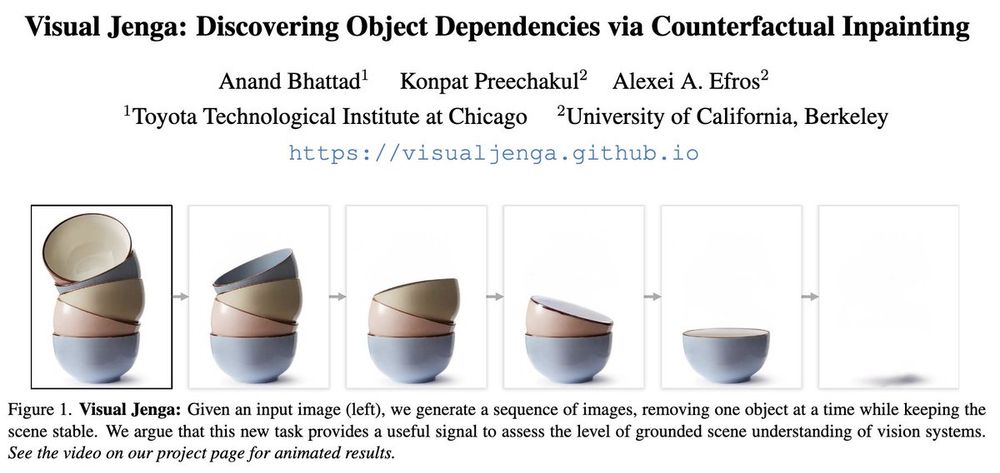

Visual Jenga: Discovering Object Dependencies via Counterfactual Inpainting

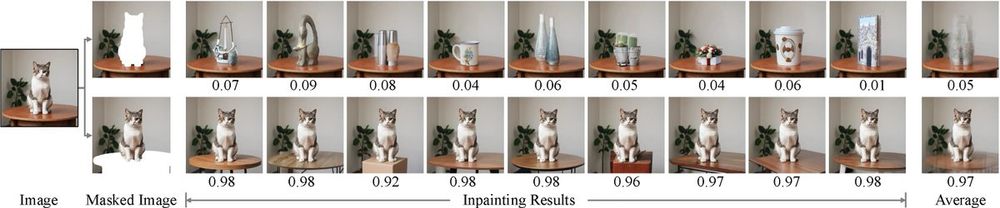

Visual Jenga is a new scene understanding task where the goal is to remove objects one by one from a single image while keeping the rest of the scene stable. We introduce a simple baseline that uses a...

visualjenga.github.io
