Andrew Stellman 👾

@andrewstellman.bsky.social

380 followers

420 following

1.1K posts

Author, developer, team lead, musician.

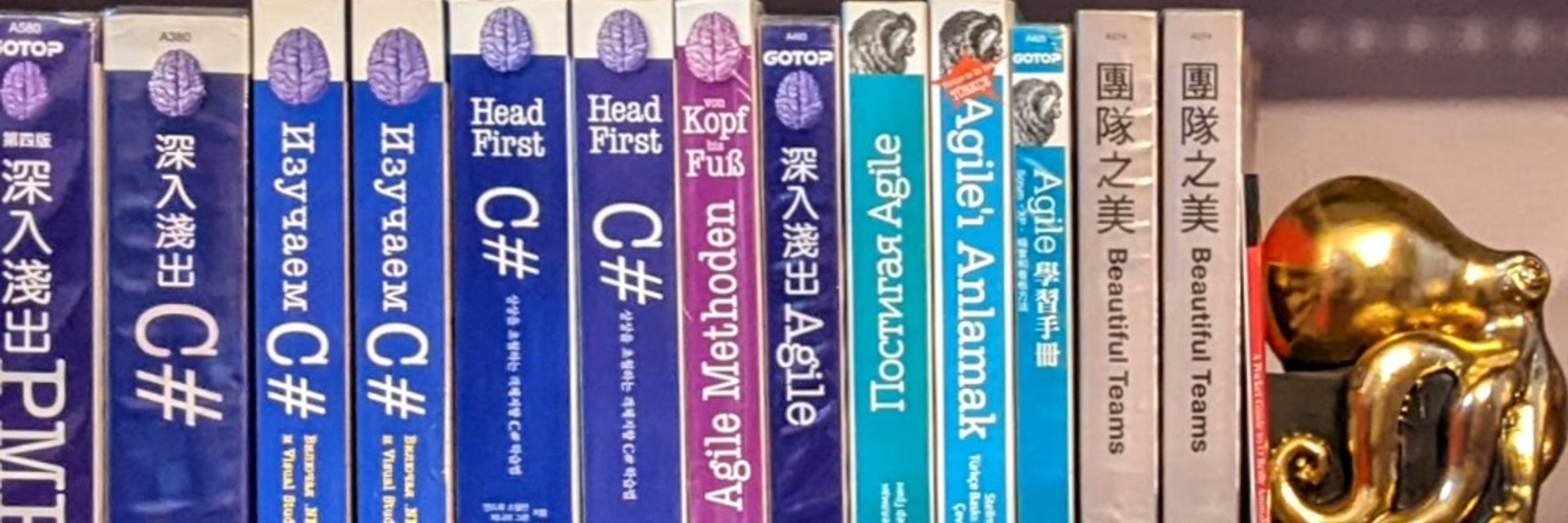

Author of O'Reilly books including Head First C#, Learning Agile, and Head First PMP.

Solving complexity with simplicity.

