T. Anderson Keller

@andykeller.bsky.social

320 followers

310 following

17 posts

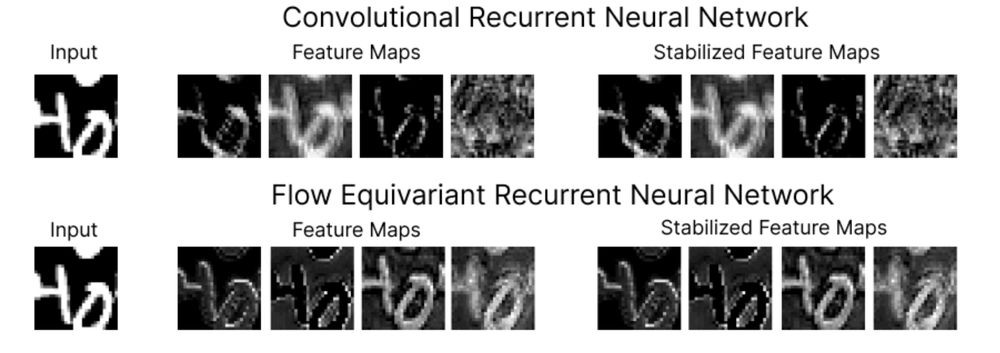

Postdoctoral Fellow at Harvard Kempner Institute. Trying to bring natural structure to artificial neural representations. Prev: PhD at UvA. Intern @ Apple MLR, Work @ Intel Nervana

Reposted by T. Anderson Keller

Yohan J John

@dryohanjohn.bsky.social

· Mar 10