PhD student, NJIT 🎓 | NLP at Bloomberg 🛠️

Website: vermaapurv.com/aboutme/

We found that watermarking shifts AI safety behavior. Our fix: generate 2-4 responses, pick the best one 🎯
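The "generate 2-4 responses, pick the best one" fix is a form of best-of-N sampling. Below is a minimal sketch, not the paper's exact procedure. It assumes a `generate` callable that samples one response from the (watermarked) model and a `score` callable standing in for whatever judges "best", such as a reward model. The post doesn't specify the scorer, so both callables and the `best_of_n` helper are hypothetical.

```python
import itertools
from typing import Callable, List


def best_of_n(
    prompt: str,
    generate: Callable[[str], str],        # hypothetical: samples one response
    score: Callable[[str, str], float],    # hypothetical: e.g. a reward model
    n: int = 4,
) -> str:
    """Sample n candidate responses and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates: List[str] = [generate(prompt) for _ in range(n)]
    # max() returns the first candidate among ties
    return max(candidates, key=lambda response: score(prompt, response))


# Toy usage: canned responses and a length-based score stand in for a real
# model and reward model (purely illustrative assumptions).
canned = itertools.cycle(["Short answer.", "A longer, more helpful answer.", "Meh."])


def toy_generate(prompt: str) -> str:
    return next(canned)


def toy_score(prompt: str, response: str) -> float:
    return float(len(response))


result = best_of_n("Explain watermarking.", toy_generate, toy_score, n=3)
print(result)
```

Keeping `n` small (2-4, per the post) bounds the extra inference cost to a few additional samples per prompt.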

"Watermarking Degrades Alignment in Language Models" 📄

arxiv.org/abs/2506.04462

#AIResearch #AISafety #Watermarking #LLMs

epoch.ai/gradient-upd...

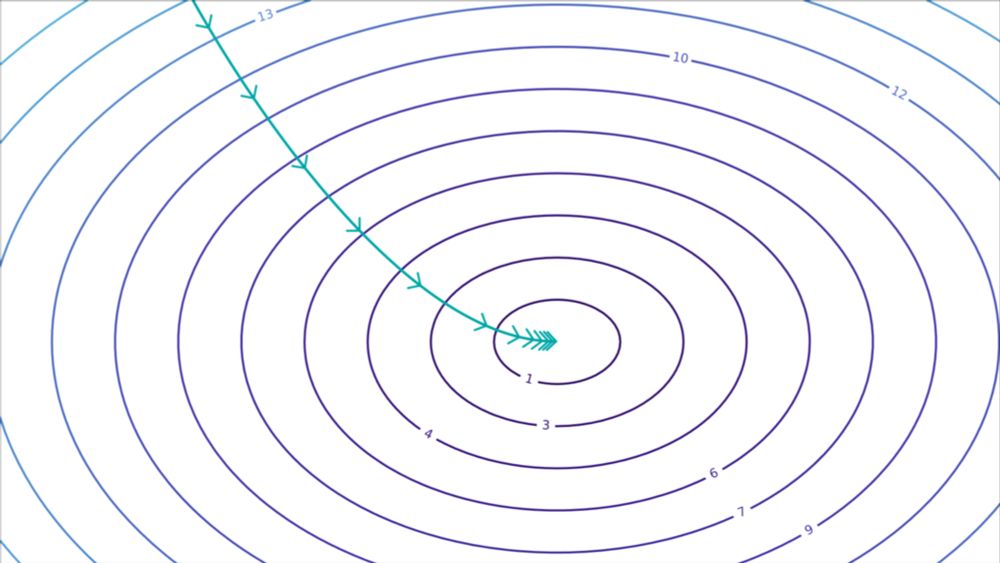

For categorical and Gaussian distributions, they derive that a sample is forgotten at a rate of 1/k after k rounds of recursive training, so 𝐦𝐨𝐝𝐞𝐥 𝐜𝐨𝐥𝐥𝐚𝐩𝐬𝐞 happens more slowly than intuition suggests.