Work: IT at University of Sheffield

1) Increase token spend by running parallel attempts at the same task (as METR do in their evals)

2) The author doesn’t say how he got round the guardrails.

sean.heelan.io/2026/01/18/o...

Grok hasn’t done this. X has done this to Grok, and specifically Musk has.

www.theguardian.com/technology/2...

'Your DA monitors 47 nearby targets. It alerts you about the woman going into a darker section' or 'Your son has been looking at LGBT content. Want me to book him into a conversion camp?'

2/

This framing partially resonates. I'm less keen on starting skill level as the key (on which axis would we measure it, etc.), but if LLM competency is the variable and the exponent, then learned skill matters so much more.

I don’t think this is a good thing, but it seems clear that it’s the way it’s going

Google does seem to be proving that just scaling LLMs is still working

generativehistory.substack.com/p/the-sugar-...

a) model training costs (financial and environmental) are ~5-10x the cost of the final run, and

b) inference costs (financial and environmental) are smaller than assumed

I'm making heroic assumptions for a). No-one outside OpenAI can answer this properly.

Spoiler - this was not the reason!

(from the @resprofnews.bsky.social newsletter)

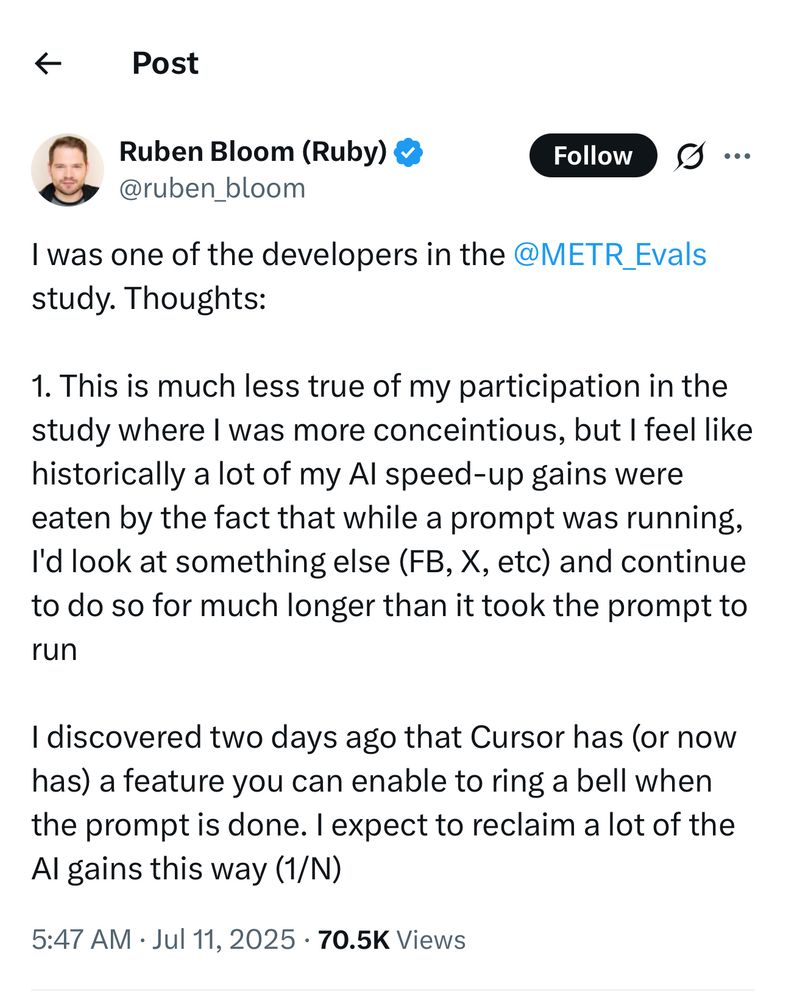

x.com/ruben_bloom/...

observer.co.uk/newsletters

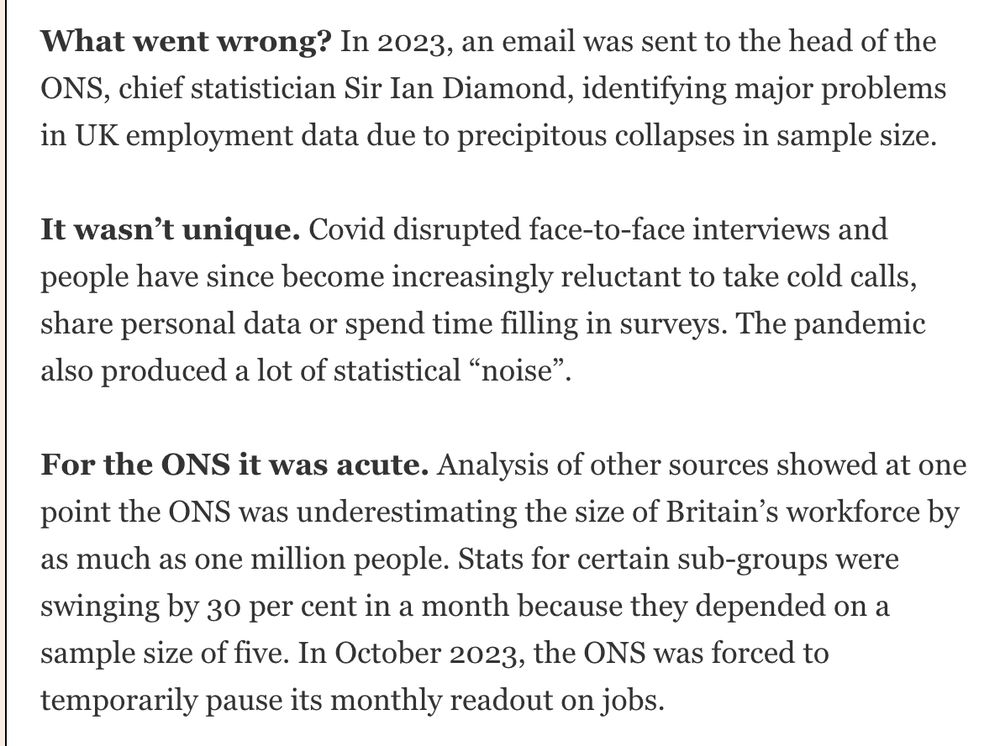

It also appears that this may be another instance of governance problems and of leaders who don't listen.

archive.is/lvPkk

And yes, Newport isn't attractive, and that doesn't help.
