Ben Lipkin

@benlipkin.bsky.social

1K followers

280 following

25 posts

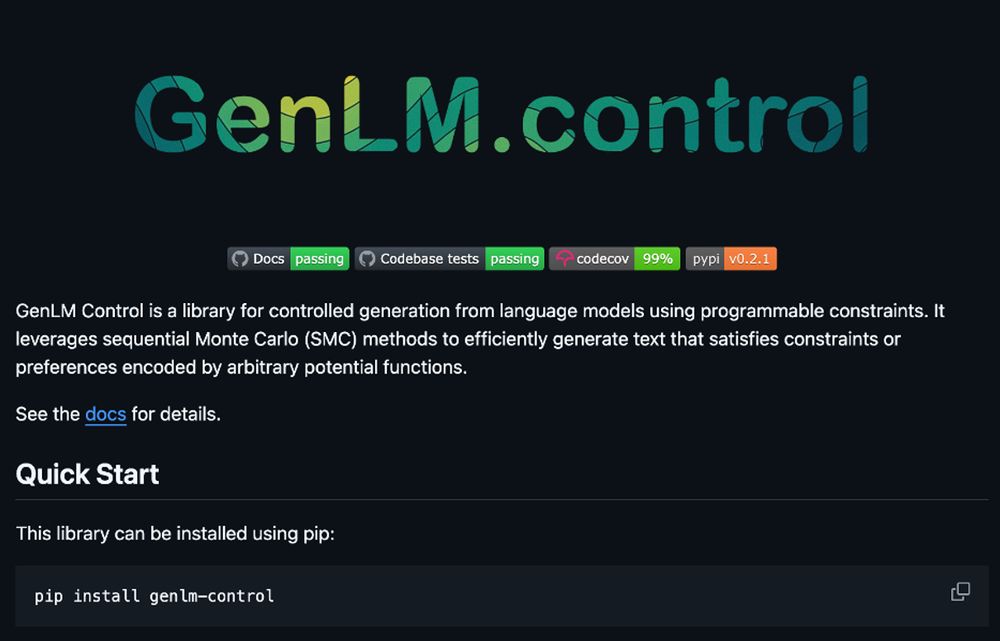

phd @ mit, research @ genlm, intern @ apple

https://benlipkin.github.io/

Pinned

Ben Lipkin

@benlipkin.bsky.social

· May 13

Reposted by Ben Lipkin

Hope Kean

@hopekean.bsky.social

· Aug 3

Evidence from Formal Logical Reasoning Reveals that the Language of Thought is not Natural Language

Humans are endowed with a powerful capacity for both inductive and deductive logical thought: we easily form generalizations based on a few examples and draw conclusions from known premises. Humans al...

tinyurl.com

Reposted by Ben Lipkin

Ben Lipkin

@benlipkin.bsky.social

· May 13

Reposted by Ben Lipkin

Colton Casto

@coltoncasto.bsky.social

· Apr 21

The cerebellar components of the human language network

The cerebellum's capacity for neural computation is arguably unmatched. Yet despite evidence of cerebellar contributions to cognition, including language, its precise role remains debated. Here, we sy...

www.biorxiv.org

Ben Lipkin

@benlipkin.bsky.social

· Apr 10

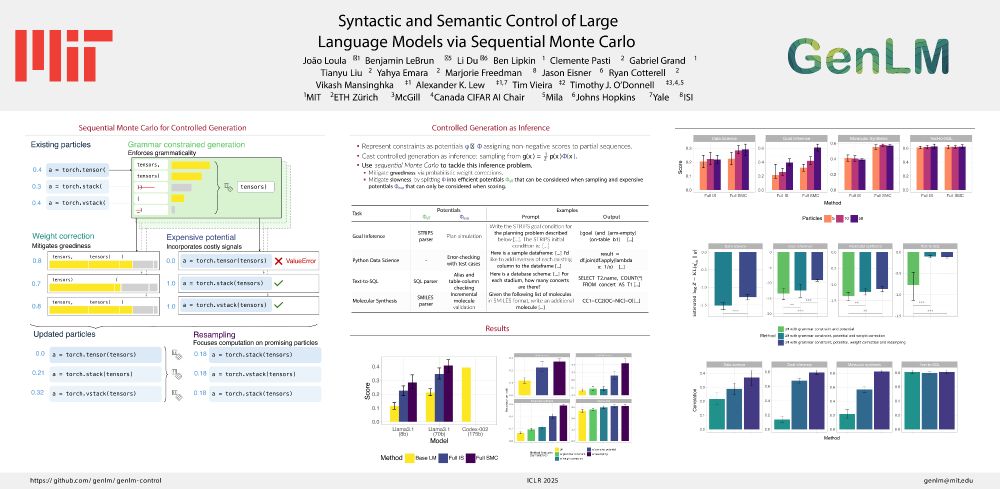

Fast Controlled Generation from Language Models with Adaptive Weighted Rejection Sampling

The dominant approach to generating from language models subject to some constraint is locally constrained decoding (LCD), incrementally sampling tokens at each time step such that the constraint is n...

arxiv.org