Ben Hoover

@bhoov.bsky.social

96 followers · 76 following · 9 posts

PhD student @GA Tech; Research Engineer @IBM Research. Thinking about Associative Memory, Hopfield Networks, and AI.



Ben Hoover (@bhoov.bsky.social) · Dec 3

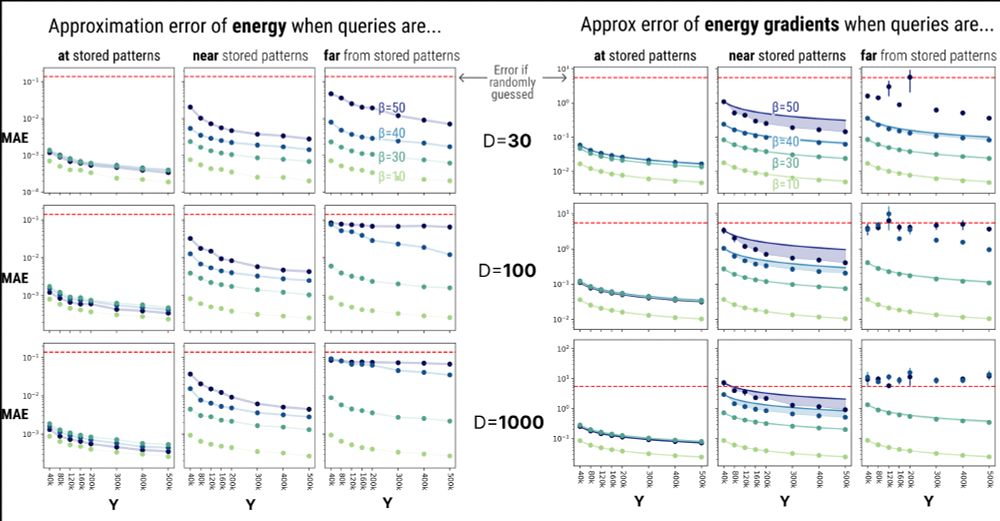

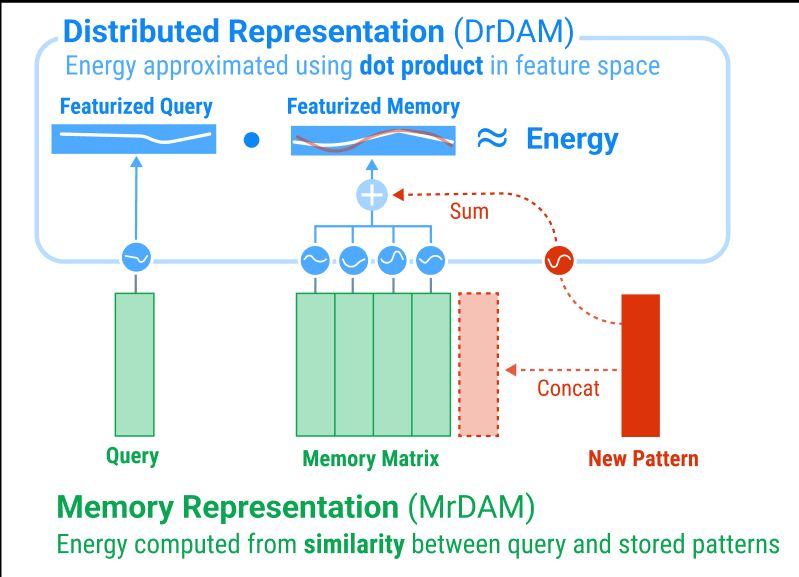

Dense Associative Memory Through the Lens of Random Features

Dense Associative Memories are high storage capacity variants of the Hopfield networks that are capable of storing a large number of memory patterns in the weights of the network of a given size. Thei...

arxiv.org