Bruno Ferman

@brunoferman.bsky.social

990 followers

260 following

19 posts

Professor at Sao Paulo School of Economics - FGV

Econometrics/Applied Micro/affiliate @JPAL

MIT Econ PhD

https://sites.google.com/site/brunoferman/home

Pinned

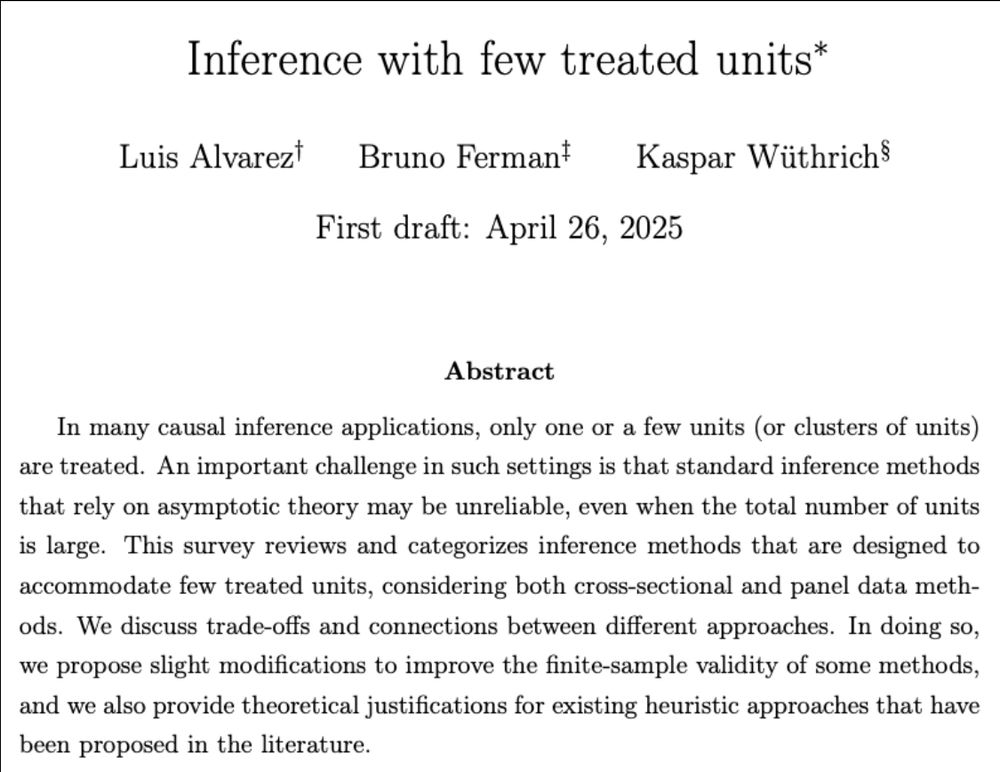

Bruno Ferman

@brunoferman.bsky.social

· May 1

Reposted by Bruno Ferman

Reposted by Bruno Ferman

Bruno Ferman

@brunoferman.bsky.social

· May 1

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Bruno Ferman

@brunoferman.bsky.social

· Apr 29

Reposted by Bruno Ferman

Nico Ajzenman

@nicolasajz.bsky.social

· Jan 27

Discrimination in the Formation of Academic Networks: A Field Experiment on #EconTwitter

This paper experimentally documents discrimination in the formation of professional networks among academic economists. We created fictitious human-like bot acc

papers.ssrn.com