Can Demircan

@candemircan.bsky.social

56 followers

260 following

8 posts

PhD student in Munich, working on machine learning and cognitive science

Reposted by Can Demircan

Taylor Webb

@taylorwwebb.bsky.social

· Mar 10

Emergent Symbolic Mechanisms Support Abstract Reasoning in Large Language Models

Many recent studies have found evidence for emergent reasoning capabilities in large language models, but debate persists concerning the robustness of these capabilities, and the extent to which they ...

arxiv.org

Reposted by Can Demircan

Milena Rmus

@milenamr7.bsky.social

· Feb 26

Towards Automation of Cognitive Modeling using Large Language Models

Computational cognitive models, which formalize theories of cognition, enable researchers to quantify cognitive processes and arbitrate between competing theories by fitting models to behavioral data....

arxiv.org

Reposted by Can Demircan

Eric Schulz

@ericschulz.bsky.social

· Jan 22

Sparse Autoencoders Reveal Temporal Difference Learning in Large Language Models

In-context learning, the ability to adapt based on a few examples in the input prompt, is a ubiquitous feature of large language models (LLMs). However, as LLMs' in-context learning abilities continue...

arxiv.org

Can Demircan

@candemircan.bsky.social

· Dec 10

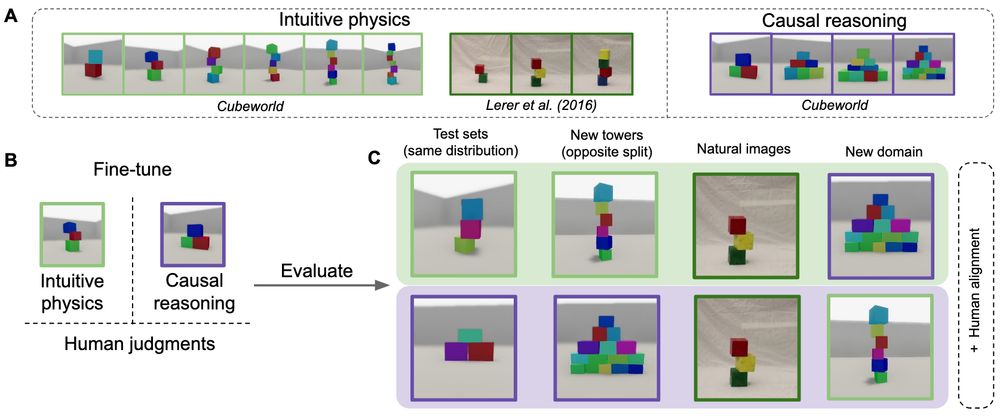

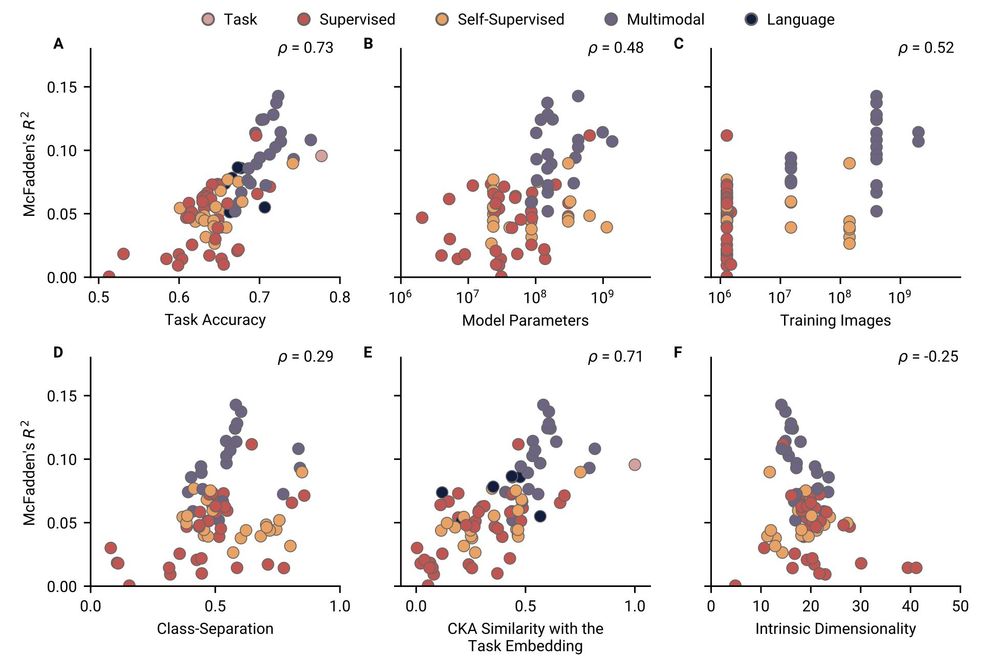

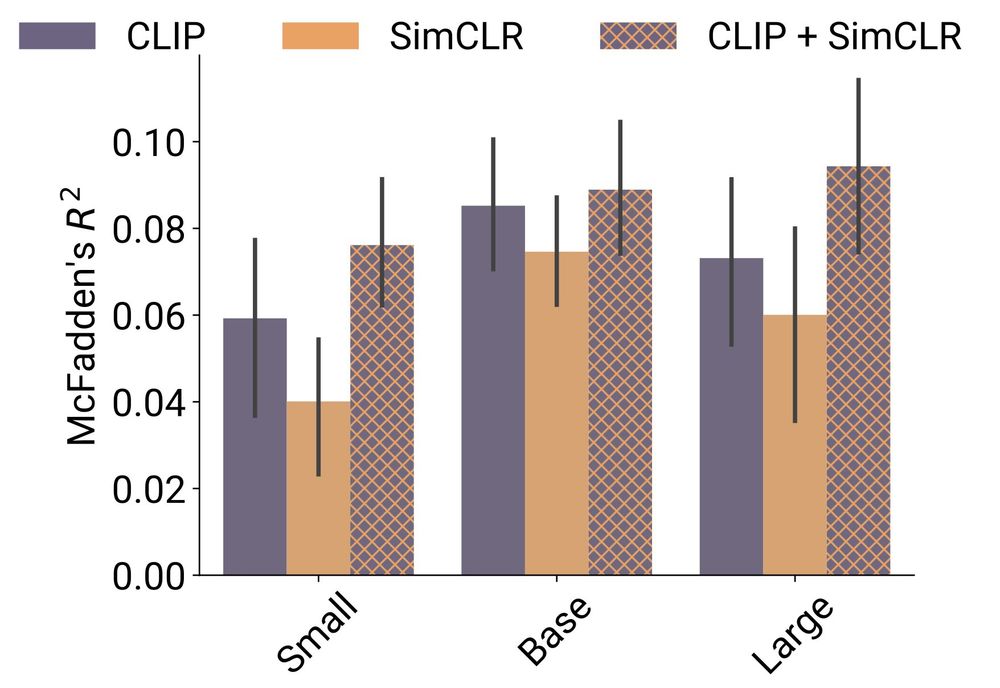

Evaluating alignment between humans and neural network representations in image-based learning tasks

Humans represent scenes and objects in rich feature spaces, carrying information that allows us to generalise about category memberships and abstract functions with few examples. What determines wheth...

arxiv.org

Can Demircan

@candemircan.bsky.social

· Dec 5

Reposted by Can Demircan