Cansu Sancaktar

@cansusancaktar.bsky.social

72 followers

44 following

8 posts

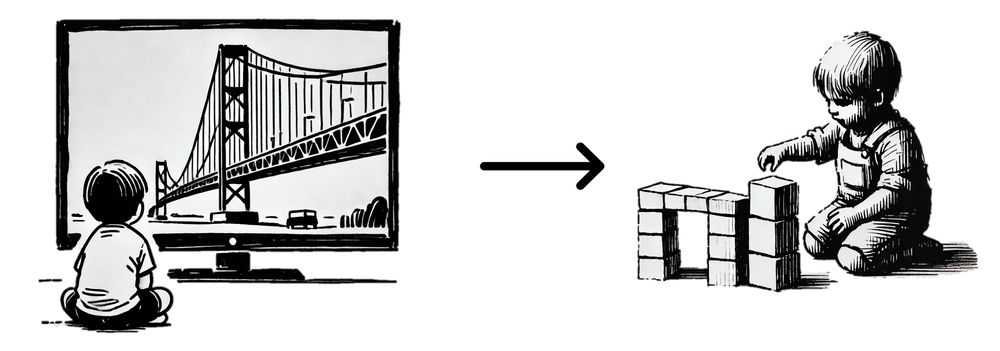

PhD Student @ Max Planck Institute for Intelligent Systems & University of Tübingen | Working on intrinsically motivated open-ended reinforcement learning 🤖
