Catherine Arnett @ 🍁COLM🍁

@catherinearnett.bsky.social

3.9K followers

570 following

98 posts

NLP Researcher at EleutherAI, PhD UC San Diego Linguistics.

Previously PleIAs, Edinburgh University.

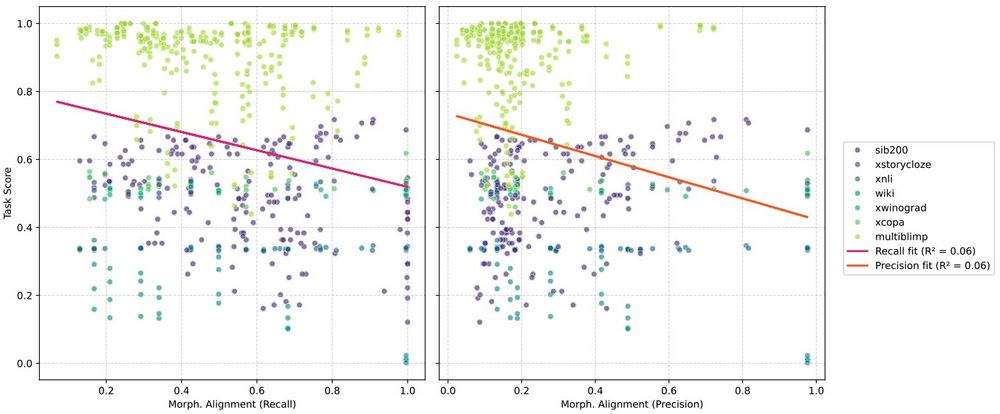

Interested in multilingual NLP, tokenizers, open science.

📍Boston. She/her.

https://catherinearnett.github.io/

Reposted by Catherine Arnett @ 🍁COLM🍁

Pedro Ortiz Suarez

@pjox.bsky.social

· Jul 21