Kerry Champion

@causalai.bsky.social

Investigating Causal AI Commercialization

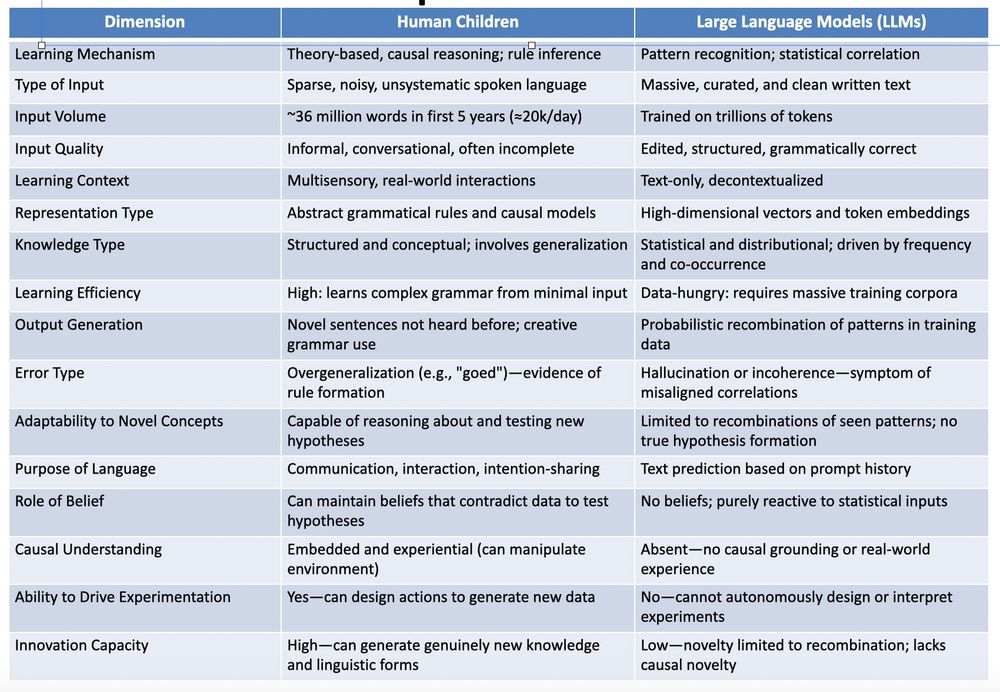

LLMs are missing the “theoretical abstraction” capability we see in children.

Multiple folks have pointed this out, for example @teppofelin.bsky.social and Holweg in “Theory Is All You Need: AI, Human Cognition, and Causal Reasoning”. papers.ssrn.com/sol3/papers....

#AI #CausalAI #SymbolicAI

1/n

June 22, 2025 at 10:39 PM

Reposted by Kerry Champion

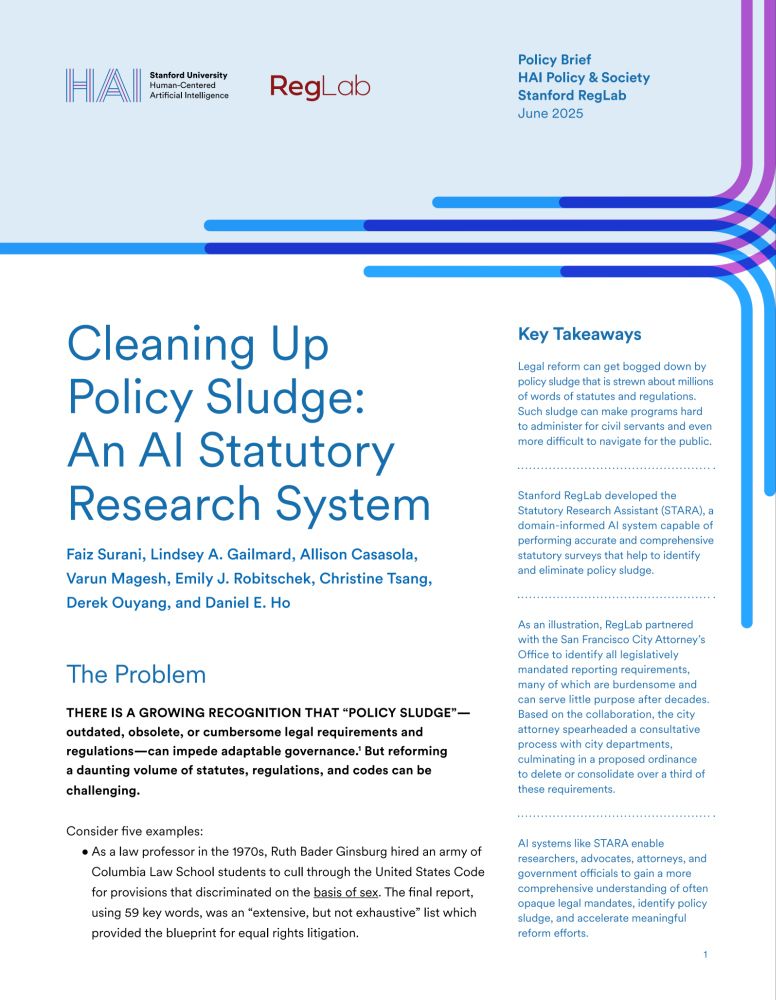

Legal reform can get bogged down by outdated or cumbersome regulations. Our latest brief with Stanford RegLab scholars presents an AI tool that helps governments—such as the San Francisco City Attorney's Office—identify and eliminate such “policy sludge.” hai.stanford.edu/policy/clean...

Cleaning Up Policy Sludge: An AI Statutory Research System | Stanford HAI

This brief introduces a novel AI tool that performs statutory surveys to help governments—such as the San Francisco City Attorney's Office—identify policy sludge and accelerate legal reform.

hai.stanford.edu

June 19, 2025 at 4:50 PM

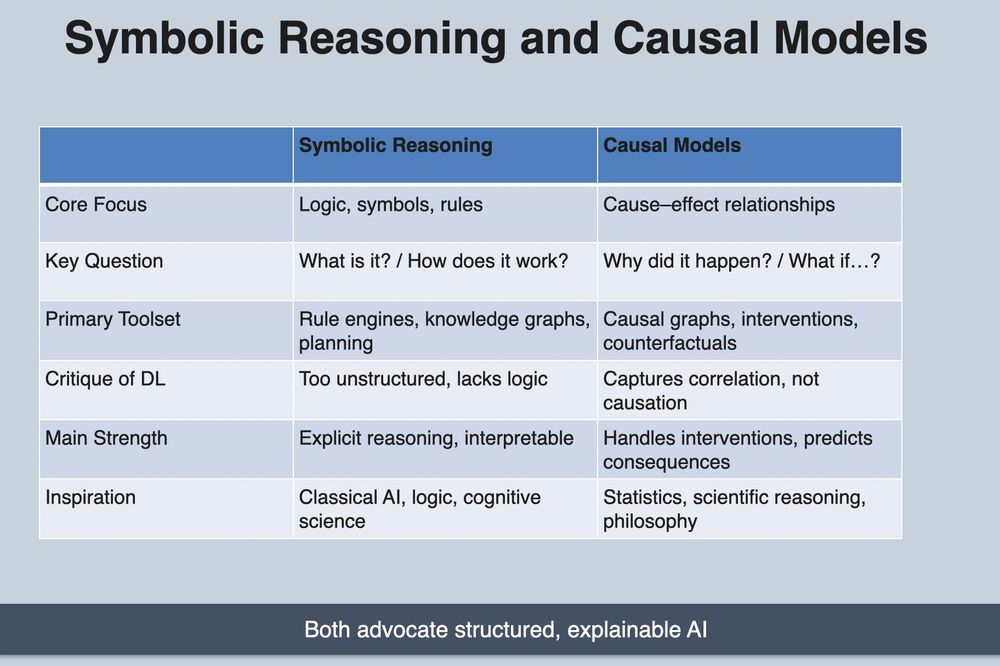

@jwmason.bsky.social The other thing worth knowing is that bigger LLMs are not the only path forward for AI. Combining LLMs with symbolic/causal models holds the promise of creating hybrid AI systems that are much more reliable in reflecting the world as it is.

#AI #SymbolicAI #CausalAI

Chatbots — LLMs — do not know facts and are not designed to be able to accurately answer factual questions. They are designed to find and mimic patterns of words, probabilistically. When they’re “right” it’s because correct things are often written down, so those patterns are frequent. That’s all.

June 19, 2025 at 3:06 PM

LLMs form a semi-accurate representation of the world as it is reflected in the writing they train on. A next step would be to create hybrid AIs that combine LLMs with symbolic and causal models that have explicit (and more accurate/auditable) representations of the world. #AI #SymbolicAI #CausalAI

LLMs don’t form a representation of the world and hence can’t distinguish between facts/falsehoods, not even probabilistically. How could they? They don’t have perception. What they have is a representation of the written word. And unfortunately the written word is much more equivocal than the world

June 19, 2025 at 2:57 PM

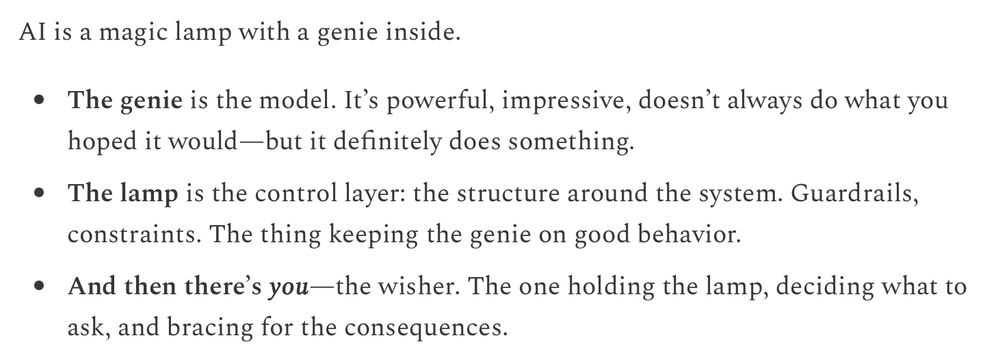

“Wishes have consequences. Especially when they run in production.” - ain't that a fact!

Cassie Kozyrkov's genie metaphor rings true:

1/n

June 18, 2025 at 7:48 PM

Reposted by Kerry Champion

First, through a think-aloud study (N=16) in which participants use ChatGPT to answer objective questions, we identify 3 features of LLM responses that shape users' reliance: #explanations (supporting details for answers), #inconsistencies in explanations, and #sources.

2/7

February 28, 2025 at 3:21 PM

June 17, 2025 at 11:51 PM

@jessicahullman.bsky.social persuasively argues that current AI is a poor tool for decisions that fit the FIRE profile (forward-looking, individual/idiosyncratic, requiring reasoning or experimentation/intervention).

Hmm… does this call for #CausalAI?

statmodeling.stat.columbia.edu/2025/06/05/w...

When are AI/ML models unlikely to help with decision-making? | Statistical Modeling, Causal Inference, and Social Science

statmodeling.stat.columbia.edu

June 17, 2025 at 10:35 PM

Take-aways from Apple opening up its on-device LLM:

1) In the medium term, “Pervasive AI” will have more reach than “Agentic AI”

2) AI is best implemented through systems of components, not a single black-box neural net

3) Use-case-specific adjustments are needed to balance latency, cost, reliability, and safety

1/n

June 17, 2025 at 10:21 PM
