Mastodon: @[email protected]

When I choose to speak, I speak for myself.

🪄 Tensor-enjoyer 🧪

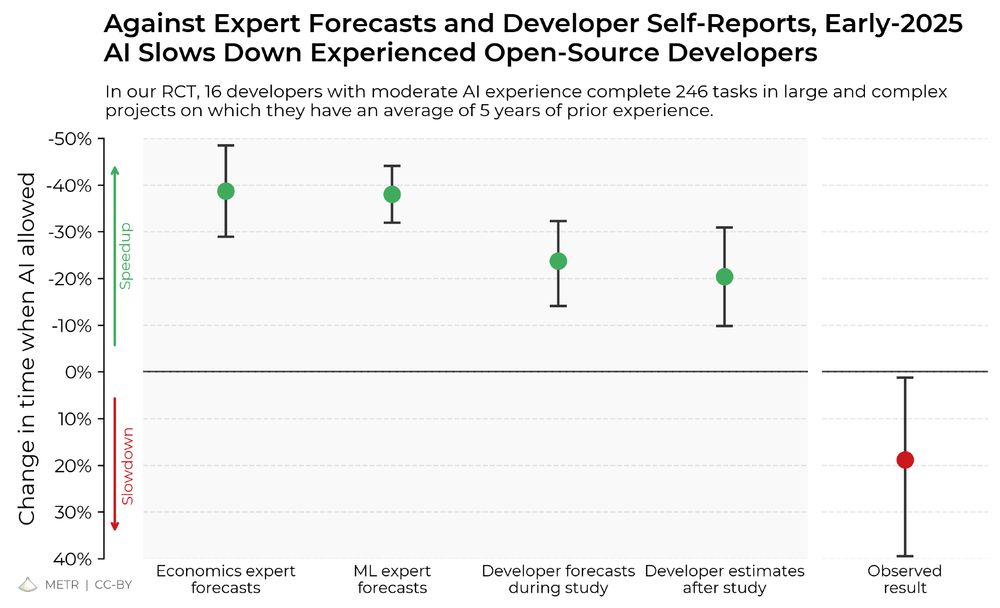

The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

I’m now on the policy team at Model Evaluation and Threat Research (METR). Excited to be “doing AI policy” full-time.

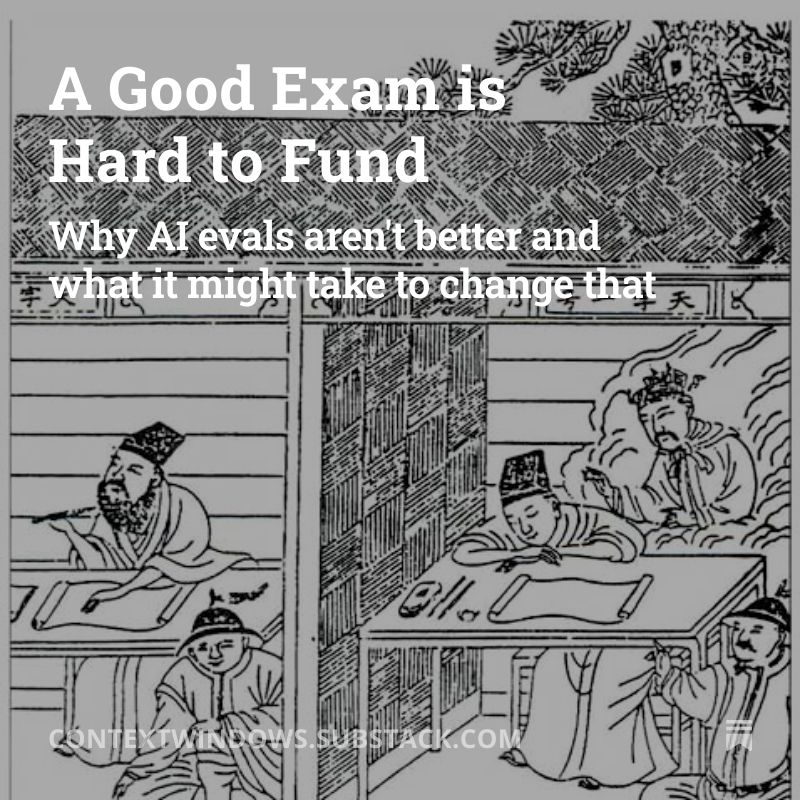

In a new post, I explain how the standardized testing industry works and write about lessons it may have for the AI evals ecosystem.

open.substack.com/pub/contextw...

But they can map (state₁, action₁, state₂, action₂ ... stateₙ, actionₙ) → stateₙ₊₁

Just reformulate the task!

Apple introduces TarFlow, a new Transformer-based variant of Masked Autoregressive Flows.

SOTA on likelihood estimation for images, quality and diversity comparable to diffusion models.

arxiv.org/abs/2412.06329

I’m interested in experiments that look into how much finetuning can “roll back” a post-trained model to its base model perplexity on the original distribution.

Has anyone seen an experiment like this run?

open.substack.com/pub/contextw...

If you have 100 verifiable claims you want information on but can only afford to check 10, fund markets on each. Later, use a randomized ordering of them to check the first 10. Resolve those to yes/no, refund the rest.
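A rough sketch of the settlement step, assuming each claim has an ID and some way to check it. The names `settle_markets`, `verify`, and `seed` are made up for illustration and don't refer to any real market platform.

```python
import random


def settle_markets(claim_ids, budget, verify, seed=None):
    """Shuffle the claims, verify the first `budget`, refund the rest.

    `verify` is a hypothetical callback returning True/False for a claim.
    `seed` is an assumption added here only to make the draw reproducible.
    """
    rng = random.Random(seed)
    order = list(claim_ids)
    rng.shuffle(order)  # randomized ordering, drawn after trading has happened

    outcomes = {}
    for claim in order[:budget]:
        # Only these claims are actually checked and resolved.
        outcomes[claim] = "yes" if verify(claim) else "no"
    for claim in order[budget:]:
        # Unchecked markets are unwound and traders get their money back.
        outcomes[claim] = "refund"
    return outcomes


if __name__ == "__main__":
    # Toy stand-in for a real check: even-numbered claims are "true".
    results = settle_markets(range(100), budget=10, verify=lambda c: c % 2 == 0)
    resolved = [c for c, r in results.items() if r != "refund"]
    print(f"resolved {len(resolved)} markets, refunded {100 - len(resolved)}")
```

Because traders can't know in advance which 10 will be checked, every market has some chance of resolving, so there's a reason to price all 100 honestly.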

1/ The event felt different than other conferences I've been to. Rather than the meeting of a tribe or a cluster of tribes around a shared idea, it was two conflicting tribes.

I would like to see more events like this.

open.substack.com/pub/understa...

Would you pay 1% of your earnings per year to protect a year’s worth of future earnings if most jobs are suddenly automated away?

Now, they have two hard problems.

“$10,000? My AI could’ve figured out the problem in an instant!”

The bioactuator replied: “It’s $1 for knowing which screw to turn, and $9,999 for turning it”

- M. Shelley in Frankenstein
