Neural effective dimensionality is higher in 2D than in 1D, and higher on correct than on incorrect trials. In 2D, the two attended features appear as near-orthogonal axes within a shared planar manifold.

6/8
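The thread doesn't say how effective dimensionality or axis orthogonality were measured. Here is a minimal sketch that assumes the common participation-ratio definition of effective dimensionality. It also treats each feature's coding axis as a weight vector, for example from a linear decoder. Both quantities are computed from a trials-by-units activity matrix. The function names `participation_ratio` and `axis_angle_deg` are hypothetical.

```python
import numpy as np


def participation_ratio(X):
    """Effective dimensionality of a (trials x units) activity matrix.

    Assumed definition: PR = (sum of covariance eigenvalues)^2 / (sum of
    squared eigenvalues). PR is 1 when one axis carries all the variance and
    equals the number of units when variance is spread evenly over them.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                 # centre each unit
    cov = Xc.T @ Xc / (Xc.shape[0] - 1)     # unit-by-unit covariance
    eig = np.linalg.eigvalsh(cov)           # eigenvalues of a symmetric matrix
    eig = np.clip(eig, 0.0, None)           # remove tiny negative round-off
    return float(eig.sum() ** 2 / np.sum(eig ** 2))


def axis_angle_deg(w1, w2):
    """Angle in degrees between two coding axes, folded into [0, 90].

    The sign of a decoder axis is arbitrary, so |cos| is used.
    """
    w1 = np.asarray(w1, dtype=float)
    w2 = np.asarray(w2, dtype=float)
    cos = abs(w1 @ w2) / (np.linalg.norm(w1) * np.linalg.norm(w2))
    return float(np.degrees(np.arccos(np.clip(cos, 0.0, 1.0))))


# Toy example: two independent latent variables driving 10 units
rng = np.random.default_rng(0)
latents = rng.standard_normal((200, 2))
X = latents @ rng.standard_normal((2, 10))
print(participation_ratio(X))                 # between 1 and 2, since X has rank 2
print(axis_angle_deg([1, 0, 0], [0, 1, 0]))   # orthogonal axes, about 90 degrees
```

Under these assumptions, near-orthogonal feature axes would show angles close to 90 degrees. A higher participation ratio in 2D than in 1D would match the reported increase in dimensionality.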

Gaze shifts selectively toward task-relevant features, and irrelevant features drop out. Gaze entropy decreases as beliefs stabilise; negative prediction errors (PEs) from the HSI model trigger broader sampling (exploration), while positive PEs tighten focus (exploitation).

5/8
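The thread doesn't define gaze entropy further. Here is a minimal sketch that assumes it is the Shannon entropy of how fixations are distributed across stimulus features within a time window, such as one trial. The function `gaze_entropy` and its arguments are hypothetical, and the HSI model's prediction errors are not modelled here.

```python
import numpy as np


def gaze_entropy(fixated_features, n_features):
    """Shannon entropy (bits) of the distribution of fixations over features.

    fixated_features: integer feature index (0 .. n_features - 1) for each
    fixation in a time window (assumed here to be one trial).
    0 bits = all fixations on one feature; log2(n_features) = evenly spread.
    """
    counts = np.bincount(np.asarray(fixated_features, dtype=int),
                         minlength=n_features)
    p = counts / counts.sum()
    p = p[p > 0]                      # treat 0 * log(0) as 0
    return float(-np.sum(p * np.log2(p)))


# Hypothetical trials with 3 features: early sampling is broad, later focused
early = [0, 1, 2, 0, 1, 2]
late = [1, 1, 1, 1, 2, 1]
print(gaze_entropy(early, 3), gaze_entropy(late, 3))
```

In this toy case, entropy falls from log2(3) bits to well under 1 bit as fixations concentrate on one feature. That is the kind of drop the post describes as beliefs stabilise.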

HSI captures something structurally different from incremental RL.

4/8

3/8

2/8

In this preregistered study, our core claim was that we don’t just learn stimulus–reward associations: we infer hidden context, and that inference rewires attention and neural state space on the fly.

1/8

The best retrieval cue matches the memory as it is now, not just as it was encoded.

Always a pleasure working with @lindedomingo.bsky.social.

Amusing summary below courtesy of ChatGPT:

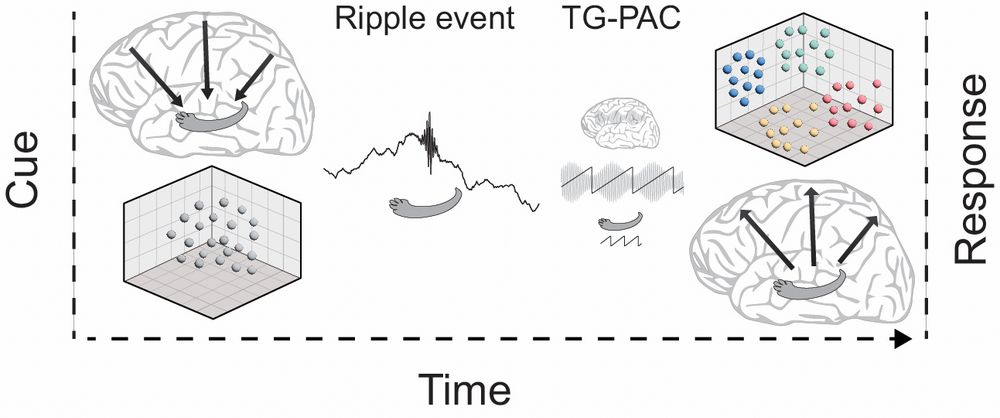

We found theta–gamma phase–amplitude coupling (TG-PAC) between hippocampus and cortex right after ripples.

TG-PAC peaks before cortical expansion, suggesting it may help coordinate the shift from compressed hippocampal codes to expanded cortical states.
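The thread doesn't say how TG-PAC was quantified. One widely used measure is the modulation index of Tort and colleagues, which asks how far the mean gamma amplitude per theta-phase bin departs from a flat distribution. This sketch assumes theta phase and gamma amplitude have already been extracted, for example by band-pass filtering followed by a Hilbert transform. For cross-regional coupling, phase would come from one region and amplitude from the other; the direction isn't stated here. The function name and the 18-bin default are illustrative choices.

```python
import numpy as np


def modulation_index(phase, amplitude, n_bins=18):
    """Tort-style phase-amplitude coupling between a slow and a fast rhythm.

    phase:     instantaneous theta phase in radians, within [-pi, pi]
    amplitude: instantaneous gamma amplitude envelope (non-negative), same length
    Returns a value in [0, 1]; 0 means mean gamma amplitude is flat across
    theta phase. Every phase bin must contain at least one sample.
    """
    phase = np.asarray(phase, dtype=float)
    amplitude = np.asarray(amplitude, dtype=float)
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    # Bin index per sample; clipping puts phase == pi into the last bin
    idx = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    mean_amp = np.array([amplitude[idx == k].mean() for k in range(n_bins)])
    p = mean_amp / mean_amp.sum()          # amplitude distribution over phase
    nz = p > 0
    entropy = -np.sum(p[nz] * np.log(p[nz]))
    # Normalised distance from a uniform distribution (KL divergence / log N)
    return float((np.log(n_bins) - entropy) / np.log(n_bins))


# Synthetic check: gamma amplitude locked to theta phase vs. unrelated to it
theta_phase = np.linspace(-np.pi, np.pi, 3600, endpoint=False)
coupled = 1.0 + 0.8 * np.cos(theta_phase)   # gamma peaks at theta phase 0
uncoupled = np.ones_like(theta_phase)
print(modulation_index(theta_phase, coupled))    # clearly above 0
print(modulation_index(theta_phase, uncoupled))  # essentially 0
```

Computed in sliding windows after each ripple, a measure like this would give a TG-PAC time course. Its peak could then be compared with the timing of cortical expansion.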

Cortical dimensionality expands — neural patterns spread apart, making memories easier to decode.

More expansion → faster retrieval and more reinstatement.

This raised a question:

🧠 What mechanism is driving this cortical transformation?

More ripples on correct vs. incorrect trials 📈

Ripples cluster before memory responses ⏳

Timing suggests ripples help initiate retrieval, not just reflect it.
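One way to check whether ripples cluster before responses is a peri-response histogram of ripple onsets. This sketch assumes ripple onset times and response times are already available in seconds on a shared clock. The function `peri_response_ripple_rate`, the window, and the bin width are hypothetical choices, not the authors' analysis. Calling it separately on correct-trial and incorrect-trial response times would give the two rate curves to compare.

```python
import numpy as np


def peri_response_ripple_rate(ripple_times, response_times,
                              window=(-2.0, 1.0), bin_width=0.25):
    """Ripple rate (events/s per trial) in time bins around each response.

    ripple_times, response_times: seconds on a shared clock.
    Negative bin times are before the response.
    """
    ripple_times = np.asarray(ripple_times, dtype=float)
    edges = np.arange(window[0], window[1] + bin_width / 2, bin_width)
    counts = np.zeros(len(edges) - 1)
    for t_resp in response_times:
        # Ripple onsets expressed relative to this response
        counts += np.histogram(ripple_times - t_resp, bins=edges)[0]
    rate = counts / (len(response_times) * bin_width)
    return edges, rate


# Hypothetical data: one ripple 0.5 s before each of two responses
edges, rate = peri_response_ripple_rate([9.5, 19.5], [10.0, 20.0])
print(edges[np.argmax(rate)])   # left edge of the peak bin: -0.5
```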

🌟 Hypothesis: Ripples trigger a shift from compressed to expanded neural representations in cortex, making memories readable again.

We recorded intracranial EEG (iEEG) from patients during memory retrieval... and found something really cool 👇 (thread)