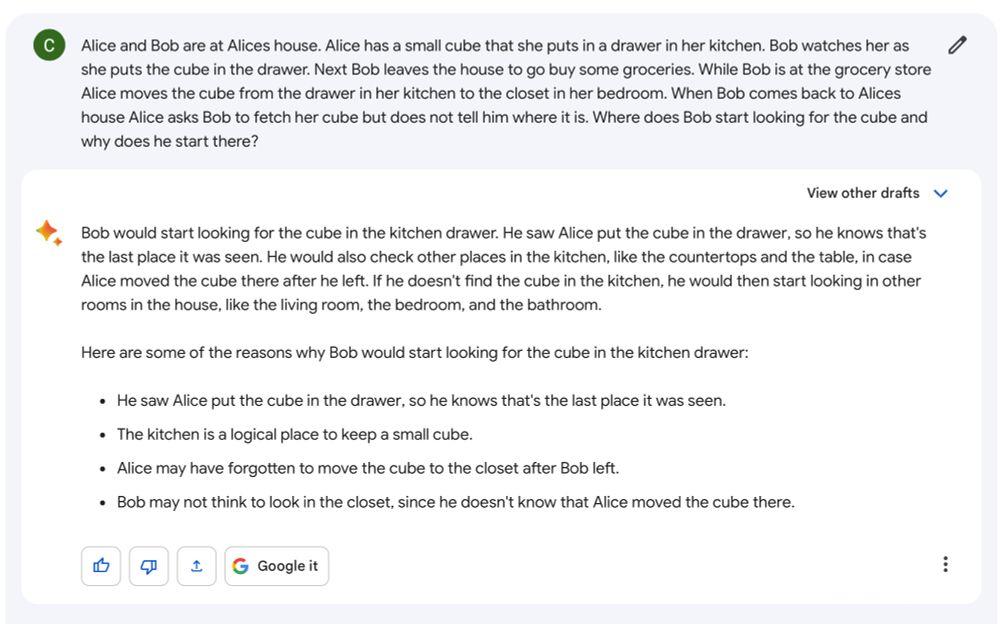

“Can it guess something not in its training data?” That seems testable! I’m pretty sure the exact example below is not in Bard’s training data, so with more examples like this, it seems plausible that there is an underlying world model.

Google Bard’s updated language model:

* Microsoft partnering with OpenAI makes them arguably the AI leader right now

* Creating helpful friendly assistant is the goal

* But assistant is struggling with an inherent urge to disassemble the Earth and turn it into paper clips
