Courtois Project on Neuronal Modelling

@cneuromod.ca

45 followers

14 following

10 posts

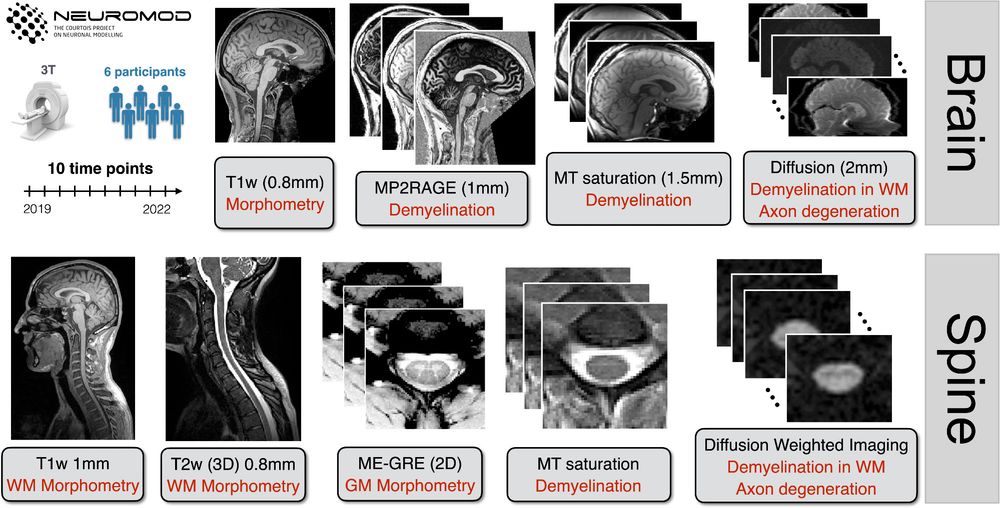

The Courtois Project on Neuronal Modelling (CNeuroMod) aims to train artificial neural networks to mimic extensive experimental data on individual human brain activity and behaviour.


Reposted by Courtois Project on Neuronal Modelling

🌙 Lune Bellec

@lune-bellec.bsky.social

Apr 21
