Daniel Scalena

@danielsc4.it

420 followers

190 following

15 posts

PhDing @unimib 🇮🇹 & @gronlp.bsky.social 🇳🇱, interpretability et similia

danielsc4.it

Daniel Scalena

@danielsc4.it

· May 23

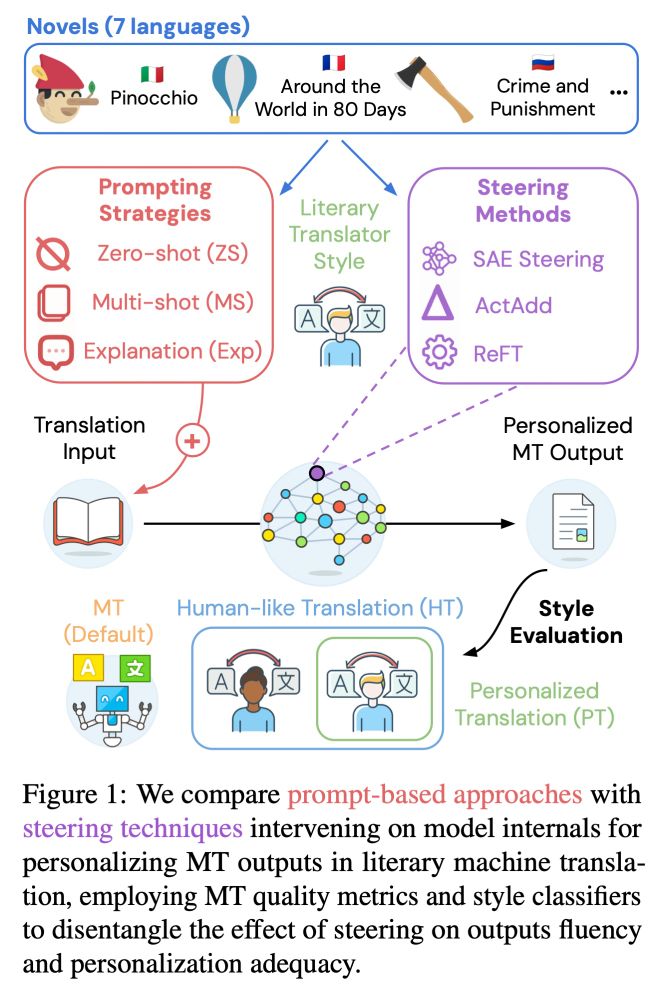

Steering Large Language Models for Machine Translation Personalization

High-quality machine translation systems based on large language models (LLMs) have simplified the production of personalized translations reflecting specific stylistic constraints. However, these sys...

arxiv.org
