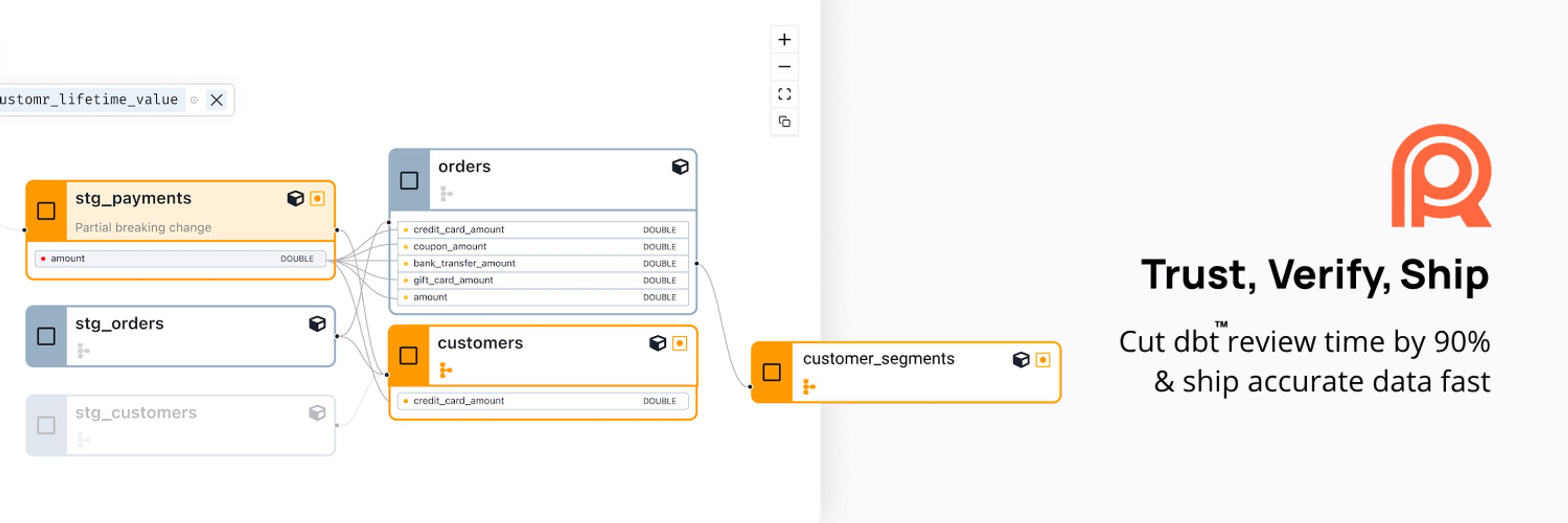

Recce - Trust, Verify, Ship

@datarecce.bsky.social

Metadata analysis eliminates unnecessary validation queries.

Data practitioners commonly validate dbt changes by checking row counts across all downstream models: querying 47 models, at significant warehouse cost, to find the 3 that actually changed.

Try using metadata only.

August 30, 2025 at 9:41 AM
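
A minimal Python sketch of the metadata-only idea, assuming two parsed `manifest.json` files (base and PR). dbt records a `checksum` for each node and a top-level `child_map`. Comparing checksums flags models whose SQL changed, and walking `child_map` lists what sits downstream, all without a warehouse query. The function names and toy manifests are hypothetical, and this is not Recce's implementation.

```python
from collections import deque


def modified_models(base: dict, current: dict) -> set[str]:
    """unique_ids of models that are new or whose file checksum differs from base."""
    changed = set()
    for uid, node in current["nodes"].items():
        if node.get("resource_type") != "model":
            continue
        old = base["nodes"].get(uid)
        if old is None or old["checksum"]["checksum"] != node["checksum"]["checksum"]:
            changed.add(uid)
    return changed


def downstream_of(child_map: dict[str, list[str]], roots: set[str]) -> set[str]:
    """Breadth-first walk of child_map; returns descendants of roots, roots excluded."""
    seen: set[str] = set()
    queue = deque(roots)
    while queue:
        for child in child_map.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen - roots


def _model(digest: str) -> dict:
    # Trimmed-down node: real manifest nodes carry many more fields.
    return {"resource_type": "model", "checksum": {"name": "sha256", "checksum": digest}}


base = {"nodes": {"model.shop.stg_orders": _model("aaa"),
                  "model.shop.orders": _model("bbb"),
                  "model.shop.customers": _model("ccc")}}
current = {"nodes": {"model.shop.stg_orders": _model("a2a"),  # edited in the PR
                     "model.shop.orders": _model("bbb"),
                     "model.shop.customers": _model("ccc")},
           "child_map": {"model.shop.stg_orders": ["model.shop.orders"],
                         "model.shop.orders": [],
                         "model.shop.customers": []}}

changed = modified_models(base, current)
print(changed)                                        # {'model.shop.stg_orders'}
print(downstream_of(current["child_map"], changed))   # {'model.shop.orders'}
```

In a real `child_map`, tests appear as children too, so filtering by resource type may be needed. A changed checksum also only means the model file changed, not that its data did, so this narrows what to check rather than replacing validation.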

Column-level lineage emerges from standard dbt artifacts.

Running `dbt run` and `dbt docs generate` produces artifacts that enable column-level lineage visualization and impact analysis.

cloud.reccehq.com accepts dbt artifacts to demonstrate metadata analysis

August 25, 2025 at 9:36 PM
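
The schema half of this can be sketched in Python, assuming two `catalog.json` files (written by `dbt docs generate`) from the base and PR environments. Each catalog node lists its columns with a `type`; the hypothetical `column_diff` below compares them without touching the warehouse. Full column-level lineage additionally requires parsing each model's compiled SQL, which this sketch does not attempt.

```python
def _columns(catalog: dict, unique_id: str) -> dict[str, str]:
    """Lower-cased column name -> data type for one node of a catalog.json dict."""
    node = catalog.get("nodes", {}).get(unique_id, {})
    # Lower-case names so warehouses that upper-case identifiers compare cleanly.
    return {name.lower(): col["type"] for name, col in node.get("columns", {}).items()}


def column_diff(base: dict, current: dict, unique_id: str) -> dict[str, list[str]]:
    """Columns added, removed, or retyped between base and current catalogs."""
    old, new = _columns(base, unique_id), _columns(current, unique_id)
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "type_changed": sorted(c for c in old.keys() & new.keys() if old[c] != new[c]),
    }


# Catalog nodes trimmed to the one field this sketch reads ("type").
base = {"nodes": {"model.shop.orders": {"columns": {
    "ORDER_ID": {"type": "NUMBER"}, "AMOUNT": {"type": "NUMBER"}, "STATUS": {"type": "TEXT"}}}}}
current = {"nodes": {"model.shop.orders": {"columns": {
    "ORDER_ID": {"type": "NUMBER"}, "AMOUNT": {"type": "FLOAT"}, "DISCOUNT": {"type": "NUMBER"}}}}}

print(column_diff(base, current, "model.shop.orders"))
# {'added': ['discount'], 'removed': ['status'], 'type_changed': ['amount']}
```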

A partial breaking change can have no impact on downstream models.

Though breaking change analysis works at the column level, identifying a partial breaking change still isn't enough to get the precise impact radius.

Why breaking change analysis isn't enough 👇

reccehq.com/blog/Buildin...

July 27, 2025 at 8:01 PM
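
To see how a partial breaking change can leave some downstream models untouched, here is a small Python sketch over a hypothetical column-level lineage map (upstream column to the columns that read it). Only `stg_orders.amount` changes; `order_counts` is downstream of `stg_orders` at the model level but depends only on `order_id`, so it falls outside the impact set. All model and column names are made up.

```python
from collections import deque

Column = tuple[str, str]  # (model, column); names below are hypothetical


def impacted(lineage: dict[Column, list[Column]], changed: set[Column]) -> set[Column]:
    """Every column reachable from the changed columns through lineage edges."""
    seen: set[Column] = set()
    queue = deque(changed)
    while queue:
        for reader in lineage.get(queue.popleft(), []):
            if reader not in seen:
                seen.add(reader)
                queue.append(reader)
    return seen


# Edge: upstream column -> downstream columns whose SELECT expression reads it.
lineage = {
    ("stg_orders", "amount"): [("orders", "amount")],
    ("orders", "amount"): [("revenue_daily", "total_revenue")],
    ("stg_orders", "order_id"): [("orders", "order_id")],
    ("orders", "order_id"): [("order_counts", "n_orders")],
}

hit = impacted(lineage, {("stg_orders", "amount")})
print(sorted(hit))                          # [('orders', 'amount'), ('revenue_daily', 'total_revenue')]
print(sorted({model for model, _ in hit}))  # ['orders', 'revenue_daily']
```

This only follows columns that appear in SELECT expressions. A changed column used in a `WHERE` or `JOIN` condition can alter every row downstream, which is how a partial breaking change can also have full impact, so a real analysis has to track those dependencies too.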

🚨 Breaking Change Analysis Gap Confusing Data Teams

Software Dev: Breaking changes = planned improvements

Analytics Engineering: Breaking changes = unplanned problems

Solution: Column-level precision showing what actually needs validation.

reccehq.com/blog/Buildin...

July 25, 2025 at 7:02 PM

A partial breaking change can have full impact on downstream models.

Though breaking change analysis works at the column level, identifying a partial breaking change still isn't enough to get the precise impact radius.

Why breaking change analysis isn't enough 👇

July 23, 2025 at 8:02 PM

**Data validation is only useful if teams adopt it.**

Early demos of Recce, which highlights exactly what changed and how to validate it, drew a strong response. But low setup success rates told a different story.

The team is now reducing this friction. Stay tuned.

July 21, 2025 at 12:03 PM

Breaking change analysis reveals WHAT changed, but not what to DO about it.

Teams discover a model has a partial breaking change. But which downstream models need validation? Which columns?

reccehq.com/blog/Buildin...

July 18, 2025 at 6:29 AM

'Validate everything downstream' is expensive and wasteful.

Impact Radius changes that to 'validate exactly what matters' with column-level precision.

🙌 Explore the insights and discoveries that shaped this approach.

reccehq.com/blog/Buildin...

July 15, 2025 at 4:01 AM

From validating all downstream models to validating only what matters.

Impact Radius delivers precision in data validation. Instead of 'validate everything downstream,' teams can now 'validate exactly this.'

🧵 How column-level precision transforms validation workflows

reccehq.com/blog/Buildin...

July 11, 2025 at 4:18 AM

Recce is now SOC 2 Type 2 compliant!

Our security controls don't just work in theory; they work consistently in practice.

For data teams: same speed and visibility, now with battle-tested security.

Our Trust Center: trust.reccehq.com

#SOC2 #DataSecurity #DataEngineering

July 4, 2025 at 10:01 AM

"Which downstream models actually need my attention?" Every data engineer asks at 11pm before a deploy. Syntax and tests? Those aren't your real worry.

We're obsessed with helping teams validate precisely what matters.

🧵 See how we built Impact Radius

reccehq.com/blog/Buildin...

July 4, 2025 at 5:20 AM

"Automate everything" data validation has benefits, but also hidden costs 💸

1️⃣ Compute Spend

2️⃣ Alert Fatigue

3️⃣ Team Trust

Compare automation-first vs human-in-the-loop: datarecce.io/blog/recce-v...

#DataEngineering #DataValidation #dbt #DataCosts

July 1, 2025 at 11:02 PM

Is high-quality data the same as correct data?

Data can pass every test, but still be wrong!

✅ Schema checks

✅ Null constraints

🚫 No correctness validation

Recce introduces a workflow built around data correctness

Find and fix silent errors:

reccehq.com/blog/high-qu...

#dataquality #dbt

July 1, 2025 at 3:45 PM
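
A toy Python illustration of the gap, with invented rows: the PR applies a cents-to-dollars conversion to a column already in dollars, shrinking every `amount` by 100x, yet not-null and uniqueness checks still pass. Comparing values per key against the base environment surfaces the problem. The helpers are hypothetical stand-ins for dbt tests and a value diff, not Recce's API.

```python
def not_null(rows: list[dict], col: str) -> bool:
    """Stand-in for a dbt not_null test."""
    return all(r[col] is not None for r in rows)


def unique(rows: list[dict], col: str) -> bool:
    """Stand-in for a dbt unique test."""
    values = [r[col] for r in rows]
    return len(values) == len(set(values))


def value_diff(base: list[dict], current: list[dict], key: str, col: str) -> list:
    """Keys present in both environments whose `col` value changed."""
    old = {r[key]: r[col] for r in base}
    new = {r[key]: r[col] for r in current}
    return sorted(k for k in old.keys() & new.keys() if old[k] != new[k])


# Invented rows: amount was already in dollars, but the PR divides it by 100 again.
base = [{"order_id": 1, "amount": 100.0}, {"order_id": 2, "amount": 250.0}]
current = [{"order_id": 1, "amount": 1.0}, {"order_id": 2, "amount": 2.5}]

print(not_null(current, "amount"), unique(current, "order_id"))  # True True
print(value_diff(base, current, "order_id", "amount"))           # [1, 2]
```

Whether a value change is a bug or the point of the PR is still a human judgment; the diff only tells you where to look.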

Your team’s judgment should become team knowledge, not get lost in Slack.

Every PR review is a decision:

✅ What matters

❌ What doesn’t

🧠 Why a change is okay or not

Learn how 👉 datarecce.io/blog/more-th...

July 1, 2025 at 1:01 AM

Choosing between Recce and Datafold?

Datafold if:

→ you work with large-scale data

→ you want automated CI/CD coverage of everything

Recce if:

→ you focus on dev-time validation

→ you prefer lightweight, open-source flexibility

Full comparison: datarecce.io/blog/recce-v...

#DataEngineering #DataValidation #dbt #BuyersGuide

June 25, 2025 at 12:01 AM

Auto-diff every model on every PR?

You’ll get ⚠️ dozens of alerts, most of them irrelevant!

CI without context = alert spam.

Real-world data work needs: what changed, why, and what to do.

Learn how 👉 datarecce.io/blog/more-th...

#dataengineering #datadiff #analyticsengineering #datavalidation

June 24, 2025 at 3:01 AM

Hot take: Automating ALL data diffs by default is backwards 🔥

🤖 Datafold's automation-first vs 🙋 Recce's human-in-the-loop philosophy

Would you rather get 50 automated alerts or 5 targeted insights?

See the comparison: datarecce.io/blog/recce-v...

#DataEngineering #DataValidation #datadiff

June 18, 2025 at 3:01 AM

"The value didn't justify the effort."

That was the verdict of a Sr. Data Engineer at Swedish MediaTech after their Datafold PoC.

❌ Heavy setup → Noisy results → Alert fatigue → No way to start small

Comparison: datarecce.io/blog/recce-v...

#DataEngineering #DataValidation #analyticsengineering

June 11, 2025 at 8:00 AM

Don’t start with what changed. Start with what SHOULD change!

Because not every diff is a problem, and not every problem shows up as a diff.

👉 datarecce.io/blog/more-th...

#dataengineering #datadiff #analyticsengineering #datavalidation

June 10, 2025 at 3:49 AM
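
One way to read this, sketched in Python: write down the changes you intend, then compare them with the changes a diff actually observes. Unexpected diffs deserve a look, and an intended change that never appears is a problem no diff would flag on its own. The function and column names are hypothetical.

```python
def review_changes(expected: set[str], observed: set[str]) -> dict[str, set[str]]:
    """Compare intended changes with the changes a diff actually found."""
    return {
        "confirmed": expected & observed,   # planned and present
        "unexpected": observed - expected,  # present but unplanned: investigate
        "missing": expected - observed,     # planned but absent: no diff will flag this
    }


# Hypothetical columns for a PR that is supposed to change how revenue is computed.
expected = {"orders.amount", "revenue_daily.total_revenue"}
observed = {"orders.amount", "orders.status"}

for label, cols in review_changes(expected, observed).items():
    print(label, sorted(cols))
# confirmed ['orders.amount']
# unexpected ['orders.status']
# missing ['revenue_daily.total_revenue']
```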

🚨 Data diff isn’t enough.

You’re putting out harmless fires while real metric failures burn unnoticed.

You don’t need more diffing. You need better understanding.

Read 👉 datarecce.io/blog/more-th...

#dataops #datadiff #datavalidation #dataengineering

June 3, 2025 at 5:29 AM

Understanding these types helps you validate logic, assess risk, and build more transparent models in dbt

Recce uses this classification to power column-level lineage and breaking change analysis

Read more:

datarecce.io/blog/column-...

#recce #dbt #lineage #dataengineering

May 22, 2025 at 3:24 AM
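
The classification in this thread can be approximated with a deliberately naive Python sketch. It pulls identifiers out of a single SELECT expression with regular expressions and resolves them against an upstream schema. Real lineage tools use a full SQL parser; this toy handles only unqualified column names and a handful of keywords. The function name is hypothetical, and `pass-through` is an assumed label for a column selected unchanged under its own name.

```python
import re

# Identifiers NOT followed by "(" so function names such as COALESCE are skipped.
IDENT = re.compile(r"\b[A-Za-z_]\w*\b(?!\s*\()")
STRING_LITERAL = re.compile(r"'[^']*'")
BARE_COLUMN = re.compile(r"[A-Za-z_]\w*")
# Tiny, incomplete list of bare words that are not column references.
NOT_COLUMNS = {"current_timestamp", "current_date", "null", "true", "false",
               "case", "when", "then", "else", "end", "and", "or", "not"}


def classify(expr: str, alias: str, upstream: dict[str, set[str]]) -> str:
    """Label one SELECT item given its expression, its alias, and upstream schemas."""
    cleaned = STRING_LITERAL.sub("", expr)
    refs = [t for t in IDENT.findall(cleaned) if t.lower() not in NOT_COLUMNS]
    if not refs:
        return "source"  # only literals or functions, e.g. CURRENT_TIMESTAMP
    for ref in refs:
        owners = [t for t, cols in upstream.items()
                  if ref.lower() in {c.lower() for c in cols}]
        if len(owners) != 1:
            return "unknown"  # schema missing or ambiguous: cannot resolve
    if BARE_COLUMN.fullmatch(expr.strip()):
        same = expr.strip().lower() == alias.lower()
        return "pass-through" if same else "renamed"
    return "derived"  # an expression over one or more resolved columns


print(classify("user_id", "id", {"users": {"user_id", "name"}}))                       # renamed
print(classify("price * quantity", "total_amount", {"sales": {"price", "quantity"}}))  # derived
print(classify("CURRENT_TIMESTAMP", "created_at", {}))                                  # source
print(classify("a", "a", {}))                                                           # unknown
print(classify("a", "a", {"t1": {"id", "a"}, "t2": {"id", "b"}}))                       # pass-through
```

The last two calls show why the Unknown type needs schemas: the same `a` is unresolvable without them and becomes a simple pass-through once `T1` and `T2` columns are known.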

Type 5: Unknown

Ambiguous or unsupported logic; table schemas are needed to resolve it.

`SELECT a, b FROM T1 JOIN T2 ON T1.id = T2.id`

🤷 Refer to the table schemas to determine whether `a` and `b` come from `T1`, `T2`, or both

#sqllineage #dbtdebugging #schema

May 22, 2025 at 3:24 AM

Type 4: Source

Comes from a literal or function, not an upstream model.

`SELECT CURRENT_TIMESTAMP AS created_at`

📍 `created_at` doesn’t rely on any input column

#etl #sqlfunctions #datapipelines

May 22, 2025 at 3:24 AM

Type 3: Derived

Created using math, logic, or expressions.

`SELECT price * quantity AS total_amount FROM {{ ref("sales") }}`

🧮 `total_amount` is derived from `price` and `quantity`

#businesslogic #dbt

May 22, 2025 at 3:24 AM

Type 2: Renamed

Same data, new name.

`SELECT user_id AS id FROM {{ ref("users") }}`

✅ `id` is just an alias of `user_id`

#analyticsengineering #sql #datamodeling

May 22, 2025 at 3:24 AM
