David Debot

@daviddebot.bsky.social

180 followers

240 following

16 posts

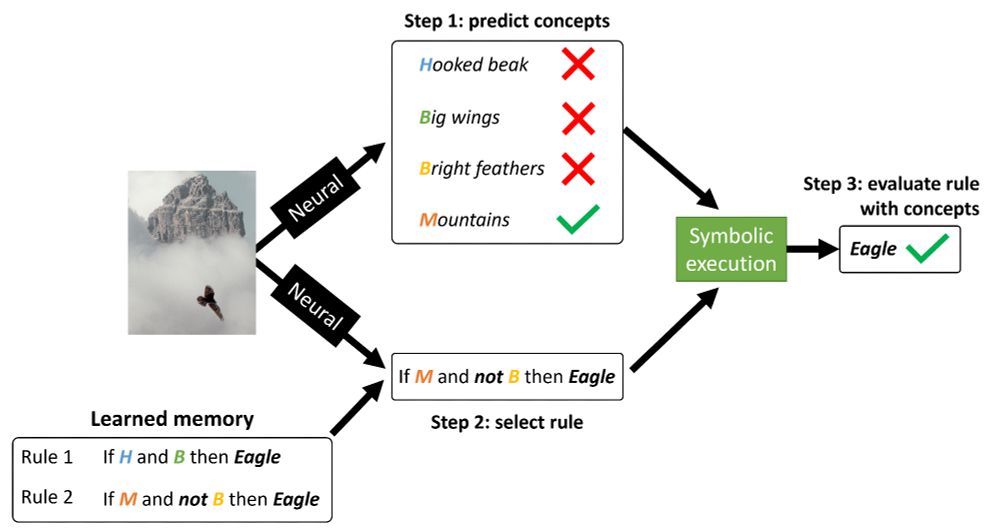

PhD student @dtai-kuleuven.bsky.social in neurosymbolic AI and concept-based learning

https://daviddebot.github.io/

David Debot

@daviddebot.bsky.social

· Feb 24

David Debot

@daviddebot.bsky.social

· Dec 4
