Contributor: Apache Iceberg | Apache Hudi

Distributed Computing | Technical Author (O’Reilly, Packt)

Prev📍: DevRel @Onehouse.ai, Dremio, Engineering @Qlik, @OTIS Elevators

Book 📕: https://a.co/d/fUDs7G6

- Introduction of LSM trees (log-structured merge-trees)

- Expression and secondary indexes

- Non-blocking concurrency control

- Partial merges

Blog: hudi.apache.org/blog/2024/12...
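
For readers new to the term, here is a minimal, generic LSM-tree sketch in Python. It is a textbook toy, not Hudi's implementation, and every name in it (`ToyLSMTree`, `memtable_limit`, `compact`) is hypothetical. Writes land in an in-memory memtable. When the memtable fills, it is flushed as an immutable sorted run. Reads check the memtable first and then the runs from newest to oldest, so the latest write wins. Compaction merges all runs into one.

```python
import bisect
from typing import Dict, List, Optional, Tuple


class ToyLSMTree:
    """Hypothetical toy LSM tree: a mutable memtable plus immutable sorted runs."""

    def __init__(self, memtable_limit: int = 4) -> None:
        self.memtable_limit = memtable_limit
        self.memtable: Dict[str, str] = {}
        # Immutable sorted runs, newest first.
        self.runs: List[List[Tuple[str, str]]] = []

    def put(self, key: str, value: str) -> None:
        # All writes go to the in-memory memtable first.
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        # Persist the memtable as a new sorted run at the front (newest).
        if self.memtable:
            self.runs.insert(0, sorted(self.memtable.items()))
            self.memtable = {}

    def get(self, key: str) -> Optional[str]:
        if key in self.memtable:
            return self.memtable[key]
        # Search runs newest to oldest so the most recent value wins.
        for run in self.runs:
            keys = [k for k, _ in run]
            i = bisect.bisect_left(keys, key)
            if i < len(run) and run[i][0] == key:
                return run[i][1]
        return None

    def compact(self) -> None:
        # Merge oldest to newest so newer values overwrite older ones.
        merged: Dict[str, str] = {}
        for run in reversed(self.runs):
            merged.update(run)
        self.runs = [sorted(merged.items())] if merged else []


tree = ToyLSMTree(memtable_limit=2)
tree.put("a", "1")
tree.put("b", "1")  # memtable full: flushed as run 1
tree.put("a", "2")
tree.put("c", "1")  # flushed as run 2, which shadows the old "a"
print(tree.get("a"), len(tree.runs))
tree.compact()
print(tree.runs)
```

Production LSM trees add write-ahead logging, tombstones for deletes, tiered or leveled compaction, and Bloom filters to skip runs. The toy omits all of these.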

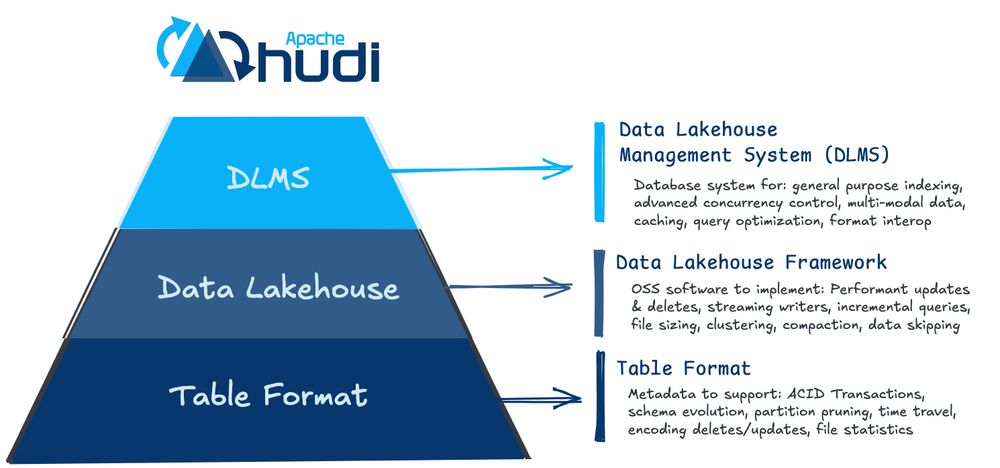

✅ If we compare Hudi's architecture to the seminal "Architecture of a Database System" paper, we can see how Hudi serves as the foundational half of a database optimized for data lakes.

With the new 1.0 release of Apache Hudi, we are now closer to the vision of building the first transactional database for the data lake.

Let’s explore:

Hudi brings to cloud data lakes the core transactional layer (via its storage engine) that is typically found in any database management system.

The question we should ask: can I seamlessly switch between specific components, or the overall platform (whether it's a vendor-managed or self-managed open source solution), as new requirements emerge?

This is powered by XTable.

xtable.apache.org

The announcement of Fabric OneLake-Snowflake interoperability is a critical example that solidifies point (1).

To summarize, I see two major applications for XTable:

✅ On the compute side, with vendors using XTable as the interoperability layer

In reality, it is tough to have robust support for every single format. By robust, I mean full write support, schema evolution, and compaction.

So, based on your use case and technical fit within your data architecture, you should be free to use any format without being married to just one.

You should be able to write data in any format of your choice, whether it's Iceberg, Hudi, or Delta.

Then you can bring any compute engine that works well with a particular format and run analytics on top.