• ~5 minutes completion time (vs 12+ for full version)

• 91% congruence with comprehensive assessment

• Strong performance for group-level analysis

Trade-off: slightly lower individual reliability (α = 0.61 vs 0.74)
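Assuming α here is Cronbach's alpha, the usual internal-consistency coefficient (the post does not name it explicitly), the trade-off can be made concrete with a short sketch. The function name and toy score matrix are hypothetical. Alpha rises with the number of items k and with how strongly the items covary. That is why shortening an instrument from 28 to 10 items tends to lower it, even when the short form still works well for group-level comparisons.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    responses[r][i] is respondent r's score on item i.
    Population variances are used throughout; the sample-variance
    version gives the same alpha because the n/(n-1) factors cancel.
    """
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total score
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    # alpha = k/(k-1) * (1 - sum of item variances / total-score variance)
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical toy data: 4 respondents answering 2 items
    scores = [[1, 2], [2, 2], [3, 4], [4, 3]]
    print(round(cronbach_alpha(scores), 3))
```

On this toy matrix, alpha works out to 40/51, about 0.784. Perfectly redundant items give exactly 1.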

• What is AI?

• What can AI do?

• How does AI work?

• How do people perceive AI?

• How should AI be used?

Special emphasis on technical understanding—the foundation of true AI literacy.

Our solution distills a robust 28-item instrument into 10 key questions, validated with 1,465 university students across the US, Germany, and the UK.

• Keeping up with AI's rapid evolution

• Teaching abstract concepts to diverse audiences

• Shortage of trained educators

• Misalignment between teaching goals & assessment methods

They tackle societal issues—bias, fairness, privacy, social justice. Higher-ed leads with comprehensive curricula, but K-12 efforts are still catching up.

The results? A field full of promise but grappling with fundamental challenges in what to teach, how to teach it, and whether students are actually learning.

🚀 AI Advocates (48%): Tech-savvy, confident, excited

🤔 Cautious Critics (21%): Skeptical, low confidence, minimal use

⚖️ Pragmatic Observers (31%): Neutral attitudes, moderate interest

✅ Using AI tools (like ChatGPT) boosts interest

✅ Positive attitudes predict higher engagement

✅ Interest acts as the bridge connecting attitudes, literacy, and confidence

Our validated path model:

www.sciencedirect.com/science/art...
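The claim that interest acts as a bridge is a mediation claim. As a rough illustration only, and not the authors' validated path model (which is not reproduced here), the sketch below decomposes a single-mediator chain X → M → Y with ordinary least squares. Here a is the effect of X on M, b is the effect of M on Y holding X fixed, and the indirect effect is a·b. The variable roles (attitudes as X, interest as M, a confidence score as Y), the function names, and the simulated coefficients are all hypothetical.

```python
import random


def _cov(u: list[float], v: list[float]) -> float:
    """Sample covariance of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def simple_mediation(x: list[float], m: list[float], y: list[float]) -> dict[str, float]:
    """Product-of-coefficients decomposition for one mediator (X -> M -> Y)."""
    vx, vm = _cov(x, x), _cov(m, m)
    cxm, cxy, cmy = _cov(x, m), _cov(x, y), _cov(m, y)
    a = cxm / vx                           # path X -> M (simple regression slope)
    det = vx * vm - cxm ** 2               # determinant of the predictor covariance matrix
    b = (cmy * vx - cxy * cxm) / det       # path M -> Y, controlling for X
    direct = (cxy * vm - cmy * cxm) / det  # path X -> Y, controlling for M
    total = cxy / vx                       # X -> Y without the mediator
    return {"a": a, "b": b, "direct": direct, "indirect": a * b, "total": total}


def simulate_mediation(n: int, seed: int = 0):
    """Hypothetical data: attitudes (x) -> interest (m) -> confidence (y)."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
    y = [0.8 * mi + 0.2 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
    return x, m, y


if __name__ == "__main__":
    res = simple_mediation(*simulate_mediation(5000, seed=2))
    # In OLS, total = direct + indirect holds exactly (up to rounding)
    print({k: round(v, 3) for k, v in res.items()})
```

With OLS, the total effect splits exactly into direct plus indirect. A real analysis would also need an interval estimate for a·b, commonly obtained by bootstrapping.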

SCA uncovered a growing rift:

⚾ Pro-forgiveness coalition

⚾ Purist coalition

The schism reinforced decision silos over time

SCA revealed two warring coalitions:

🛠️ Technical design focus

🌍 Cultural norms focus

Leadership missed these divisions, and a strategic disaster followed

Result? Clear maps of internal divisions, even with sparse data 📊

• Republicans: Pro-AI in defense, law enforcement

• Democrats: Focused on risks, equity concerns

This mirrored broader ideological divides over regulation and government intervention.

Early AI framing was broad: transparency, privacy, ethics. But when linked to racial equity or redistribution, partisan divides flared.

• Dems: Equity-focused reforms

• GOP: Industry self-regulation

We identify 4 key triggers of polarization:

1️⃣ Competing problem definitions

2️⃣ Divergent policy tools

3️⃣ Stakeholder dynamics

4️⃣ Strategic "subsystem shopping"

We need to:

• Integrate ethics into core STEM curricula

• Leverage peer-based learning (it works!)

• Connect coursework to real challenges

• Rethink "STEM readiness"

Technical competence without social consciousness isn't enough.

@purduepolsci.bsky.social @GRAILcenter.bsky

• Too technical (little ethics integration)

• Disconnected from societal dimensions

• Career-focused rather than impact-focused

Meanwhile, STEM professionals shape our future—from AI to climate tech ⚡

• Professional Connectedness scores dropped significantly (5.65 → 5.43, p < 0.001)

• Students increasingly prioritized salary over societal impact

• Self-efficacy to drive social change declined

Published in the International Journal of STEM Education

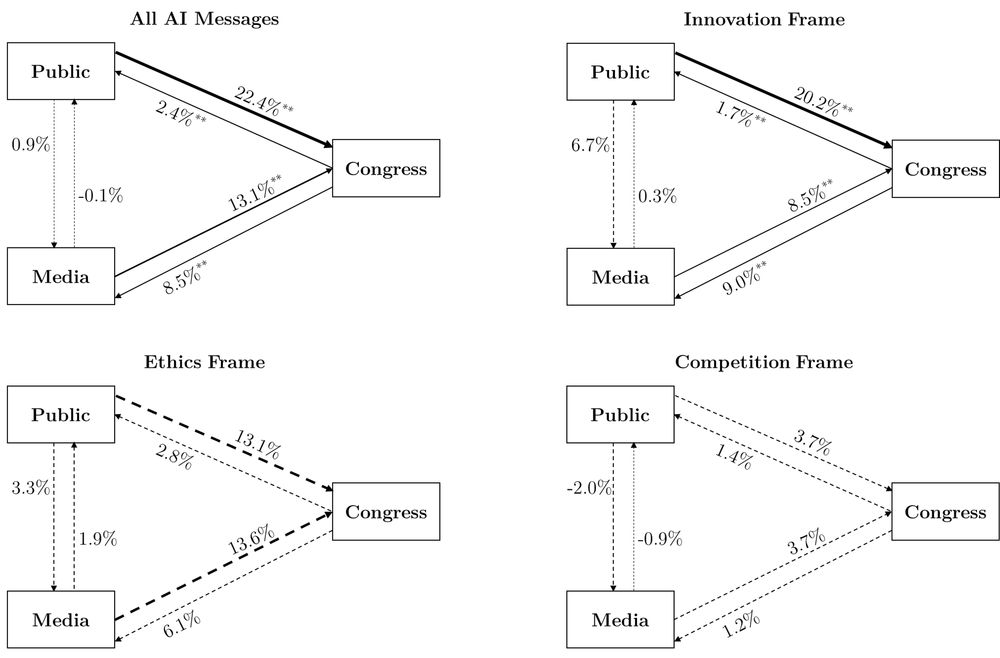

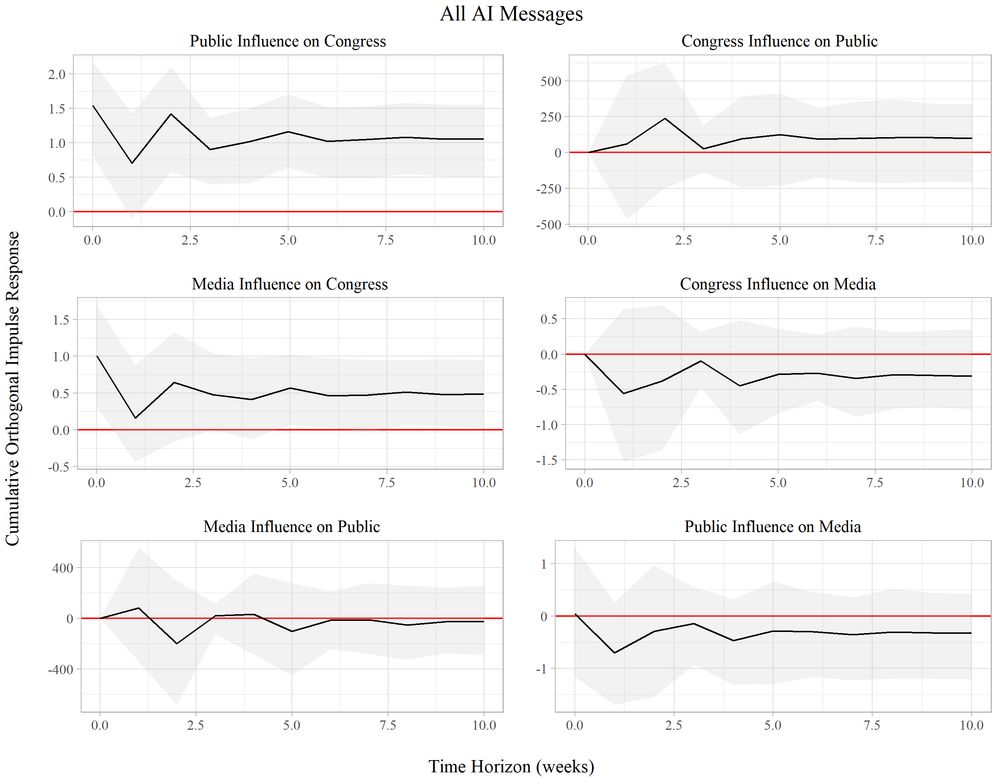

The time-series analysis (ARIMA + VAR) shows that a one-standard-deviation increase in public tweets about AI is associated with a 22.4% increase in Congressional messaging on AI that same week.

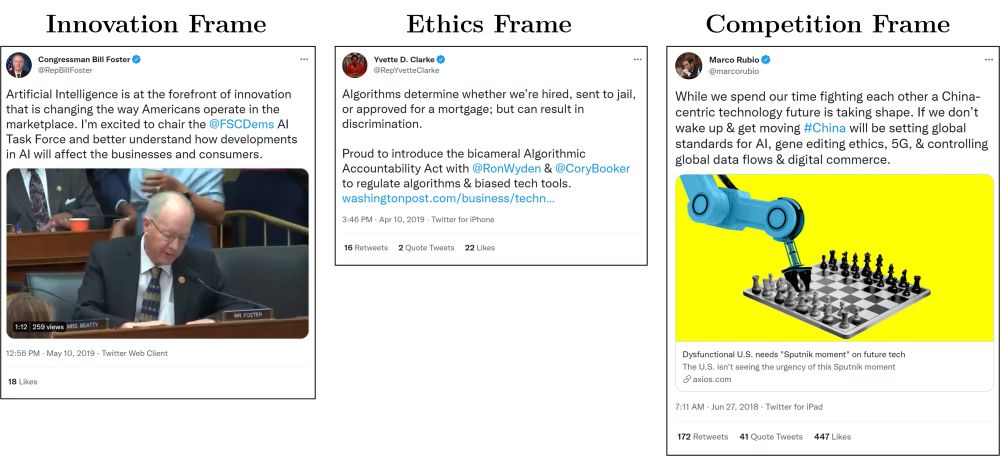

📈 Innovation: AI as a driver of economic growth & productivity.

🙏 Ethics: AI's impact on fairness, rights, bias, and safety.

⚔️ Competition: AI in the context of the US-China race.

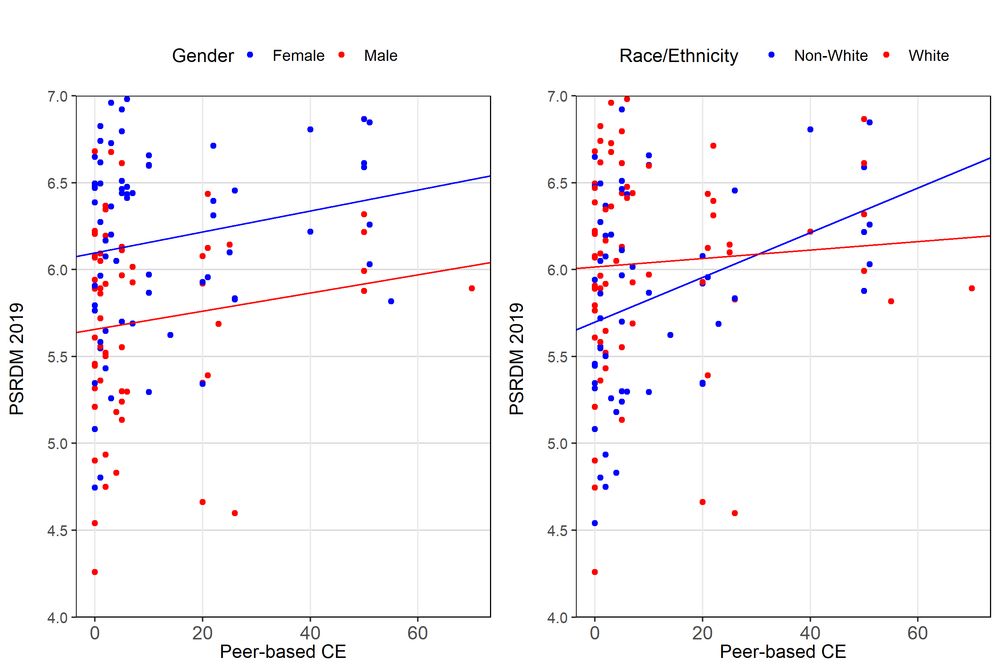

Another note: women consistently have higher SR scores than men.