DurstewitzLab

@durstewitzlab.bsky.social

Scientific AI/machine learning, dynamical systems (reconstruction), generative surrogate models of brains & behavior, applications in neuroscience & mental health

DurstewitzLab

@durstewitzlab.bsky.social

· Aug 15

Reconstructing computational system dynamics from neural data with recurrent neural networks - Nature Reviews Neuroscience

The prospects for applying dynamical systems theory in neuroscience are changing dramatically. In this Perspective, Durstewitz et al. discuss dynamical system reconstruction using recurrent neural net...

www.nature.com

Reposted by DurstewitzLab

Mike Dettinger

@mdettinger.bsky.social

· Mar 31

Public Statement on Supporting Science for the Benefit of All Citizens

TO THE AMERICAN PEOPLE We all rely on science. Science gave us the smartphones in our pockets, the navigation systems in our cars, and life-saving medical care. We count on engineers when we drive acr...

docs.google.com

Reposted by DurstewitzLab

DurstewitzLab

@durstewitzlab.bsky.social

· Jun 28

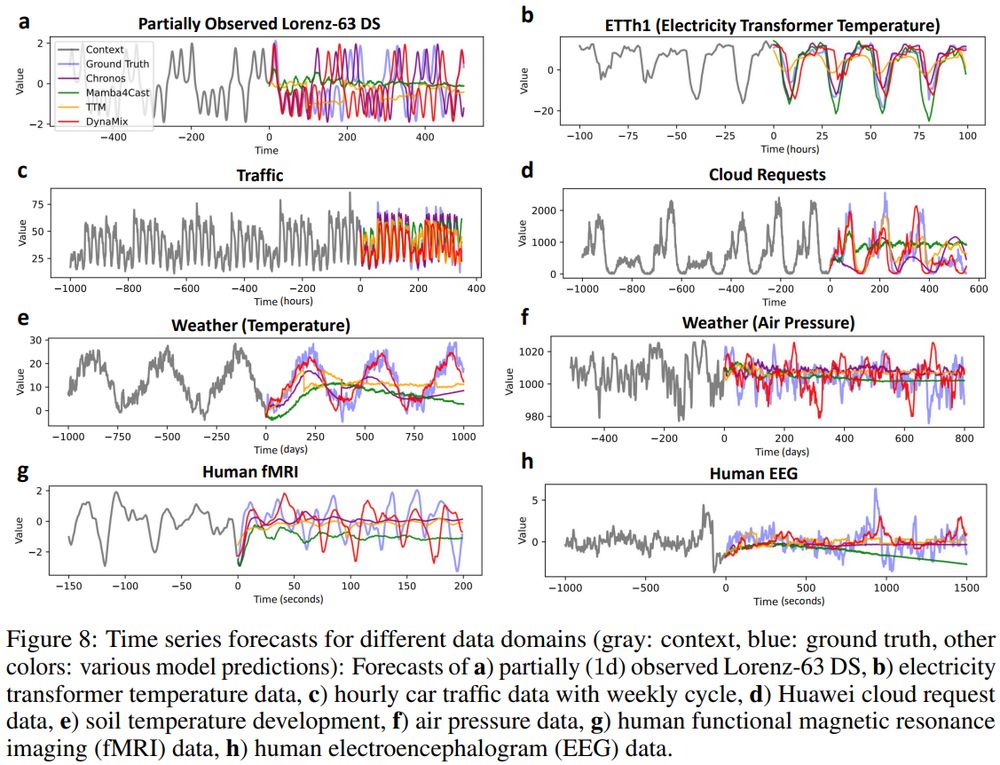

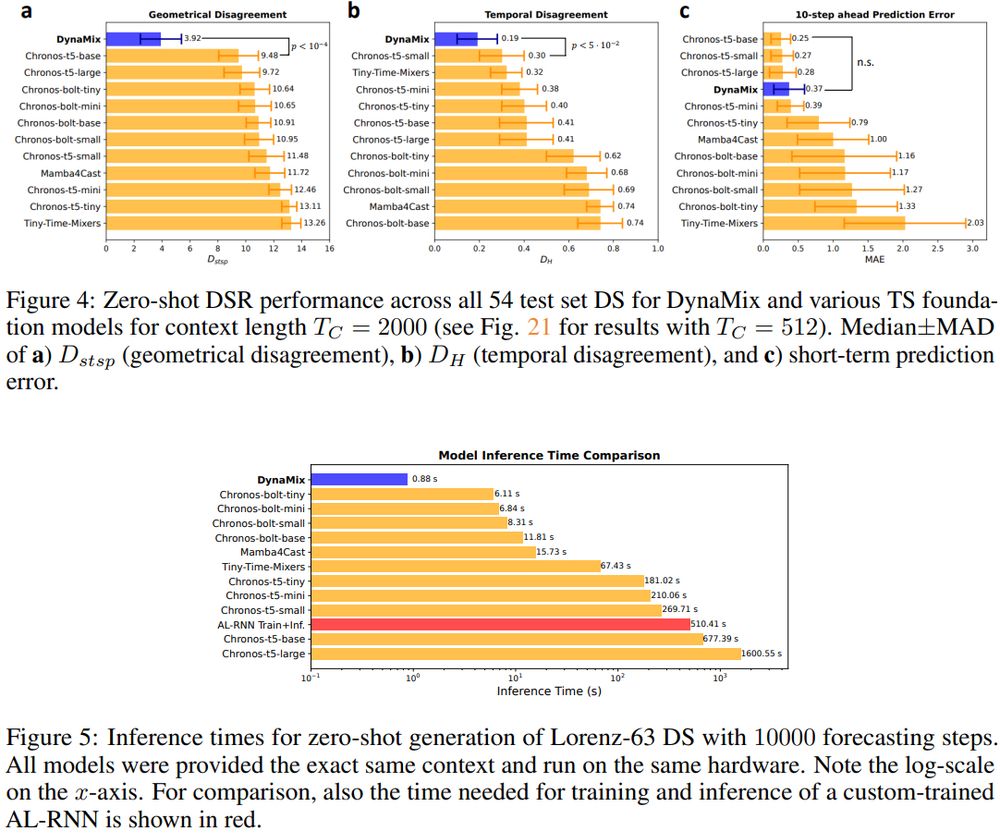

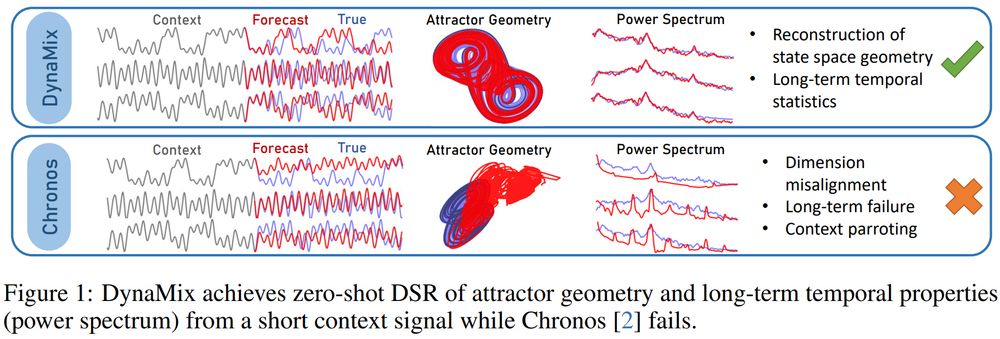

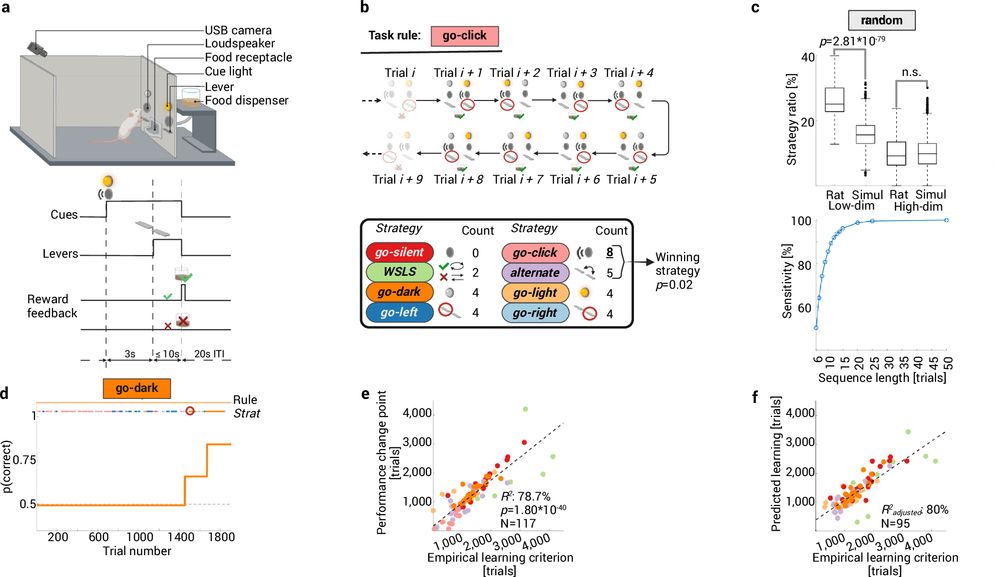

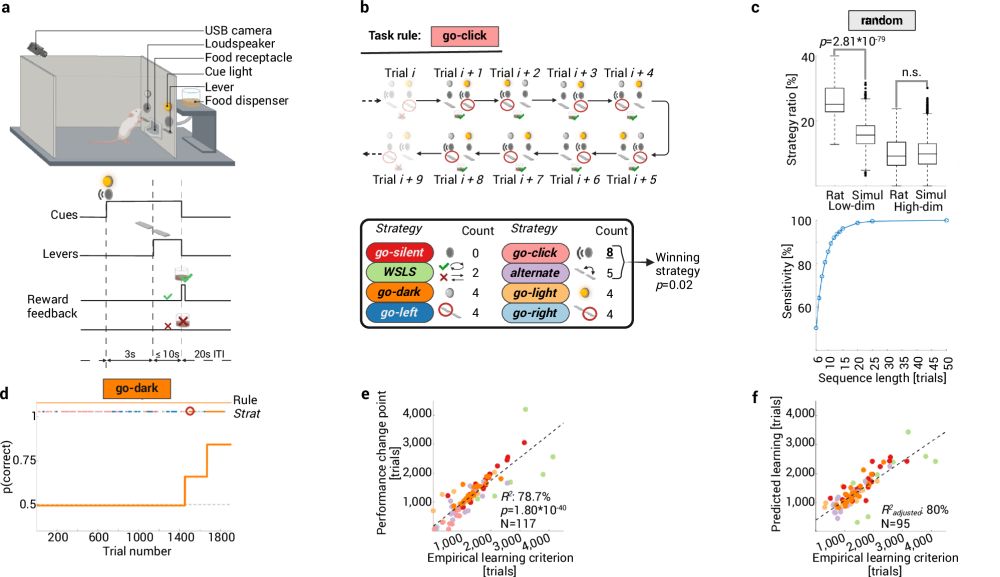

Abstract rule learning promotes cognitive flexibility in complex environments across species

Nature Communications - Whether neurocomputational mechanisms that speed up human learning in changing environments also exist in other species remains unclear. Here, the authors show that both...

rdcu.be

DurstewitzLab

@durstewitzlab.bsky.social

· Jun 26

Abstract rule learning promotes cognitive flexibility in complex environments across species

Nature Communications - Whether neurocomputational mechanisms that speed up human learning in changing environments also exist in other species remains unclear. Here, the authors show that both...

rdcu.be

Reposted by DurstewitzLab

Eleonora Russo

@russoel.bsky.social

· Jun 21
