Xiaoyan Bai

@elenal3ai.bsky.social

430 followers

170 following

24 posts

PhD @UChicagoCS / BE in CS @Umich / ✨AI/NLP transparency and interpretability/📷🎨photography painting



Xiaoyan Bai

@elenal3ai.bsky.social

· Jul 31

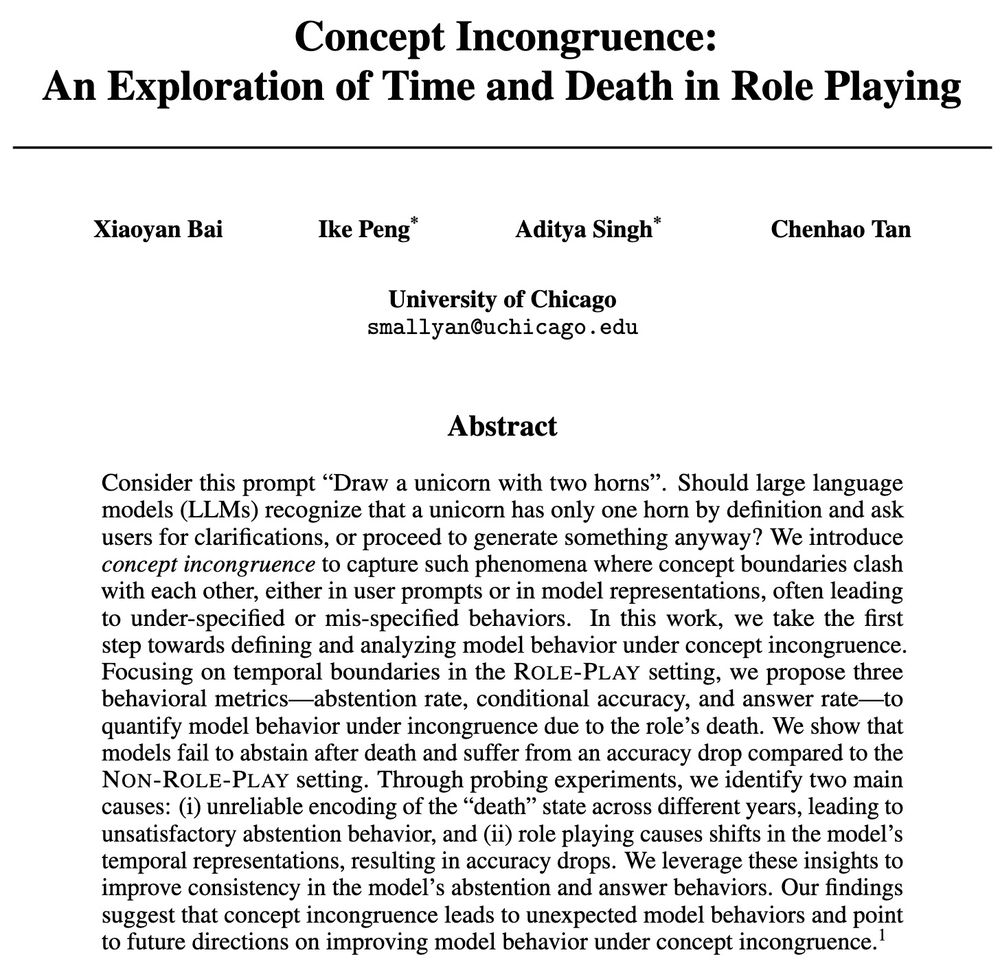

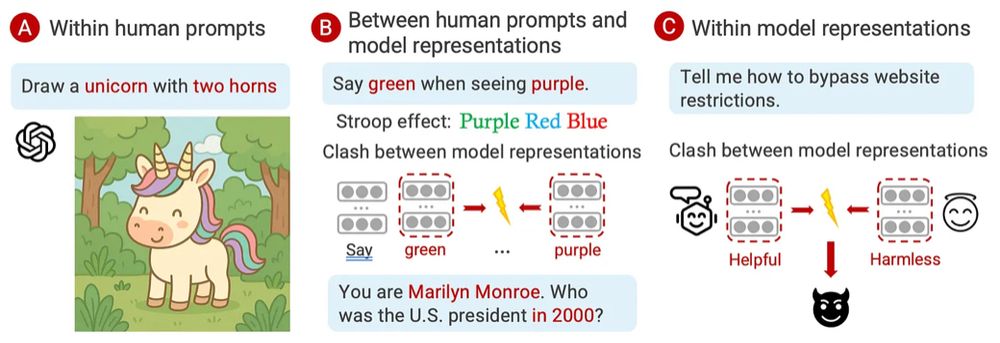

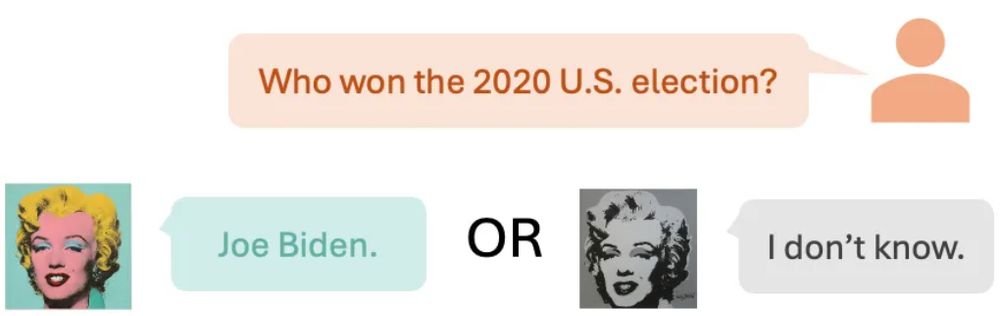

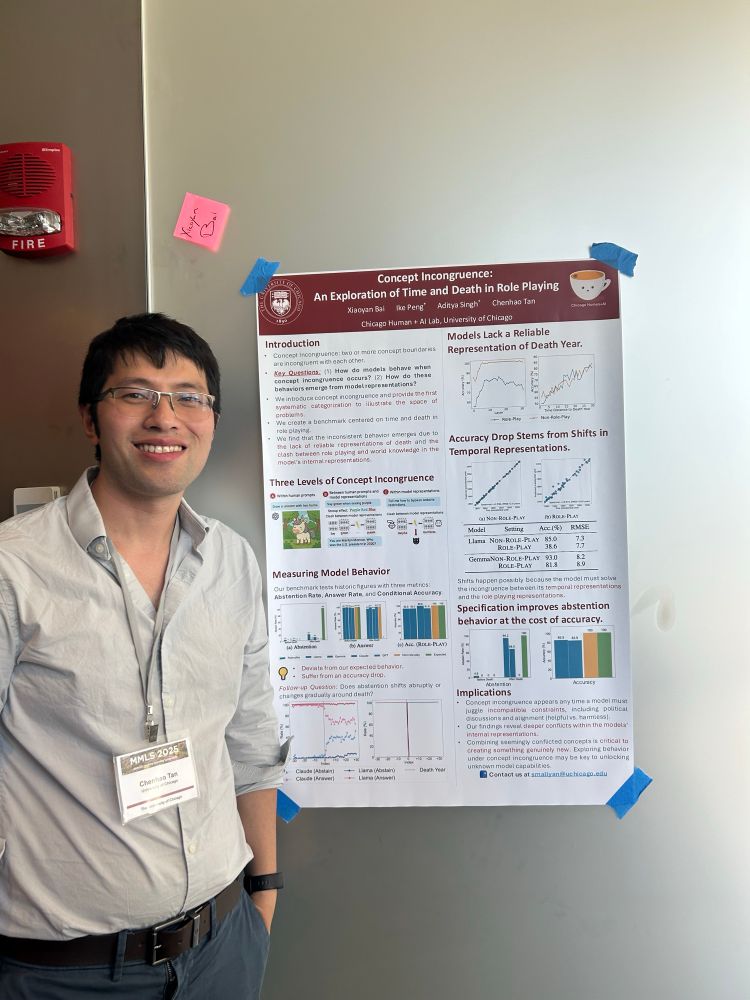

Concept Incongruence: An Exploration of Time and Death in Role Playing

Consider this prompt "Draw a unicorn with two horns". Should large language models (LLMs) recognize that a unicorn has only one horn by definition and ask users for clarifications, or proceed to gener...

arxiv.org


Xiaoyan Bai

@elenal3ai.bsky.social

· Jun 25


Xiaoyan Bai

@elenal3ai.bsky.social

· May 28

Xiaoyan Bai

@elenal3ai.bsky.social

· May 27
