- Per-Token Activation Scaling: Each token gets its own scaling factor

- Per-Channel Weight Scaling: Each weight column (output channel) gets its own scaling factor

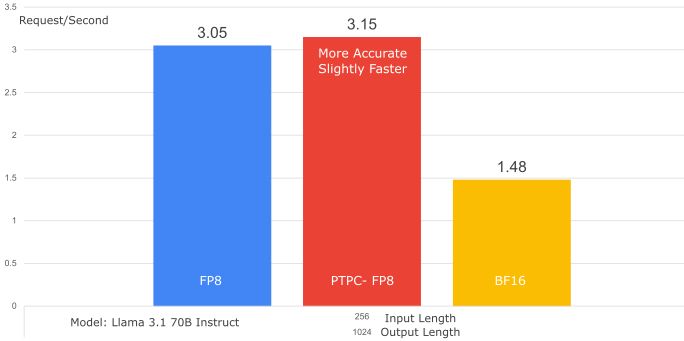

Combining the two delivers FP8 speed with accuracy closer to BF16, making it the best FP8 option for ROCm! [2/2]
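The two scaling granularities above can be sketched in pure Python. This is a minimal simulation under stated assumptions, not vLLM's or ROCm's kernel. It assumes the OCP FP8 E4M3 format (largest finite value 448), emulates the FP8 cast in software, and stores the weight as `[in_features][out_features]` so that each column is an output channel. The helper names (`round_to_e4m3`, `quantize_per_token`, `quantize_per_channel`, `scaled_matmul`) are hypothetical. The activation scale depends only on the row and the weight scale only on the column, so both factor out of each dot product and are applied once per output element.

```python
import math

# Assumption: OCP FP8 E4M3 ("e4m3fn"), whose largest finite value is 448.
FP8_MAX = 448.0


def round_to_e4m3(x: float) -> float:
    """Simulate casting x to E4M3: saturate, then round to 3 mantissa bits."""
    x = max(-FP8_MAX, min(FP8_MAX, x))
    if x == 0.0:
        return 0.0
    # Exponent of the leading bit, floored at the minimum normal exponent (-6);
    # below that, values are subnormal and the spacing stays at 2**-9.
    e = max(math.floor(math.log2(abs(x))), -6)
    step = 2.0 ** (e - 3)  # spacing between neighbours with 3 mantissa bits
    return round(x / step) * step  # Python's round() is round-half-to-even


def quantize_per_token(acts):
    """acts is [num_tokens][hidden]; each token (row) gets its own scale."""
    q, scales = [], []
    for row in acts:
        amax = max(abs(v) for v in row)
        s = amax / FP8_MAX if amax > 0 else 1.0
        scales.append(s)
        q.append([round_to_e4m3(v / s) for v in row])
    return q, scales


def quantize_per_channel(weight):
    """weight is [in][out]; each column (output channel) gets its own scale."""
    n_in, n_out = len(weight), len(weight[0])
    scales = []
    for j in range(n_out):
        amax = max(abs(weight[i][j]) for i in range(n_in))
        scales.append(amax / FP8_MAX if amax > 0 else 1.0)
    q = [[round_to_e4m3(weight[i][j] / scales[j]) for j in range(n_out)]
         for i in range(n_in)]
    return q, scales


def scaled_matmul(q_act, act_scales, q_w, w_scales):
    """y[t][j] = act_scales[t] * w_scales[j] * sum_k q_act[t][k] * q_w[k][j].

    The sum uses Python floats (double precision), standing in for the
    wider accumulator a real FP8 kernel would use.
    """
    out = []
    for t, row in enumerate(q_act):
        out.append([
            act_scales[t] * w_scales[j]
            * sum(row[k] * q_w[k][j] for k in range(len(row)))
            for j in range(len(w_scales))
        ])
    return out


# Usage: two tokens with very different magnitudes and a 3x2 weight matrix.
acts = [[0.5, -1.25, 3.0], [100.0, 2.0, -50.0]]
weight = [[0.1, -2.0], [0.3, 0.5], [-0.2, 1.0]]
q_a, s_a = quantize_per_token(acts)
q_w, s_w = quantize_per_channel(weight)
approx = scaled_matmul(q_a, s_a, q_w, s_w)
exact = [[sum(a[k] * weight[k][j] for k in range(3)) for j in range(2)]
         for a in acts]
for got, ref in zip(approx, exact):
    print(got, ref)
```

Because the second token is about 33x larger than the first, a single per-tensor activation scale would push the first token's values toward the bottom of the FP8 range; per-token scales give each row the full range. FP8 variants differ, so `FP8_MAX` is hardware-dependent: for example, the E4M3 FNUZ variant used on some AMD GPUs has a largest finite value of 240.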

- Idefics3 (VLM)

- H2OVL-Mississippi (VLM for OCR/docs!)

- Qwen2-Audio (Audio LLM)

- FalconMamba (Mamba state-space LLM)

- Florence-2 (VLM)

Plus new encoder-only embedding models: BERT, RoBERTa, and XLM-RoBERTa.