ExplainableML

@eml-munich.bsky.social

470 followers

24 following

120 posts

Institute for Explainable Machine Learning at @www.helmholtz-munich.de and the Interpretable and Reliable Machine Learning group at the Technical University of Munich; part of @munichcenterml.bsky.social

Posts

ExplainableML

@eml-munich.bsky.social

· Aug 4

Disentanglement of Correlated Factors via Hausdorff Factorized Support

A grand goal in deep learning research is to learn representations capable of generalizing across distribution shifts. Disentanglement is one promising direction aimed at aligning a model's representa...

arxiv.org

ExplainableML

@eml-munich.bsky.social

· Aug 4

Waffling around for Performance: Visual Classification with Random Words and Broad Concepts

The visual classification performance of vision-language models such as CLIP has been shown to benefit from additional semantic knowledge from large language models (LLMs) such as GPT-3. In particular...

arxiv.org

ExplainableML

@eml-munich.bsky.social

· Aug 4

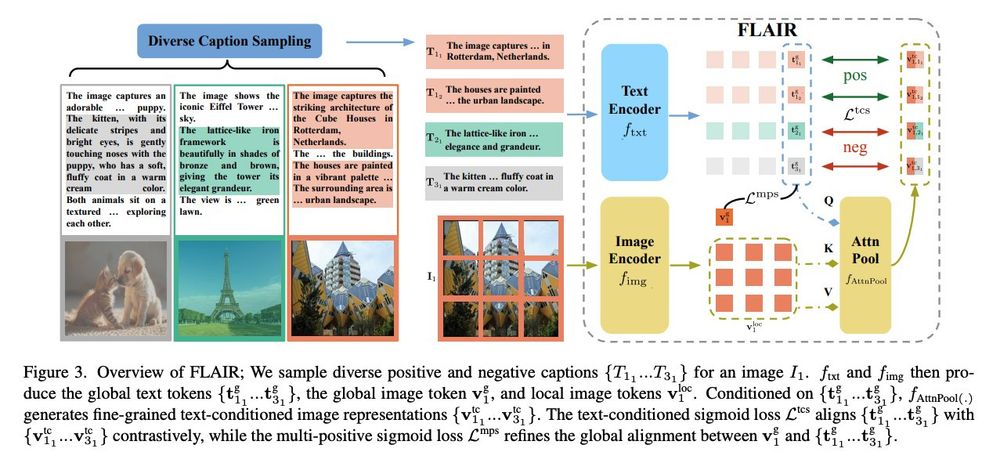

Vision-by-Language for Training-Free Compositional Image Retrieval

Given an image and a target modification (e.g. an image of the Eiffel Tower and the text "without people and at night-time"), Compositional Image Retrieval (CIR) aims to retrieve the relevant target im...

arxiv.org

ExplainableML

@eml-munich.bsky.social

· Aug 4

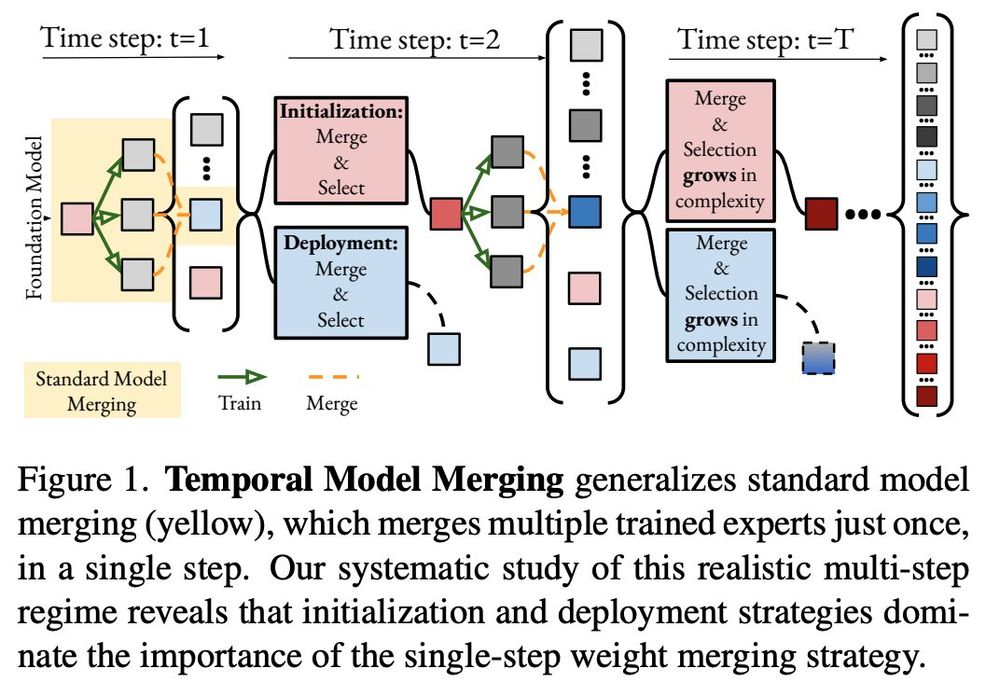

Fantastic Gains and Where to Find Them: On the Existence and Prospect of General Knowledge Transfer between Any Pretrained Model

Training deep networks requires various design decisions regarding for instance their architecture, data augmentation, or optimization. In this work, we find these training variations to result in net...

arxiv.org

ExplainableML

@eml-munich.bsky.social

· Aug 4

A Practitioner's Guide to Continual Multimodal Pretraining

Multimodal foundation models serve numerous applications at the intersection of vision and language. Still, despite being pretrained on extensive data, they become outdated over time. To keep models u...

arxiv.org

Reposted by ExplainableML

ExplainableML

@eml-munich.bsky.social

· Jun 18

EgoCVR: An Egocentric Benchmark for Fine-Grained Composed Video Retrieval

In Composed Video Retrieval, a video and a textual description which modifies the video content are provided as inputs to the model. The aim is to retrieve the relevant video with the modified content...

arxiv.org

ExplainableML

@eml-munich.bsky.social

· Jun 18

ReNO: Enhancing One-step Text-to-Image Models through Reward-based Noise Optimization

Text-to-Image (T2I) models have made significant advancements in recent years, but they still struggle to accurately capture intricate details specified in complex compositional prompts. While fine-tu...

arxiv.org
