Fabian Schneider

@fabianschneider.bsky.social

95 followers

120 following

18 posts

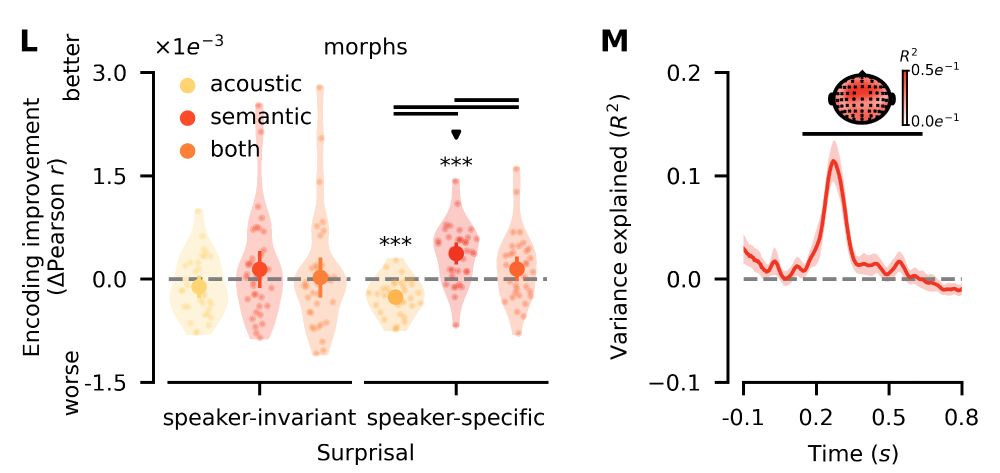

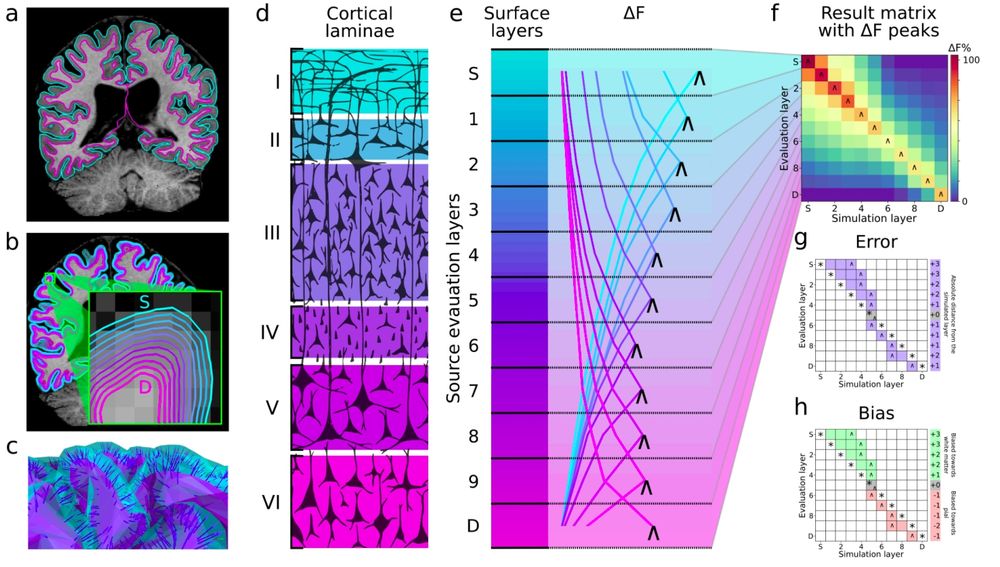

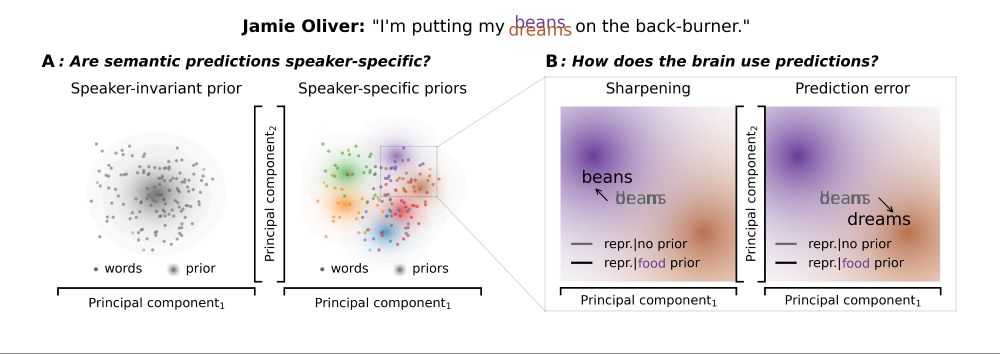

Doctoral researcher. Interested in memory, audition, semantics, predictive coding, spiking networks.

Reposted by Fabian Schneider
![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns. Common analyses of neural representations: encoding models (relating activity to task features), shown as an arrow from a trace reading [on_____on____] to a neuron and its spike train; comparing models via neural predictivity, shown as two neural networks compared by their R² to mouse brain activity; and RSA, assessing brain-brain or model-brain correspondence using representational dissimilarity matrices.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)