I added some longer generation examples by enforcing `min_new_tokens`. The model can definitely lose the thread a bit more on longer outputs, but the results are still pretty decent I think :)

Check it out:

pages.cs.huji.ac.il/adiyoss-lab/...

And feel free to generate anything with a single line of code:

github.com/slp-rl/slamkit

I will try to find time to generate longer samples, but I also encourage everyone to play around themselves. We tried to make it relatively easy 🙏

We are accepting PRs to add more tokenisers, better optimisers, efficient attention implementations and anything that seems relevant :)

Feel free to reach out 💪

Really pleased you liked our work :) I think that with the help of the open-source community we can push results even further.

About generation length - the model context is 1024 tokens ≈ 40 seconds of audio, but we used a setup like TWIST for evaluation. Definitely worth testing longer generations!
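
For anyone picking a value for `min_new_tokens`, here is a rough sketch of the arithmetic behind the figures above. It assumes only the two numbers from the post (a 1024-token context covering roughly 40 seconds of audio), so the implied rate of about 25.6 tokens per second is an approximation, and the helper names are made up for illustration, not part of slamkit. Note that prompt tokens also take up context, so the real budget for new tokens is smaller than the cap used here.

```python
import math

# Figures quoted in the post; the duration is approximate.
CONTEXT_TOKENS = 1024
CONTEXT_SECONDS = 40.0


def seconds_to_tokens(seconds: float) -> int:
    """Approximate token count for `seconds` of audio, capped at the context size.

    Hypothetical helper: the rate is inferred from 1024 tokens ~= 40 s.
    """
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    tokens = math.ceil(seconds * CONTEXT_TOKENS / CONTEXT_SECONDS)
    return min(CONTEXT_TOKENS, tokens)


def tokens_to_seconds(tokens: int) -> float:
    """Approximate audio duration covered by `tokens` tokens."""
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return tokens * CONTEXT_SECONDS / CONTEXT_TOKENS


if __name__ == "__main__":
    # Token budget to aim for when asking for ~10 s or ~60 s of audio.
    for target in (10, 60):
        print(target, "s ->", seconds_to_tokens(target), "tokens")
```

The value returned by `seconds_to_tokens` is what one might pass as `min_new_tokens`; anything beyond about 40 seconds is clipped to the context size.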

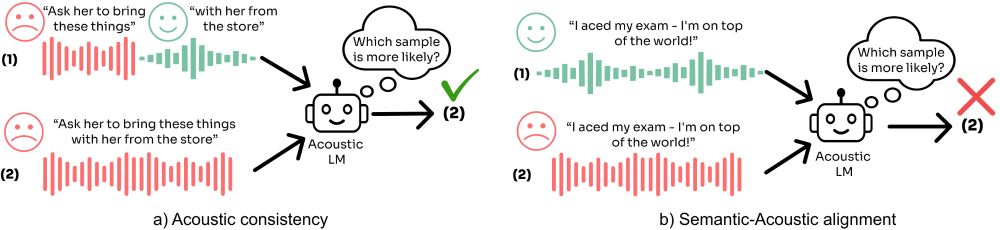

📜Paper - arxiv.org/abs/2409.07437

💻Code - github.com/slp-rl/salmon

🤗 Data - huggingface.co/datasets/slp...
