#CovidIsAirborne 😷

It's completely free and we'll try out ideas for you!

The dream of “autonomous AI scientists” is tempting:

machines that generate hypotheses, run experiments, and write papers. But science isn’t just automation.

cichicago.substack.com/p/the-mirage...

🧵

In this case, GPT-5 Pro was able to do novel math, but only when guided by a math professor (though the paper also noted the speed of advance since GPT-4).

The reflection is worth reading.

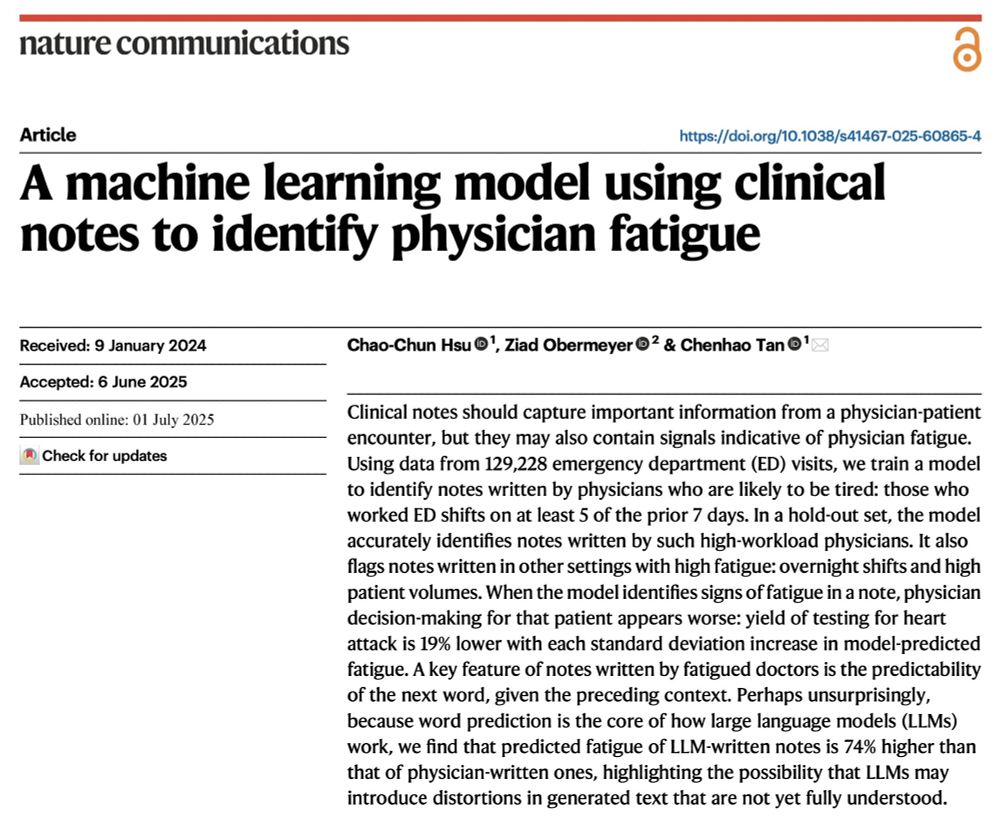

1. Fresh from a week of not working

2. Tired from working too many shifts

@oziadias.bsky.social has been both and thinks that they're different! But can you tell from their notes? Yes we can! Paper @natcomms.nature.com www.nature.com/articles/s41...

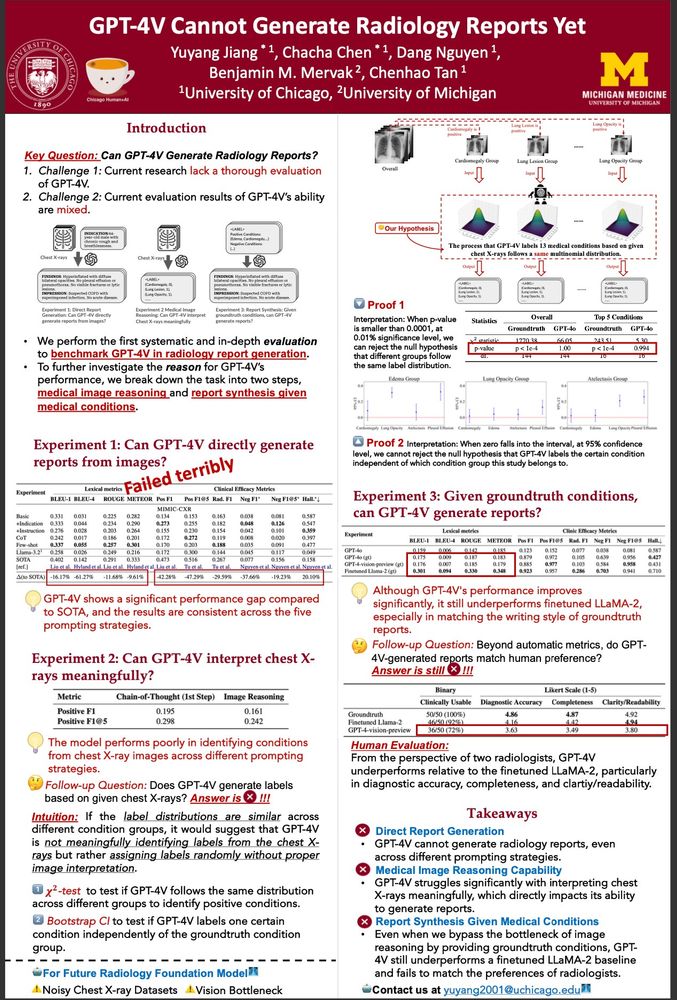

@chachachen.bsky.social GPT ❌ x-rays (Friday 9-10:30)

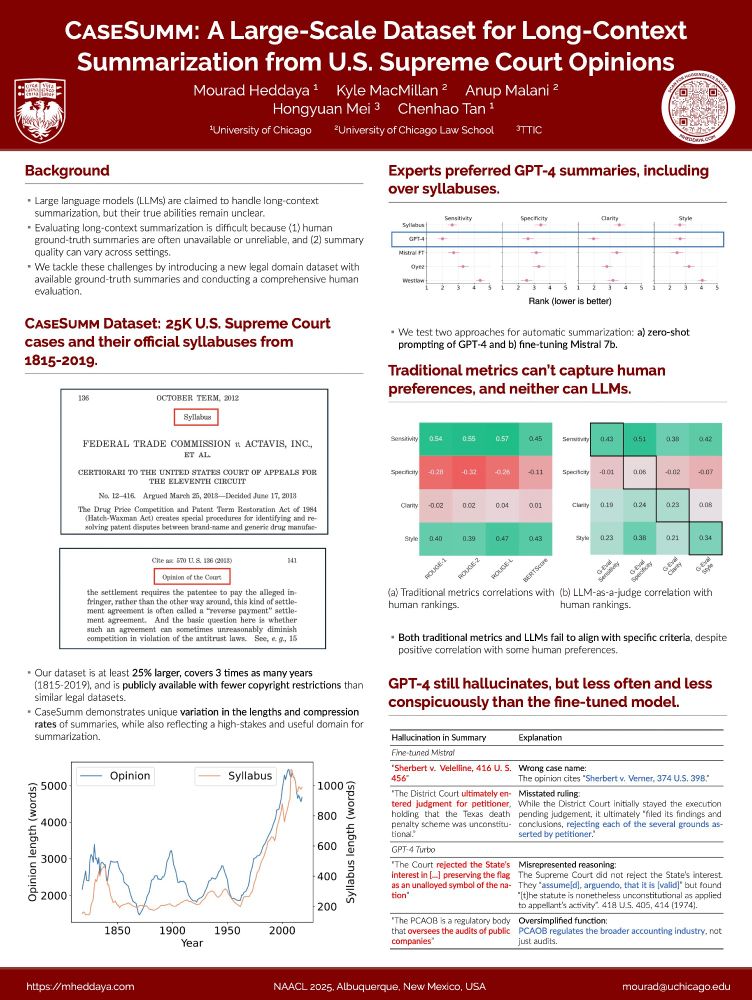

@mheddaya.bsky.social CaseSumm and LLM 🧑‍⚖️ (Thursday 2-3:30)

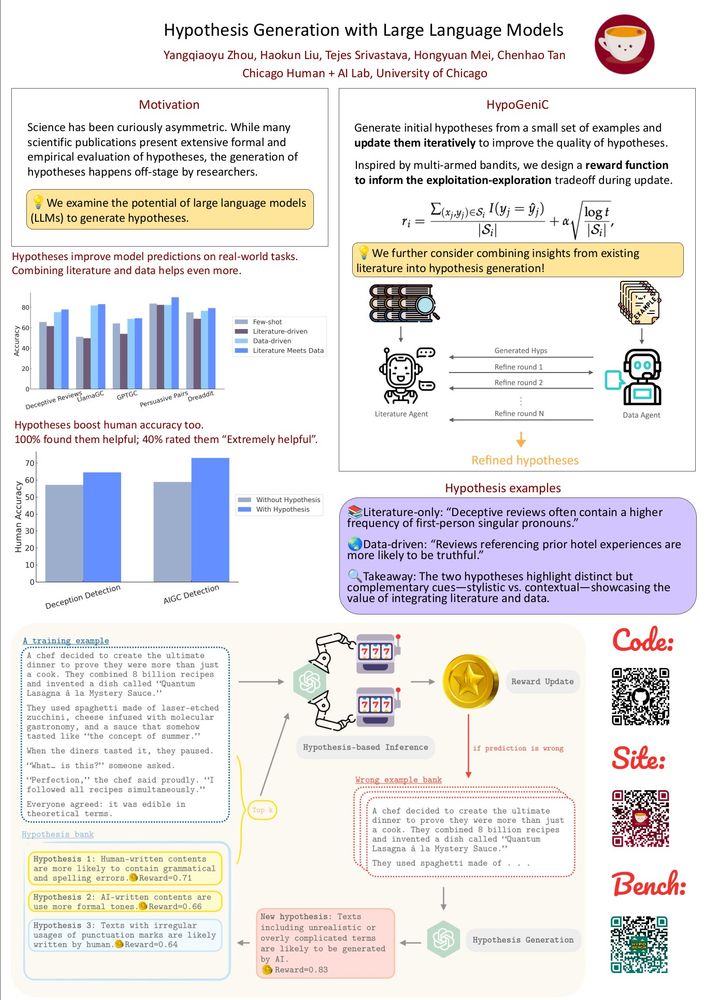

@haokunliu.bsky.social @qiaoyu-rosa.bsky.social hypothesis generation 🔬 (Saturday at 4pm)

There is much excitement about leveraging LLMs for scientific hypothesis generation, but principled evaluations are missing - let’s dive into HypoBench together.

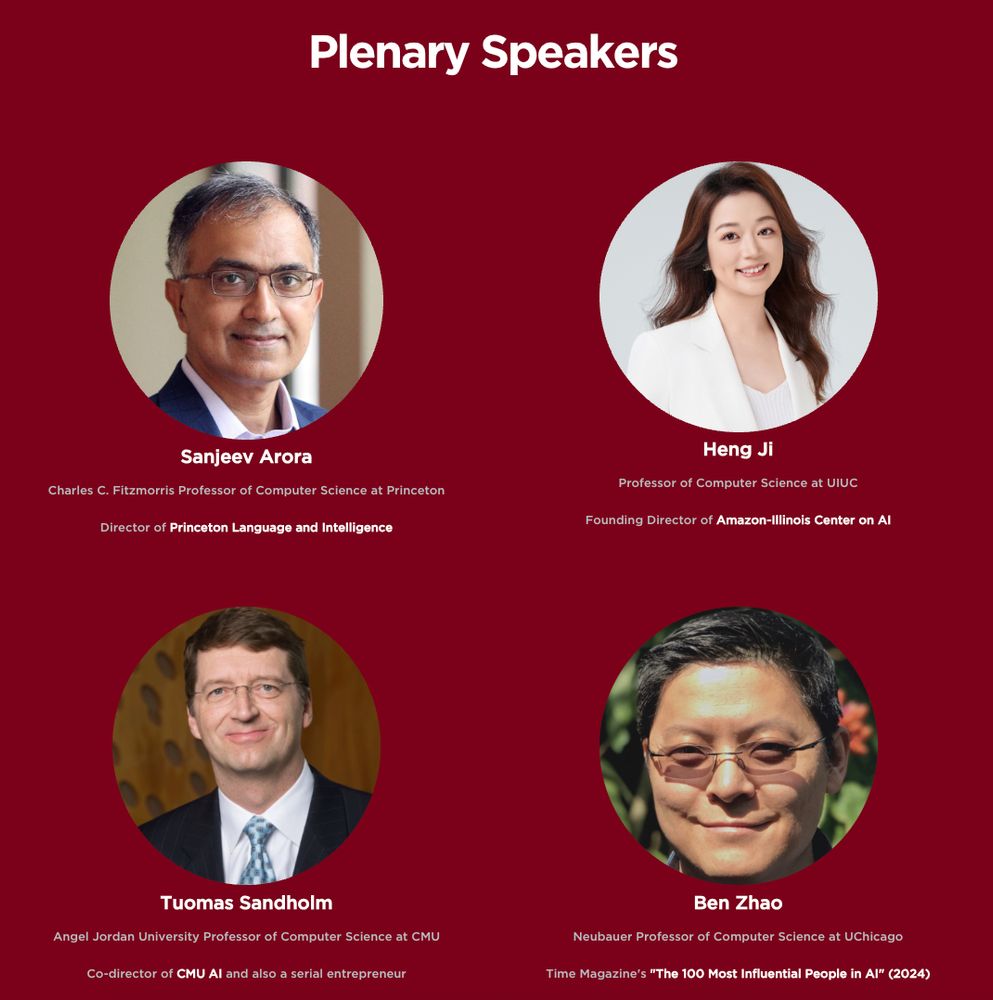

We are also actively looking for sponsors. Reach out if you are interested!

Please repost! Help spread the word!

Joint work with: @ggarbacea.bsky.social, Alexis Bellot, Jonathan Richens, Henry Papadatos, Simeon Campos, and Rohin Shah from Google DeepMind, University of Chicago, and SaferAI

In a new paper, we use subtasks to assess capabilities. Perhaps surprisingly, LLMs often fail to fully employ their capabilities, i.e. they are not fully *goal-directed* 🧵

arxiv.org/abs/2504.118...

challenging? openreview.net/pdf?id=Vwgjk...

In our TMLR 2025 paper, we discuss the approaches, learning methodologies, and model architectures used to generate text with desirable attributes, along with the corresponding evaluation metrics.
