Gokul Swamy

@gokul.dev

4.2K followers

420 following

110 posts

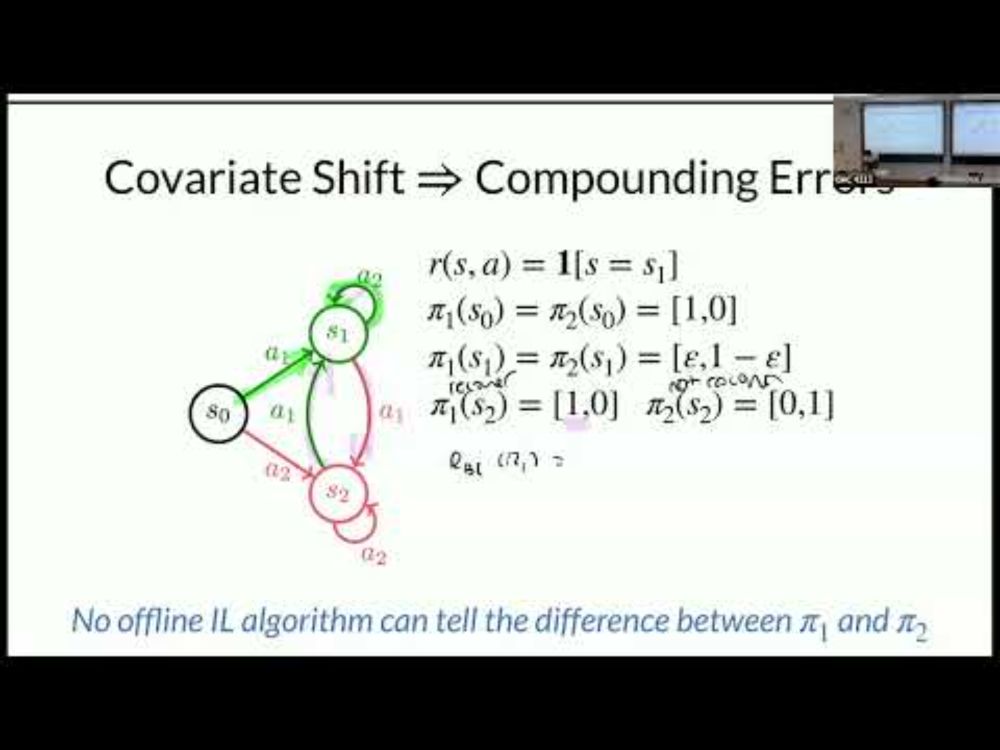

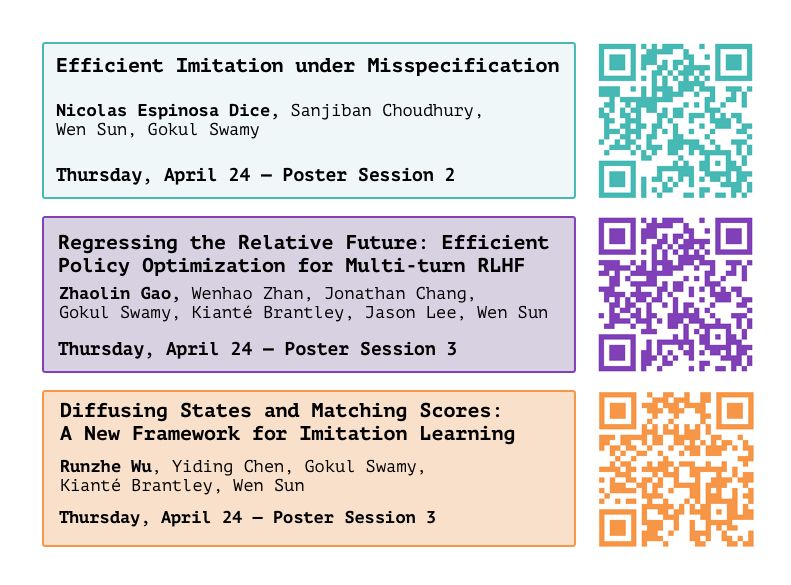

final-year PhD student at @cmurobotics.bsky.social working on efficient algorithms for interactive learning (e.g. imitation / RL / RLHF). no model is an island. prefers email. https://gokul.dev/.

Posts


Pinned: Mar 6

Aug 23 (2 posts)

Jul 15 (6 posts)

2 reposts

Jun 20 (5 posts)

Apr 27