Gaspard Lambrechts

@gsprd.be

2.1K followers

650 following

26 posts

PhD Student doing RL in POMDP at the University of Liège - Intern at McGill - gsprd.be

Posts

Media

Videos

Starter Packs

Reposted by Gaspard Lambrechts

Reposted by Gaspard Lambrechts

Reposted by Gaspard Lambrechts

Gaspard Lambrechts

@gsprd.be

· Jun 9

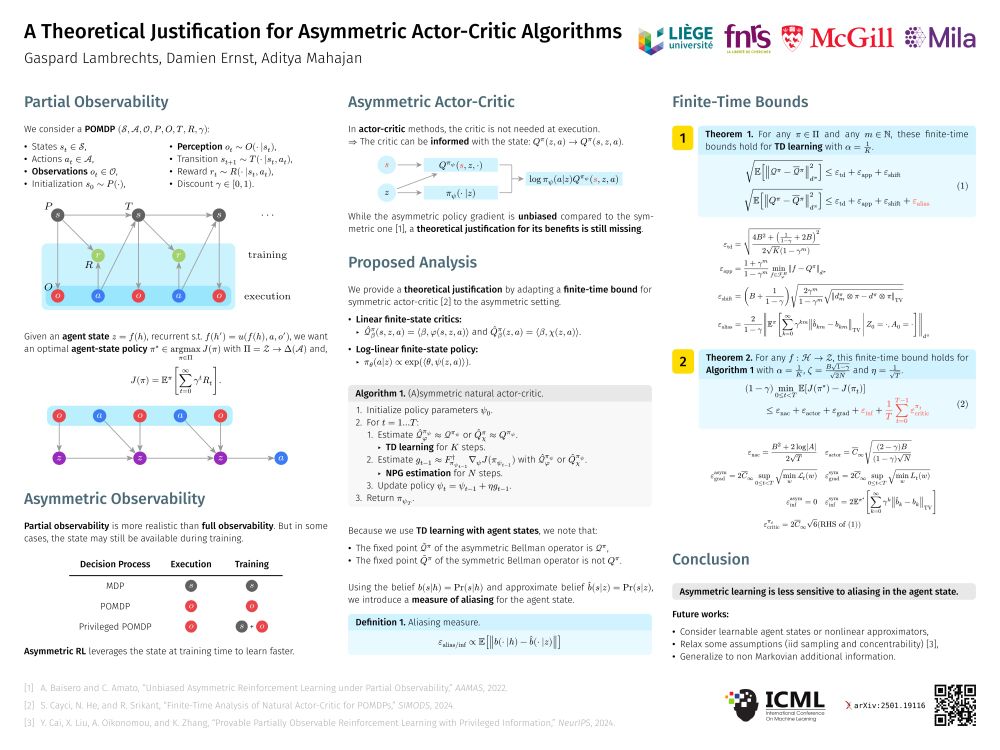

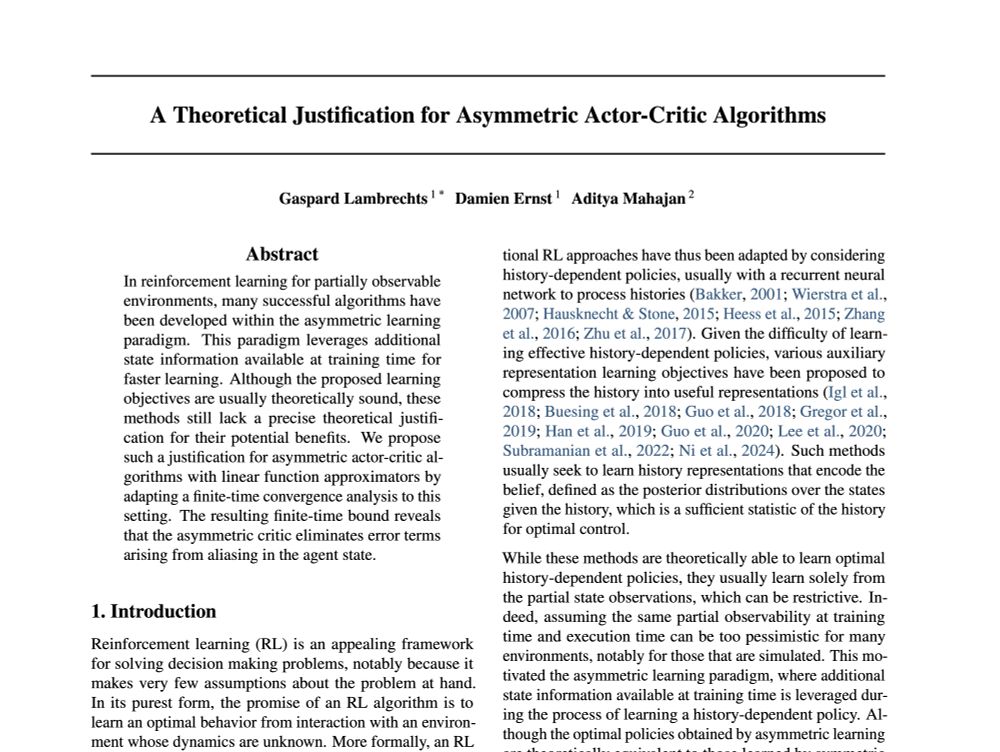

A Theoretical Justification for Asymmetric Actor-Critic Algorithms

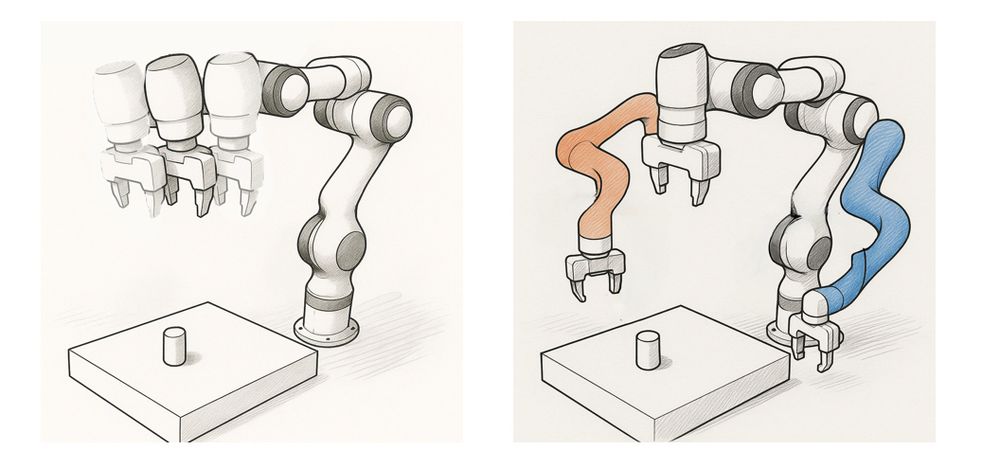

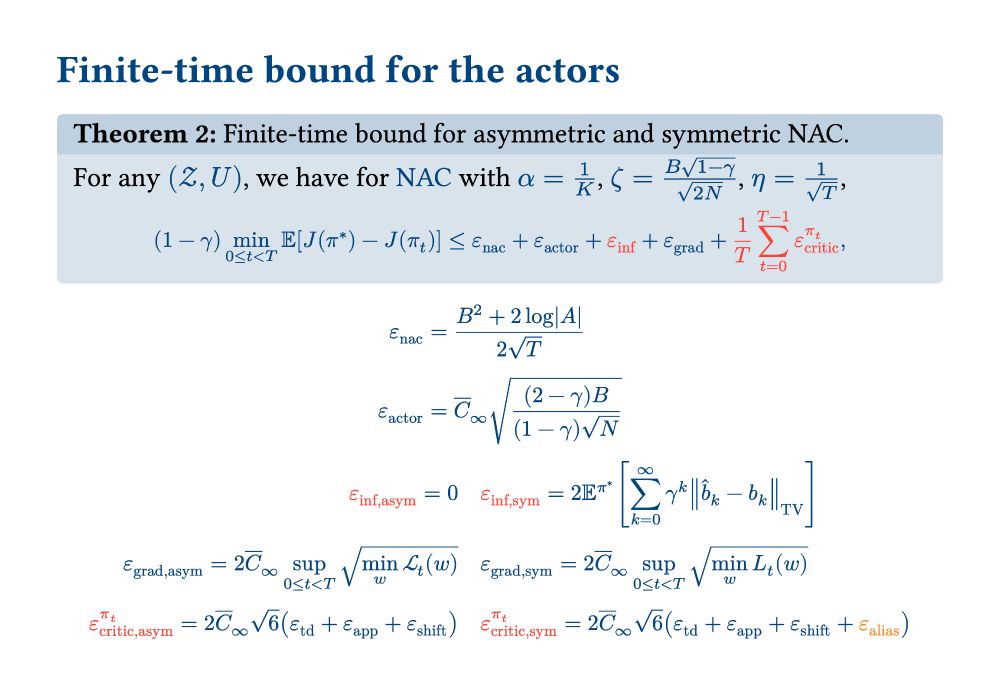

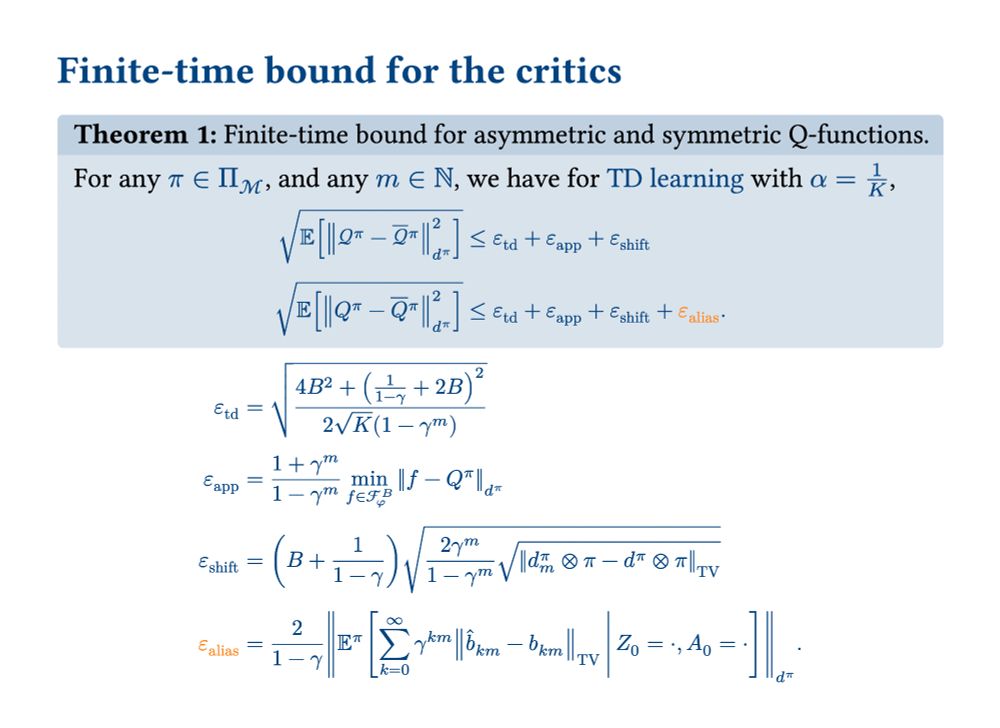

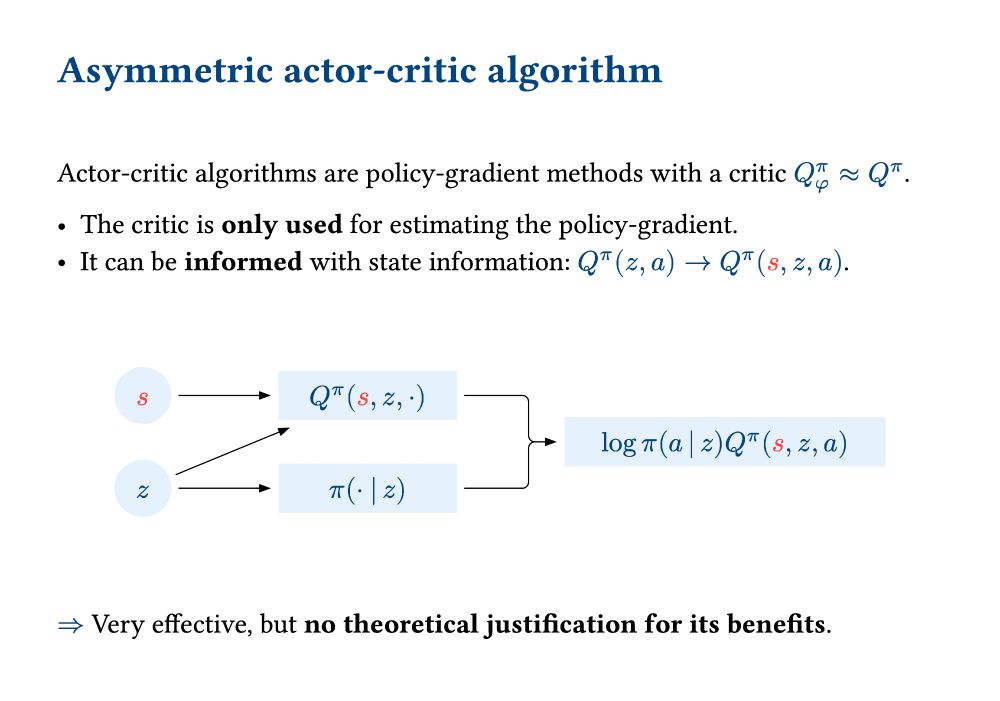

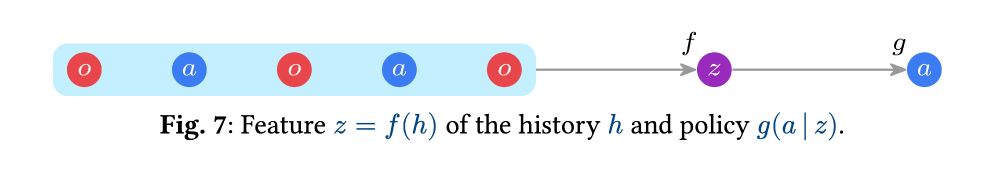

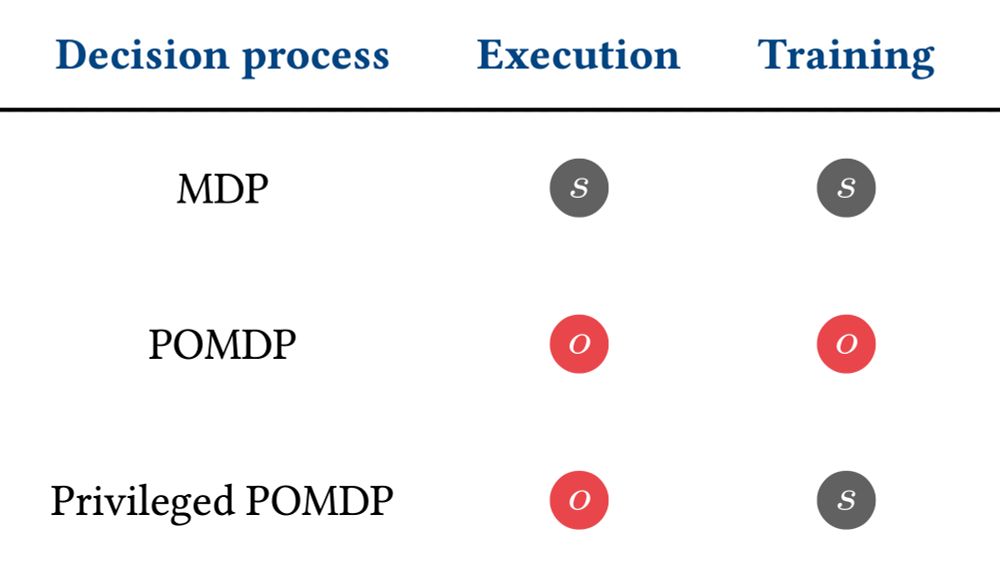

In reinforcement learning for partially observable environments, many successful algorithms have been developed within the asymmetric learning paradigm. This paradigm leverages additional state inform...

arxiv.org

Gaspard Lambrechts

@gsprd.be

· Jun 9

Gaspard Lambrechts

@gsprd.be

· Jun 9