hbaniecki.com

Check out the paper on arXiv: arxiv.org/abs/2508.05430

Code to be released soon

👆4/4

👇3/4

👇2/4

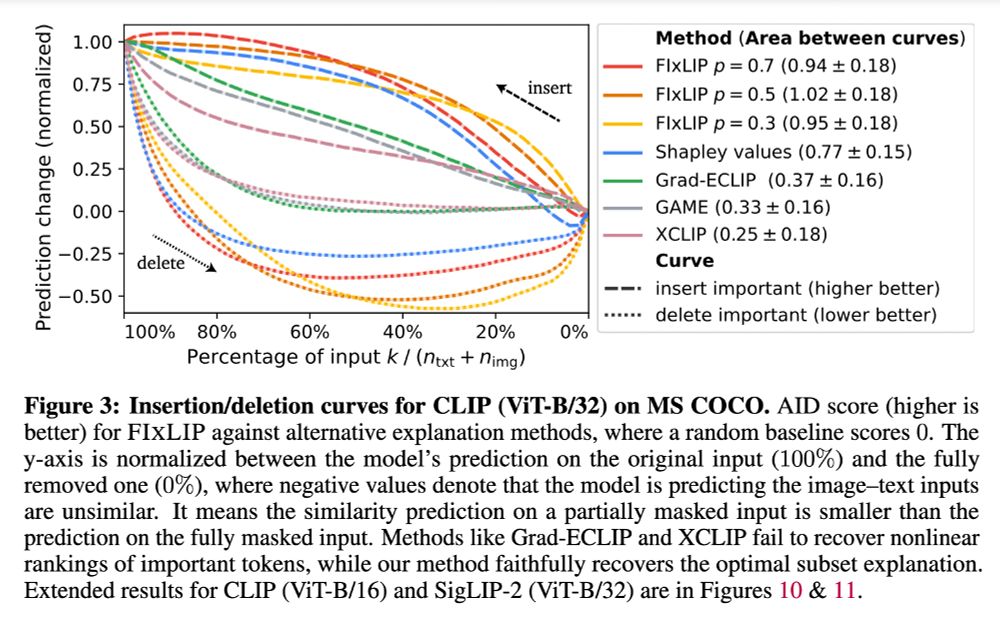

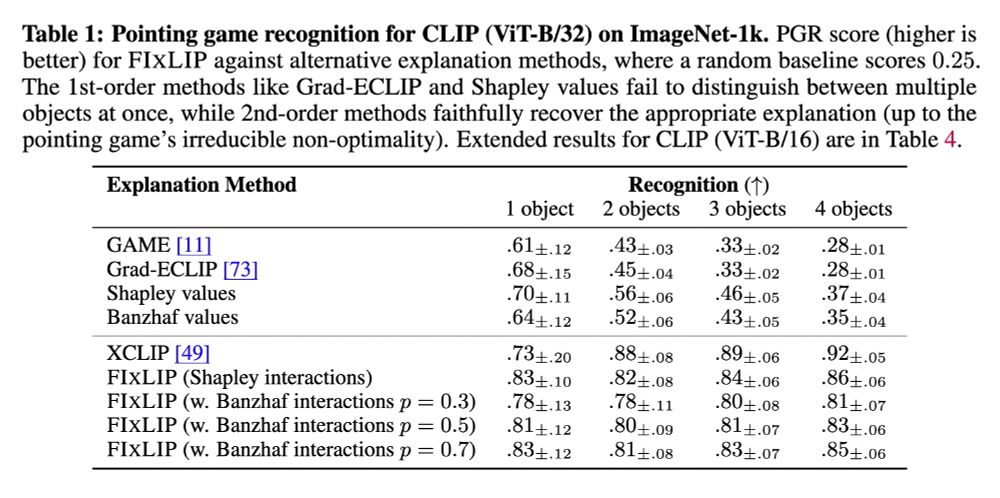

We introduce faithful interaction explanations of CLIP models (FIxLIP), offering a unique perspective on interpreting image–text similarity predictions.

👇1/4

👇4/5

👇3/5

This is joint work with Giuseppe Casalicchio, Bernd Bischl & Przemyslaw Biecek, to be presented in Singapore 🇸🇬

👇1/5
