assistant professor @ Uni Bonn & Lamarr Institute

interested in self-learning & autonomous robots, likes all the messy hardware problems of real-world experiments

https://rpl.uni-bonn.de/

https://hermannblum.net/

16.30 - 17.00: @sattlertorsten.bsky.social on Vision Localization Across Modalities

full schedule: localizoo.com/workshop

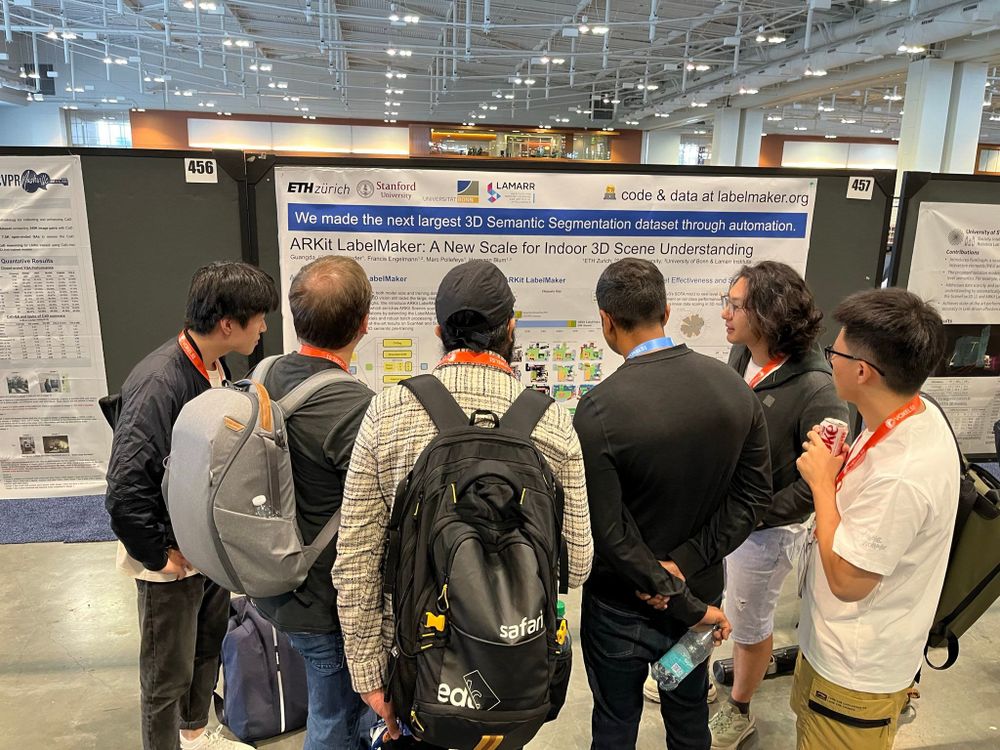

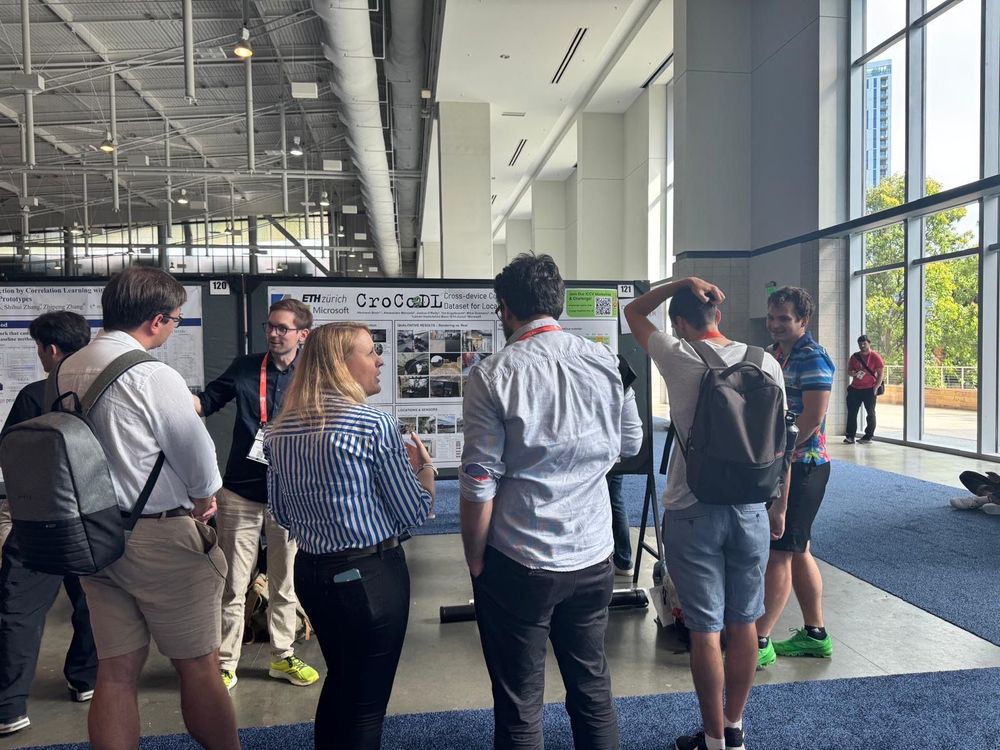

Contributed works cover Visual Localization, Visual Place Recognition, Room Layout Estimation, Novel View Synthesis, 3D Reconstruction

14.15 - 14.45: @ayoungk.bsky.social on Bridging heterogeneous sensors for robust and generalizable localization

full schedule: localizoo.com/workshop/

13.15 - 13.45: @gabrielacsurka.bsky.social on Privacy Preserving Visual Localization

full schedule: buff.ly/kM1Ompf

localizoo.com/workshop

Speakers: @gabrielacsurka.bsky.social, @ayoungk.bsky.social, David Caruso, @sattlertorsten.bsky.social

w/ @zbauer.bsky.social @mihaidusmanu.bsky.social @linfeipan.bsky.social @marcpollefeys.bsky.social

Key idea 💡 Instead of detecting frontiers in a map, we directly predict them from images. Hence, FrontierNet can implicitly learn visual semantic priors to estimate information gain. That speeds up exploration compared to geometric heuristics.

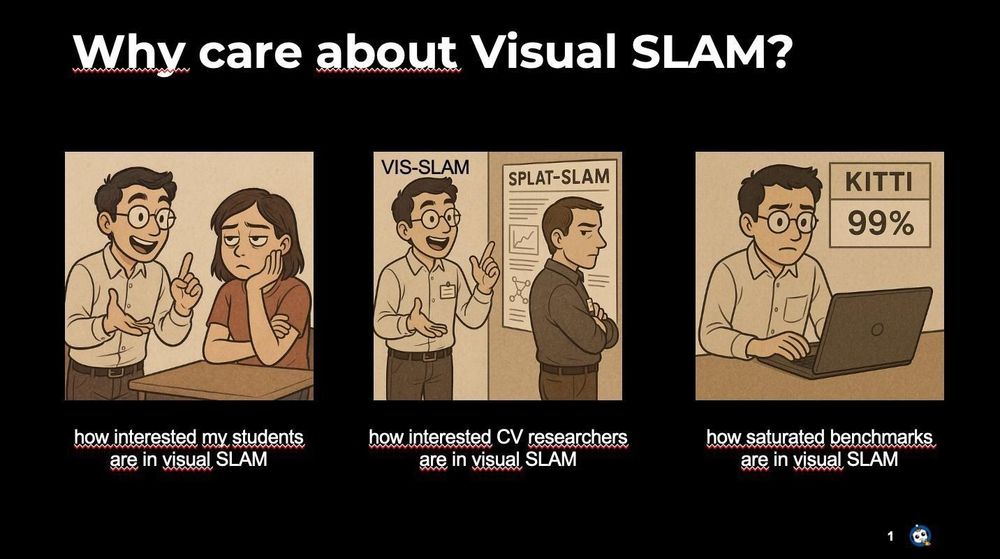

I'll be up later today at the Visual SLAM workshop at @roboticsscisys.bsky.social

buff.ly/ADHxPsX

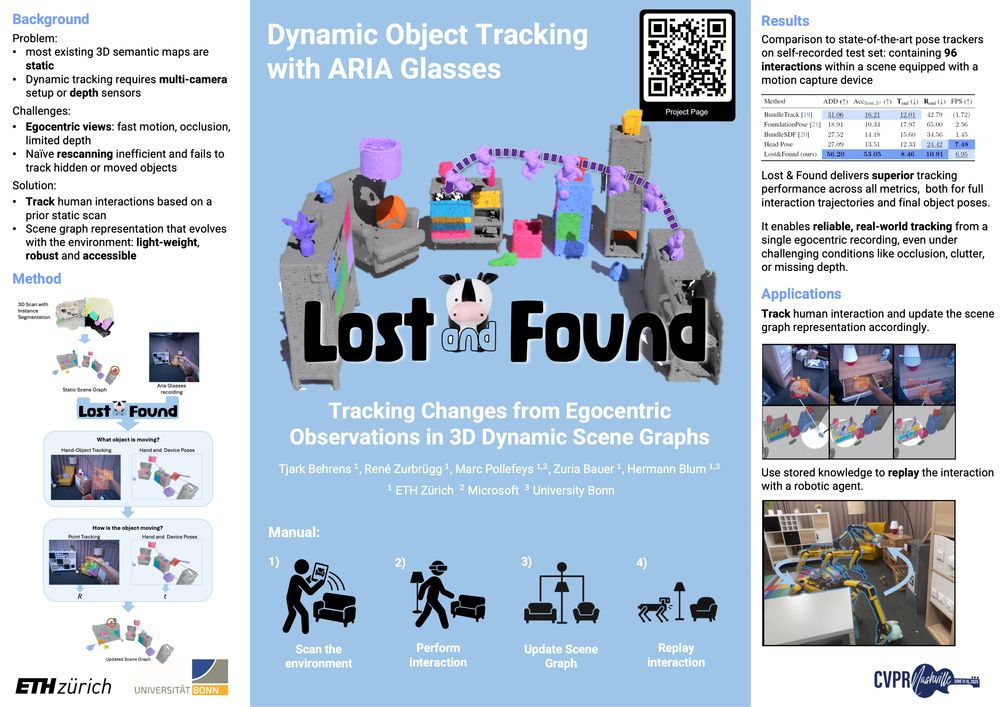

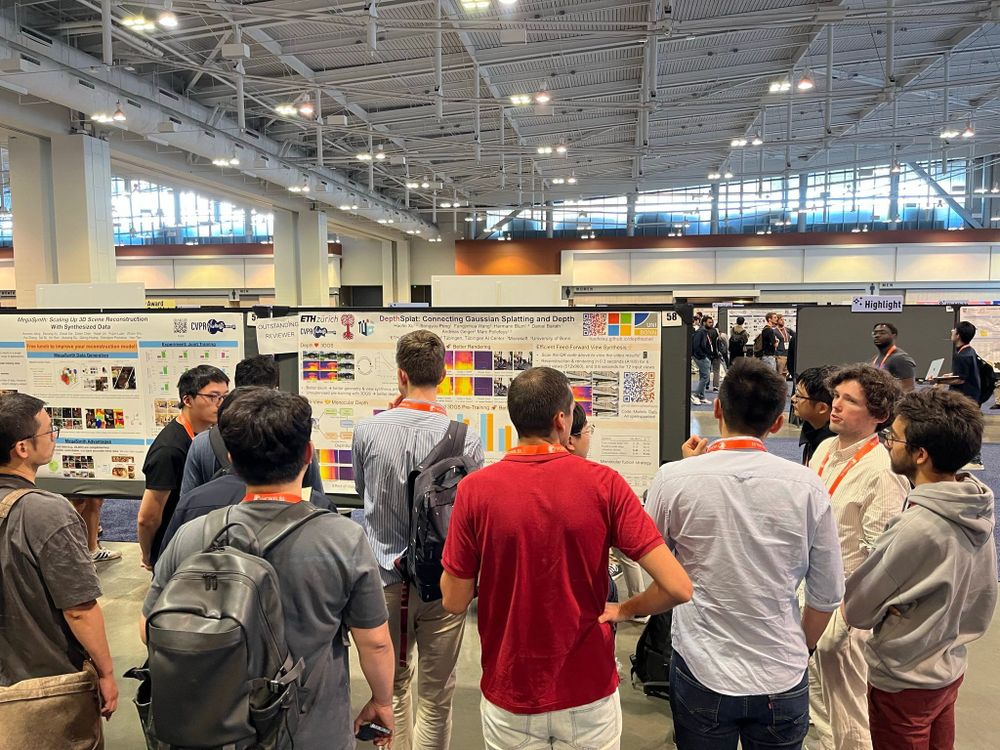

Really cool to see something I got to work with during my PhD featured as a Swiss highlight 🤖

🔗 behretj.github.io/LostAndFound/

📄 arxiv.org/abs/2411.19162

📺 youtu.be/xxMsaBSeMXo

#ICRA submission week? Let us brighten your day with a real "SpotLight" 💡

🔗 timengelbracht.github.io/SpotLight/

📄 arxiv.org/abs/2409.11870

We detect almost any light switch, generate an interaction for it, and can then map which switch turns on which light

#Robotics
