Helen Toner

@hlntnr.bsky.social

1K followers

590 following

51 posts

AI, national security, China. Part of the founding team at @csetgeorgetown.bsky.social (opinions my own). Author of Rising Tide on Substack: helentoner.substack.com

Helen Toner

@hlntnr.bsky.social

· May 19

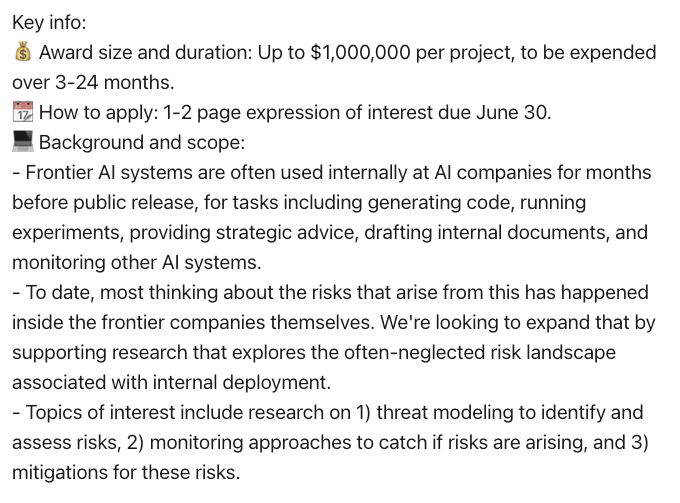

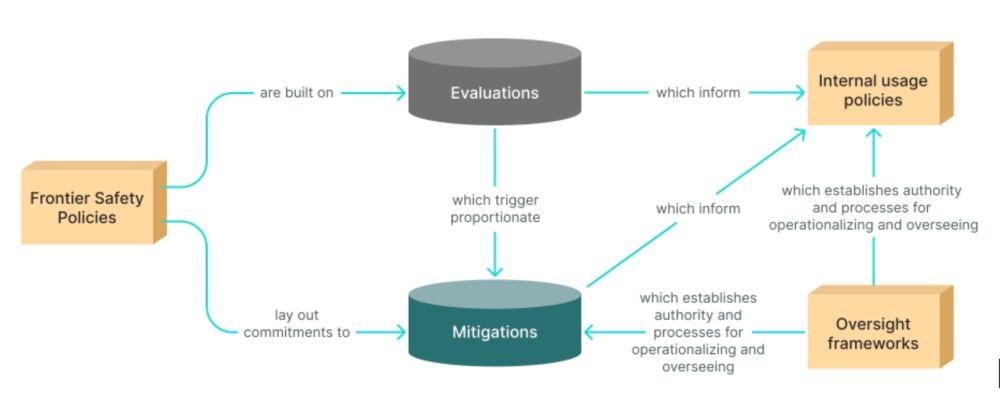

AI Behind Closed Doors: a Primer on The Governance of Internal Deployment — Apollo Research

In the race toward increasingly capable artificial intelligence (AI) systems, much attention has been focused on how these systems interact with the public. However, a critical blind spot exists in ou...

www.apolloresearch.ai

Helen Toner

@hlntnr.bsky.social

· May 19

Foundational Research Grants | Center for Security and Emerging Technology

Foundational Research Grants (FRG) supports the exploration of foundational technical topics that relate to the potential national security implications of AI over the long term. In contrast to most C...

cset.georgetown.edu

Helen Toner

@hlntnr.bsky.social

· May 12

The FUTURE AND ITS ENEMIES: The Growing Conflict Over Creativity, Enterprise, and Progress

By Virginia Postrel.

amazon.com
