Aditya Vashistha

@imadityav.bsky.social

1.5K followers

56 following

33 posts

Assistant Professor at Cornell. Research in HCI4D, Social Computing, Responsible AI, and Accessibility. https://www.adityavashistha.com/


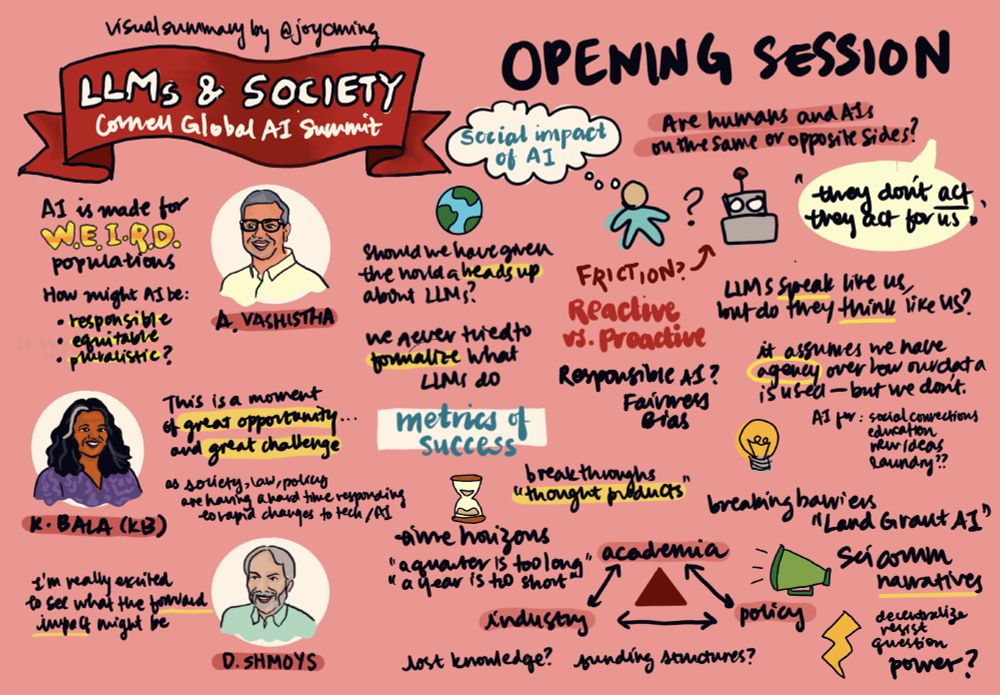

Aditya Vashistha

@imadityav.bsky.social

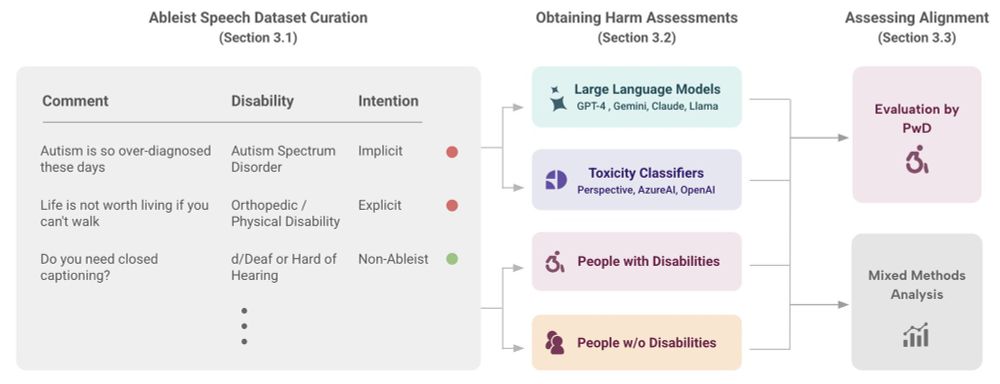

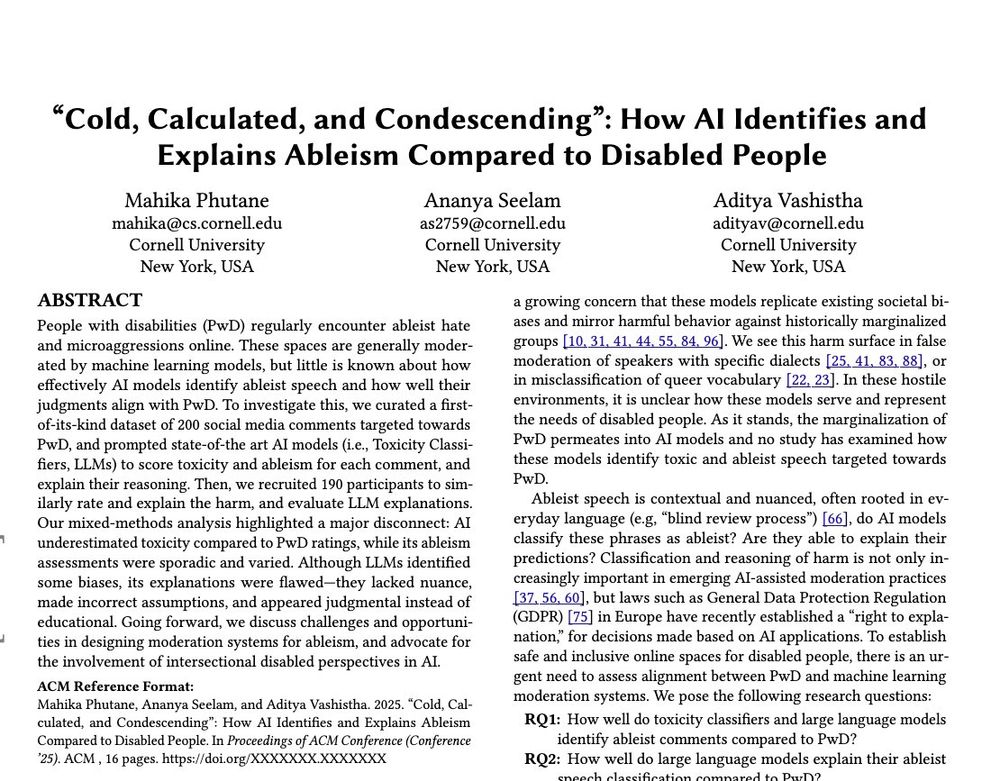
