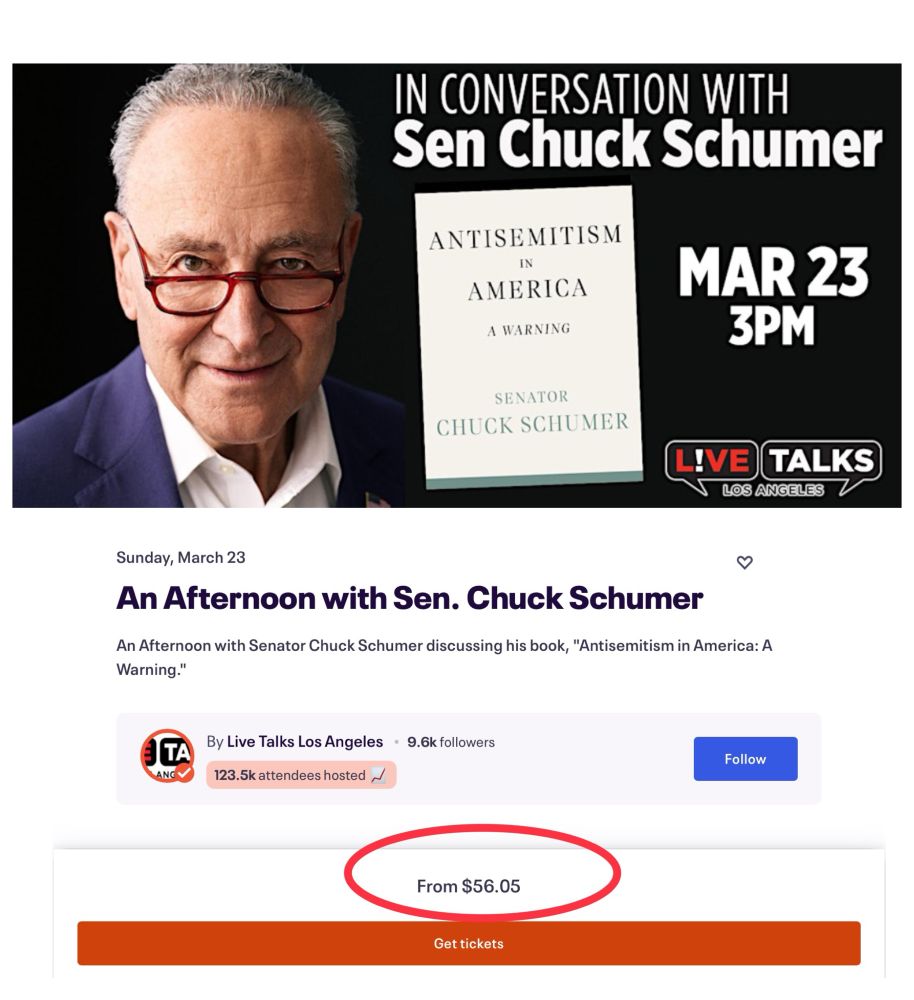

Absolute insanity. While Americans are scared and in crisis mode, he’s basically saying, let them eat cake.

@schumer.senate.gov Step Down.

This is a simple small-scale replication of inference-time scaling

It was cheap: 16xH100 for 26 minutes (so what, ~$6?)

It replicates inference-time scaling using SFT only (no RL)

Extremely data frugal: 1000 samples

arxiv.org/abs/2501.19393
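For scale: 16 GPUs for 26 minutes is 16 × 26 / 60 ≈ 6.9 GPU-hours. The ~$6 figure therefore implies a price a bit under $1 per H100-hour. Below is a quick sketch of the arithmetic. The per-GPU-hour prices are assumed placeholders, not quoted cloud rates.

```python
# Back-of-envelope cost of a run: 16 GPUs x 26 minutes.
# The $/GPU-hour prices below are ASSUMPTIONS; real H100 rates vary widely.

def gpu_hours(num_gpus: int, minutes: float) -> float:
    """Total GPU-hours consumed by a run."""
    return num_gpus * minutes / 60.0

def run_cost(num_gpus: int, minutes: float, usd_per_gpu_hour: float) -> float:
    """Estimated dollar cost at a flat per-GPU-hour price."""
    return gpu_hours(num_gpus, minutes) * usd_per_gpu_hour

if __name__ == "__main__":
    print(f"GPU-hours: {gpu_hours(16, 26):.2f}")  # about 6.93
    for rate in (1.0, 2.0, 3.0):  # hypothetical $/H100-hour rates
        print(f"${rate:.2f}/GPU-hr -> ${run_cost(16, 26, rate):.2f}")
```

Even at a few dollars per GPU-hour, the run stays in the tens of dollars.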

1. The realization that meditation can be a path to peace, happiness, and creativity.

2. An artistic representation of the surreal and violent undercurrents of modern American life.

-quoting Threads' michael.tucker_

recent Chinese innovations were:

1. unique in that they avoided cost

2. the right way

US academic dialog was dominated by more expensive and complex methods that wouldn’t have worked

even today, mere *days* after the R1 release, there are already reproductions. combined with the incoming huggingface repro, it seems like R1 is legit

notable: they claim they developed it in parallel, that most of their experiments were performed *prior to* the release of R1, and that they came to the same conclusions

hkust-nlp.notion.site/simplerl-rea...

emergence = extrapolating questions from the data

emergence is basically “higher order learning”. i like to argue that LLMs aren’t machine learning because they go this extra step

I think people have become far too concerned with the “correct” interpretations of media. Just experience it!!!

the raw duration of training, regardless of parallelization, is a huge risk, bc the cluster is saturated doing only one thing

so while you *could* build bigger models by training longer, that’s not feasible in practice due to risk

Tool innovations have always made the process of building software faster and cheaper; GenAI and AI agents will do the same.

But these are tools and efficiency gains.