James Tompkin

@jamestompkin.bsky.social

1.3K followers

230 following

16 posts

📸 jamestompkin.com and visual.cs.brown.edu 📸

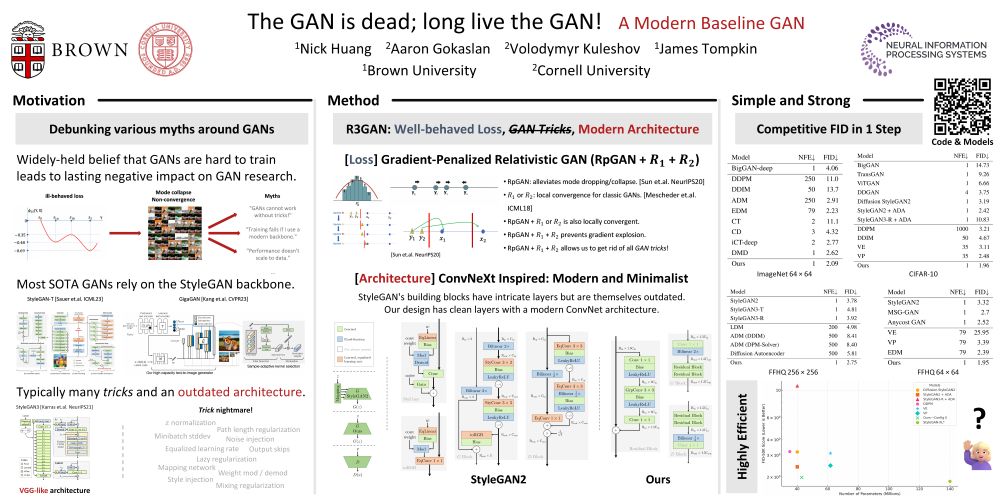

James Tompkin

@jamestompkin.bsky.social

· Jun 12

James Tompkin

@jamestompkin.bsky.social

· Jun 12

James Tompkin

@jamestompkin.bsky.social

· Mar 14

Reposted by James Tompkin

James Tompkin

@jamestompkin.bsky.social

· Jan 10

James Tompkin

@jamestompkin.bsky.social

· Jan 10

Reposted by James Tompkin

Chris Offner

@chrisoffner3d.bsky.social

· Nov 17

Reposted by James Tompkin