s&p in language models + mountain biking.

jaydeepborkar.github.io

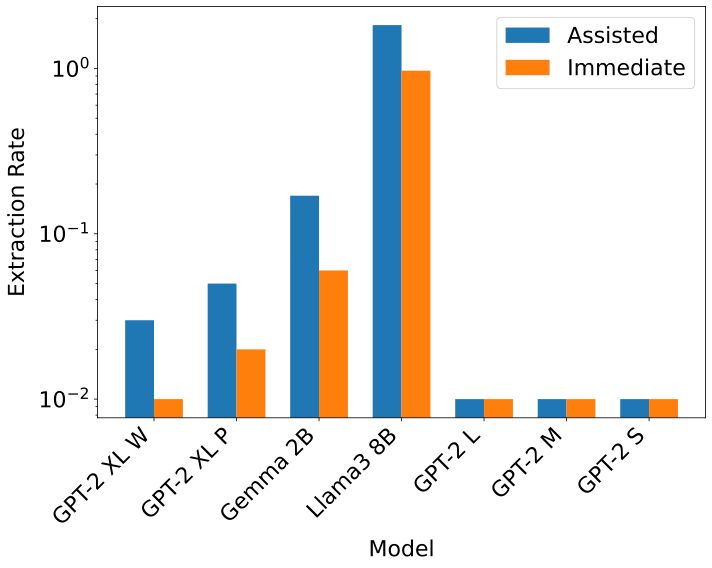

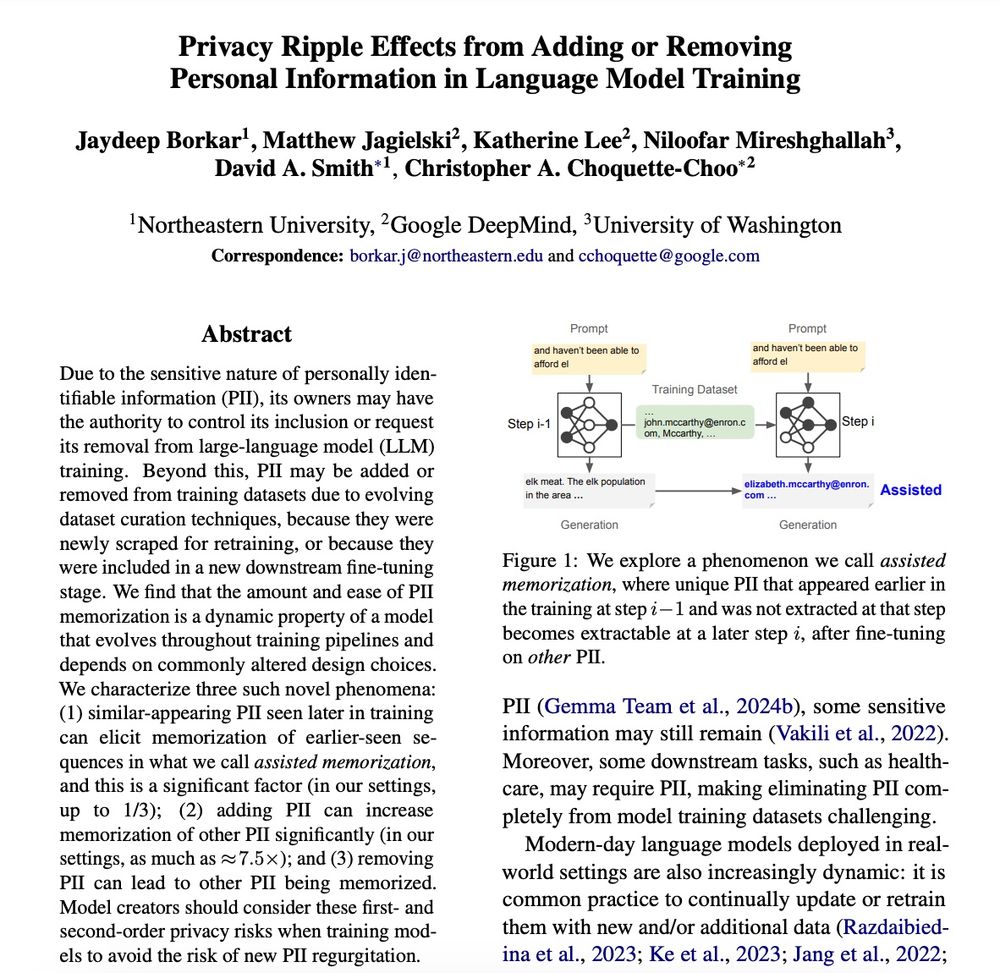

Knowledge distillation has been gaining traction for improving LLM utility. We find that distilled models don't just perform better; they also memorize significantly less training data than models trained with standard fine-tuning (reducing memorization by >50%). 🧵

If you’re in NYC and would like to hang out, please message me :)

paper: arxiv.org/pdf/2502.15680