Jia-Bin Huang

@jbhuang0604.bsky.social

2.7K followers · 32 following · 180 posts

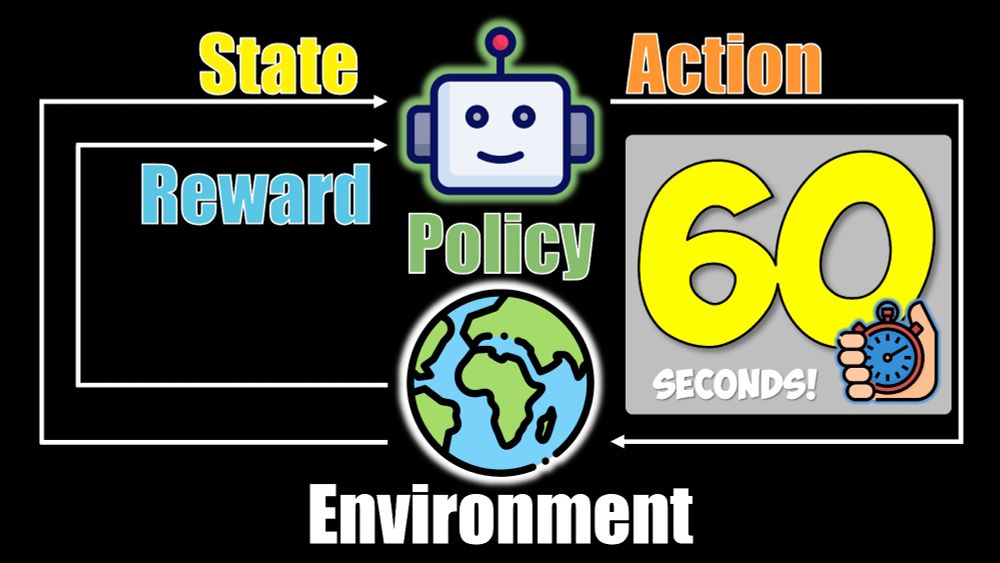

Associate Professor at UMD CS. YouTube: https://youtube.com/@jbhuang0604

Interested in how computers can learn and see.


Reposted by Jia-Bin Huang

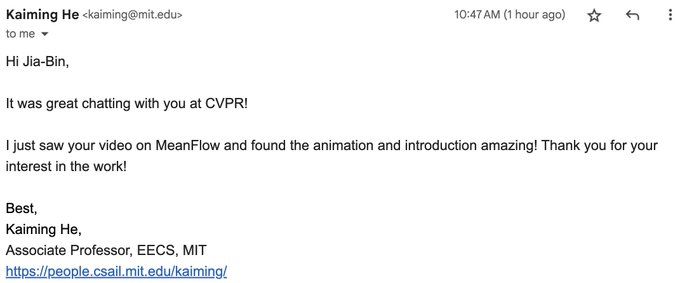

Jia-Bin Huang

@jbhuang0604.bsky.social

· May 21

Jia-Bin Huang

@jbhuang0604.bsky.social

· May 19