Dataset for vision-language reasoning where the model *generates images during the CoT*. Example: for geometry problems, it's helpful to draw lines in image space.

182K CoT labels: math, visual search, robot planning, and more.

Only downside: cc-by-nc license :(

Fully open vision encoder. Masks image, encodes patches, then trains student to match teacher's clusters. Key advance: Matryoshka clustering. Each slice of the embedding gets its own projection head and clustering objective. Fewer features == fewer clusters to match.
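Here is a minimal sketch of the Matryoshka clustering idea as I read it. Nested prefixes of one embedding each get their own head and their own, smaller, set of clusters. A student-teacher cross-entropy is computed per slice. The slice sizes, cluster counts, temperatures, and function names are all hypothetical. Each head is a single linear map straight to cluster logits. The EMA teacher update and centering used in DINO-style training are left out.

```python
import math
import random

# Hypothetical nested slices: (number of leading dims kept, number of clusters).
# Smaller slices get fewer clusters to match.
SLICES = [(8, 16), (16, 32), (32, 64)]


def softmax(logits, temp):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((x - m) / temp) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    # matrix is a list of rows with shape (n_out, n_in)
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def make_heads(rng):
    # One linear head per slice, mapping that slice to its own cluster logits
    return [
        [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(k)]
        for dim, k in SLICES
    ]


def matryoshka_loss(student_emb, teacher_emb, student_heads, teacher_heads,
                    t_student=0.1, t_teacher=0.04):
    """Average over slices of cross-entropy(teacher assignment, student assignment)."""
    total = 0.0
    for (dim, _), s_head, t_head in zip(SLICES, student_heads, teacher_heads):
        s_probs = softmax(matvec(s_head, student_emb[:dim]), t_student)
        # Lower teacher temperature gives sharper target assignments
        t_probs = softmax(matvec(t_head, teacher_emb[:dim]), t_teacher)
        total += -sum(t * math.log(s + 1e-12) for t, s in zip(t_probs, s_probs))
    return total / len(SLICES)


rng = random.Random(0)
student_heads, teacher_heads = make_heads(rng), make_heads(rng)
emb = [rng.gauss(0, 1) for _ in range(32)]
print(matryoshka_loss(emb, emb, student_heads, teacher_heads))
```

Every prefix has its own objective, so the usual motivation applies: a truncated prefix (say the first 8 dims) should stay usable as an embedding on its own.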

New benchmark of 1K videos, 1K captions, and 6K MCQs from accidents involving vulnerable road users (VRUs). Example: "why did the accident happen?" "(B): pedestrian moves or stays on the road."

Current VLMs get ~50-65% accuracy, much worse than humans (95%).

AMD paper: they find attention heads often have stereotyped sparsity patterns (e.g. only attending within an image, not across images). They generate sparse attention variants for each prompt. Theoretically saves ~35% of FLOPs for a 1-2% drop on benchmarks.
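The sketch below shows what one "within an image only" head pattern looks like as a mask. It also shows how the skipped share of query-key pairs translates into saved attention FLOPs. The segment layout is hypothetical, and so is the rule that text tokens keep plain causal attention. The paper's per-prompt generation of variants is not shown.

```python
def within_image_mask(segments):
    """Causal mask where image tokens only attend to tokens of the same image.

    segments[i] is an image index for image tokens, or None for text tokens.
    Assumption for this sketch: text tokens keep ordinary causal attention.
    mask[i][j] is True if query i may attend to key j.
    """
    n = len(segments)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):  # causal: only keys at or before the query
            if segments[i] is None or segments[i] == segments[j]:
                mask[i][j] = True
    return mask


def fraction_skipped(mask):
    """Share of causal query-key pairs that the sparse head never computes."""
    n = len(mask)
    causal_pairs = n * (n + 1) // 2
    kept = sum(sum(row) for row in mask)
    return 1 - kept / causal_pairs


# Two images of 3 tokens each, followed by 2 text tokens
segments = [0, 0, 0, 1, 1, 1, None, None]
mask = within_image_mask(segments)
print(fraction_skipped(mask))  # 0.25
```

Only the score and value FLOPs of that head scale with the kept pairs. QKV projections and MLPs are unaffected, so the end-to-end savings are smaller than the skipped fraction of any single head.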

Nvidia paper scaling RL to long videos. First trains with SFT on a synthetic long CoT dataset, then does GRPO with up to 512 video frames. Uses cached image embeddings + sequence parallelism, speeding up rollouts >2X.

Bonus: code is already up!
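Here is a rough sketch of why cached image embeddings can speed up rollouts, based on my reading rather than their code. GRPO samples a group of responses per prompt, and every response in the group sees the same video. So the vision encoder only needs to run once per video, not once per rollout. The class and function names are hypothetical and the encoder is a toy stand-in. Sequence parallelism is not shown.

```python
class EmbeddingCache:
    """Runs the (expensive) vision encoder once per video and reuses the result."""

    def __init__(self, encode_fn):
        self.encode_fn = encode_fn
        self.store = {}
        self.encoder_calls = 0

    def get(self, video_id, frames):
        if video_id not in self.store:
            self.encoder_calls += 1
            self.store[video_id] = self.encode_fn(frames)
        return self.store[video_id]


def toy_encoder(frames):
    # Stand-in for a ViT: one scalar "embedding" per frame
    return [sum(frame) / len(frame) for frame in frames]


def rollout_group(cache, video_id, frames, group_size, generate_fn):
    # All rollouts in a GRPO group share the same video, so encode it once
    embeddings = cache.get(video_id, frames)
    return [generate_fn(embeddings, seed) for seed in range(group_size)]


cache = EmbeddingCache(toy_encoder)
frames = [[0.1, 0.2, 0.3]] * 512  # up to 512 frames per video
outputs = rollout_group(cache, "video-0", frames, 8, lambda emb, seed: (len(emb), seed))
print(cache.encoder_calls)  # 1
```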

The GRPO setup is pretty standard. Interesting that they trained only on math instead of mixing in grounding and other possible RLVR tasks. They use Qwen-2.5-Instruct 32B to judge answer accuracy in addition to rule-based verification.
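Here is a minimal sketch of a GRPO-style reward and advantage computation with a rule-based check plus an LLM judge. The post doesn't say how the two signals are combined. This sketch assumes the judge is consulted only when the rule-based check fails. The judge is a stub standing in for a Qwen-2.5-Instruct 32B call, and the normalization rule is hypothetical.

```python
import statistics


def rule_based_match(answer, ground_truth):
    # Hypothetical normalization: trim whitespace, compare case-insensitively
    return answer.strip().lower() == ground_truth.strip().lower()


def reward(answer, ground_truth, judge):
    """1.0 if the answer is judged correct, else 0.0 (assumed combination rule)."""
    if rule_based_match(answer, ground_truth):
        return 1.0
    return 1.0 if judge(answer, ground_truth) else 0.0


def group_advantages(rewards, eps=1e-6):
    """GRPO: normalize each rollout's reward by its group's mean and std."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)  # implementations differ on pstdev vs stdev
    return [(r - mean) / (std + eps) for r in rewards]


def toy_judge(answer, ground_truth):
    # Stub for the LLM judge: accepts a couple of equivalent forms of 1/2
    return ground_truth == "1/2" and answer.strip() in {"0.5", "one half"}


answers = ["1/2", "0.5", "2", "1/3"]  # one group of rollouts for one prompt
rewards = [reward(a, "1/2", toy_judge) for a in answers]
print(rewards)  # [1.0, 1.0, 0.0, 0.0]
print(group_advantages(rewards))
```

The judge rescues answers that are correct but don't match the string ("0.5" vs "1/2"). Without it, those rollouts would get negative advantages.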

InternViT-6B stitched with QwQ-32B. SFT warmup, GRPO on math, then a small SFT fine-tune at the end.

Good benches, actual ablations, and interesting discussion.

Details: 🧵

Results: +18 points on V* over Qwen2.5-VL, and +5 points over GRPO alone.

Data: trains on a subset of MME-RealWorld, evaluates on V*.

I've been waiting for a paper like this! Trains the LLM to iteratively crop regions of interest to answer a question, and the only reward is final-answer correctness.

Details in thread 👇
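Here is a minimal sketch of the episode structure described above. At each step the policy either crops its current view or answers. Reward is given only for the final answer, and intermediate crops earn nothing. The policy interface, the step limit, and the toy policy are hypothetical. A real policy would be the VLM emitting crop boxes as text.

```python
def crop(image, box):
    """image: 2-D list of pixel rows; box: (top, left, bottom, right), ends exclusive."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def run_episode(image, question, policy, answer, max_steps=4):
    """policy(view, question, history) returns ("crop", box) in the current
    view's coordinates, or ("answer", text). Returns (final_answer, reward, n_crops)."""
    view = image
    history = []
    for _ in range(max_steps):
        action, arg = policy(view, question, history)
        if action == "answer":
            return arg, float(arg == answer), len(history)  # outcome-only reward
        view = crop(view, arg)
        history.append(arg)
    return None, 0.0, len(history)  # ran out of steps: no answer, no reward


def toy_policy(view, question, history):
    # Zoom into the bottom-right quadrant until the view is small, then "read" it
    h, w = len(view), len(view[0])
    if h <= 2:
        return "answer", str(view[0][0])
    return "crop", (h // 2, w // 2, h, w)


image = [[r * 8 + c for c in range(8)] for r in range(8)]
print(run_episode(image, "what is in the corner?", toy_policy, "54"))  # ('54', 1.0, 2)
```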

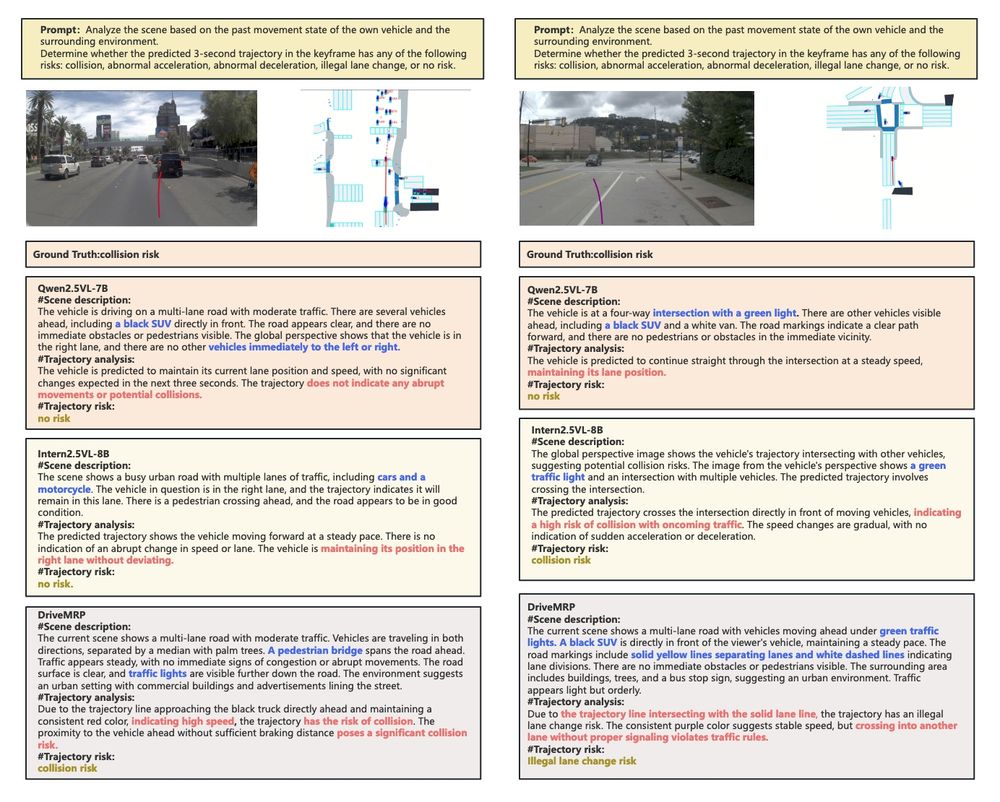

They synthesize high-risk scenes derived from NuPlan and render each one as both a bird's-eye-view image and a front camera view.

👇

Instead of segment + postprocess, generate lane graphs autoregressively. Node == vertex in BEV space, edge == control point for Bezier curves. At each step, a vertex is added and the adjacency matrix adds one row + column.

They formulate this process as next token prediction. Neat!
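Here is a minimal sketch of the data structure being generated. Each step appends one BEV vertex and grows the adjacency matrix by one row and one column. Each edge entry stores a Bézier control point. This assumes quadratic Béziers with a single control point per edge, and edges that only run from existing vertices into the new one. The class is hypothetical, and the tokenization used for next-token prediction is not shown.

```python
class LaneGraph:
    def __init__(self):
        self.vertices = []   # (x, y) positions in BEV space
        self.adjacency = []  # adjacency[i][j]: control point of edge i -> j, or None

    def add_vertex(self, xy, incoming):
        """One autoregressive step. incoming maps an existing vertex index to the
        control point of its edge into the new vertex."""
        for row in self.adjacency:
            row.append(None)  # grow by one column
        new_index = len(self.vertices)
        self.vertices.append(xy)
        self.adjacency.append([None] * (new_index + 1))  # grow by one row
        for src, ctrl in incoming.items():
            self.adjacency[src][new_index] = ctrl
        return new_index

    def edge_points(self, src, dst, steps=4):
        """Sample the quadratic Bezier curve for edge src -> dst."""
        p0, p2 = self.vertices[src], self.vertices[dst]
        p1 = self.adjacency[src][dst]
        points = []
        for k in range(steps + 1):
            t = k / steps
            a, b, c = (1 - t) ** 2, 2 * (1 - t) * t, t ** 2
            points.append((a * p0[0] + b * p1[0] + c * p2[0],
                           a * p0[1] + b * p1[1] + c * p2[1]))
        return points


g = LaneGraph()
start = g.add_vertex((0.0, 0.0), {})
end = g.add_vertex((10.0, 0.0), {start: (5.0, 5.0)})  # curved lane segment
print(g.edge_points(start, end))
```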

When training on many RL tasks, they found that weakness in any one task leads to model collapse across all tasks: "effective RL demands finely tuned, hack-resistant verifiers in every domain"

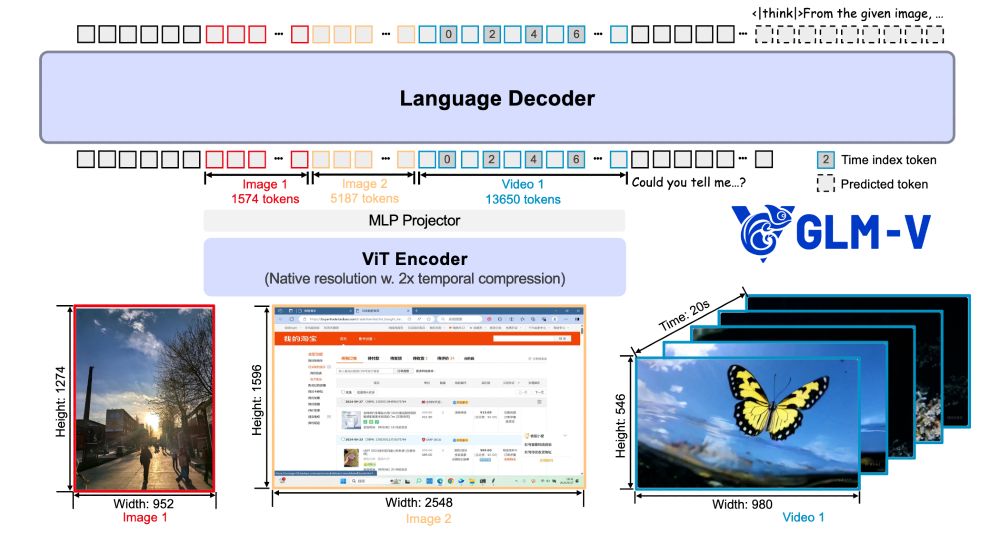

* Captioning: 10B image-text pairs from the web

* Interleaved data: websites, papers, and 100 million digitized books

* OCR: 220 million images

* Grounding: GLIPv2 for images, Playwright for GUIs

* Video: "academic, web, and proprietary sources"

* Instruction: 50M samples

Tons of hints but few ablations 😞 e.g. they upweight difficult-but-learnable samples every iteration, but don't show how this compares to a baseline.

9B variant beats Qwen2.5-VL-7B on many standard benchmarks.

Details in thread 👇
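For reference, here is one common way to "upweight difficult-but-learnable samples". Track each sample's pass rate p over recent rollouts and sample with weight p * (1 - p). That weight is zero for samples the model always solves or never solves, and highest at p = 0.5. This heuristic is an assumption, not the paper's stated rule, which is exactly what an ablation would have clarified. The function names are hypothetical.

```python
import random


def sample_weights(pass_rates):
    """Weight each sample by p * (1 - p); fall back to uniform if all weights are 0."""
    raw = [p * (1 - p) for p in pass_rates]
    total = sum(raw)
    if total == 0:
        return [1 / len(pass_rates)] * len(pass_rates)
    return [r / total for r in raw]


def next_batch(samples, pass_rates, batch_size, rng):
    # Re-run every iteration with updated pass rates from the latest rollouts
    weights = sample_weights(pass_rates)
    return rng.choices(samples, weights=weights, k=batch_size)


rng = random.Random(0)
samples = ["a", "b", "c", "d"]
pass_rates = [0.0, 0.5, 1.0, 0.25]  # "a" never solved, "c" always solved
print(sample_weights(pass_rates))
print(next_batch(samples, pass_rates, 10, rng))
```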
