Jenelle Feather

@jfeather.bsky.social

1.2K followers

510 following

51 posts

Flatiron Research Fellow #FlatironCCN. PhD from #mitbrainandcog. Incoming Asst Prof #CarnegieMellon in Fall 2025. I study how humans and computers hear and see.

Reposted by Jenelle Feather

Sonica Saraf

@sonicasaraf.bsky.social

· Jun 29

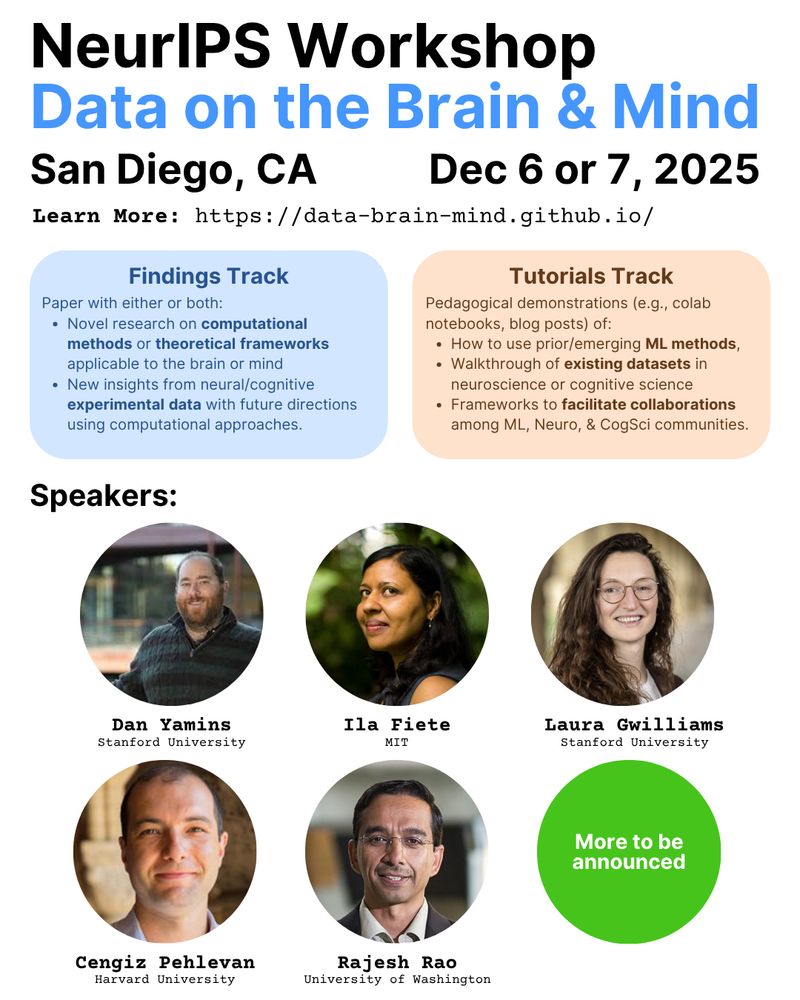

Variations in neuronal selectivity create efficient representational geometries for perception

Our visual capabilities depend on neural response properties in visual areas of our brains. Neurons exhibit a wide variety of selective response properties, but the reasons for this diversity are unkn...

www.biorxiv.org

Jenelle Feather

@jfeather.bsky.social

· Jun 18

Jenelle Feather

@jfeather.bsky.social

· Apr 24
