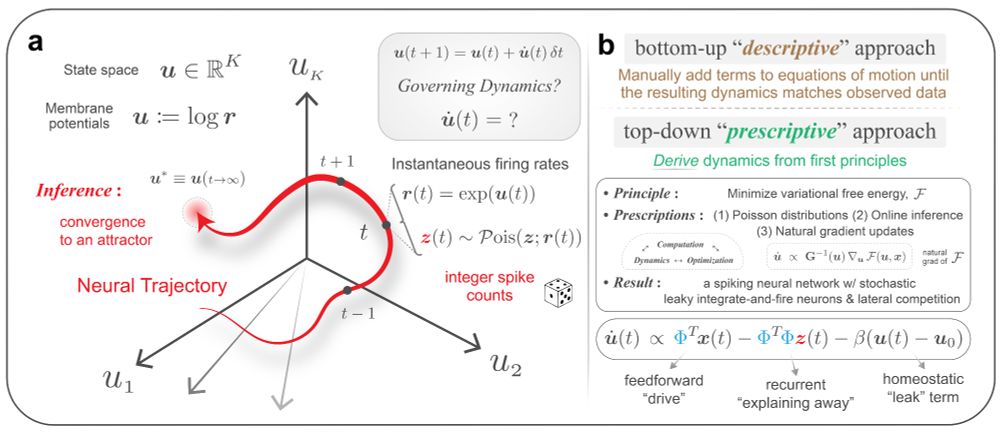

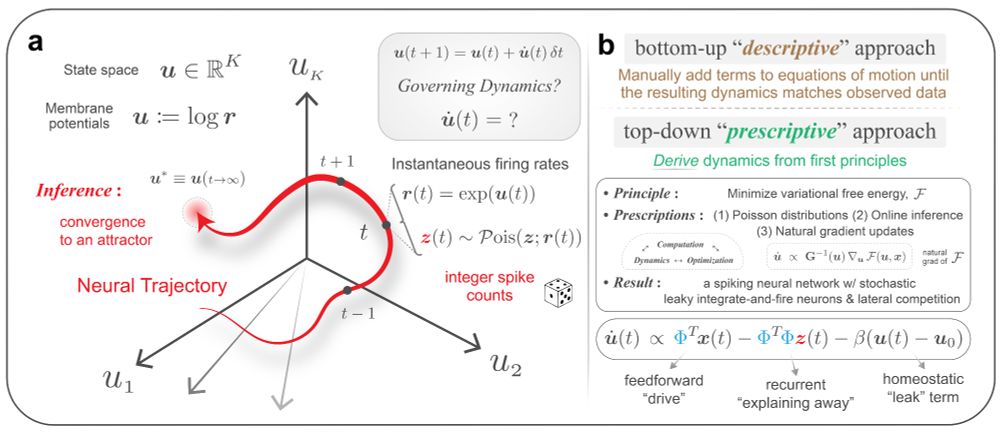

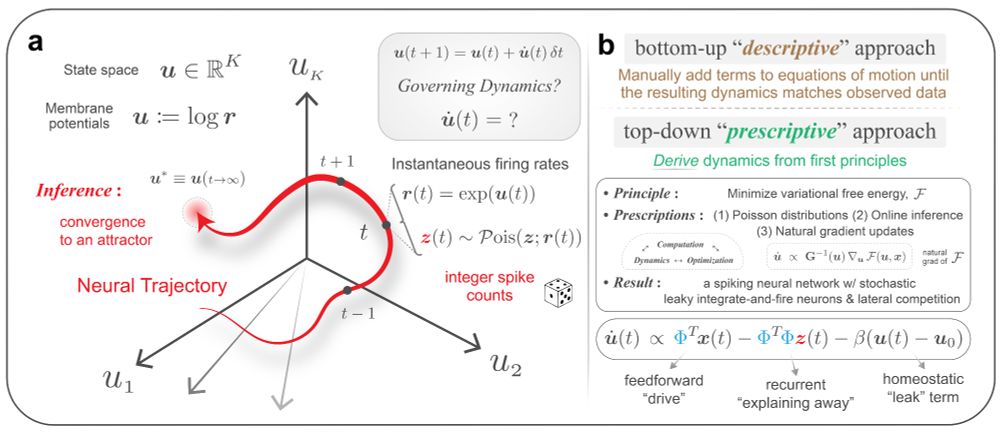

In a new preprint, we:

✅ "Derive" a spiking recurrent network from variational principles

✅ Show it does amazing things like out-of-distribution generalization

👉[1/n]🧵

w/ co-lead Dekel Galor & PI @jcbyts.bsky.social

🧠🤖🧠📈

In our latest Journal Club session, Thelonious Cooper presented "The neuron as a direct data-driven controller" by Moore et al., with special commentary from Mitya Chklovskii.

🎥 www.youtube.com/watch?v=0P7k...

🧠🤖🧠📈

Anne delivered an amazing synthesis of her extensive work on how working memory shapes reward-based learning in humans.

📜 Read the paper: nature.com/articles/s41...

📽️ Watch the full presentation: www.youtube.com/watch?v=eEqZ...

🧠🤖🧠📈

Computational modeling of error patterns during reward-based learning shows evidence that habit learning (value-free!) supplements working memory in 7 human datasets.

rdcu.be/eQjLN

Adam's talk covered a lot of ground — from his recent work on distributional RL (nature.com/articles/s41...) to a broader discussion of RL & the brain.

📽️ Watch the full meeting here: www.youtube.com/watch?v=Xe7B...

🧠🤖🧠📈

“The thesis of this book is that the dominant ideas that have shaped #neuroscience are best understood as attempts to simplify the brain.” 🧠

…which is itself a simplification 😂

See how Monty, a cortex-inspired model, crushes powerful ViTs in out-of-distribution & continual learning tasks, all while being millions of times more efficient in data & FLOPs!

📽️ Watch here: www.youtube.com/watch?v=Q0FF...

🧠🤖🧠📈

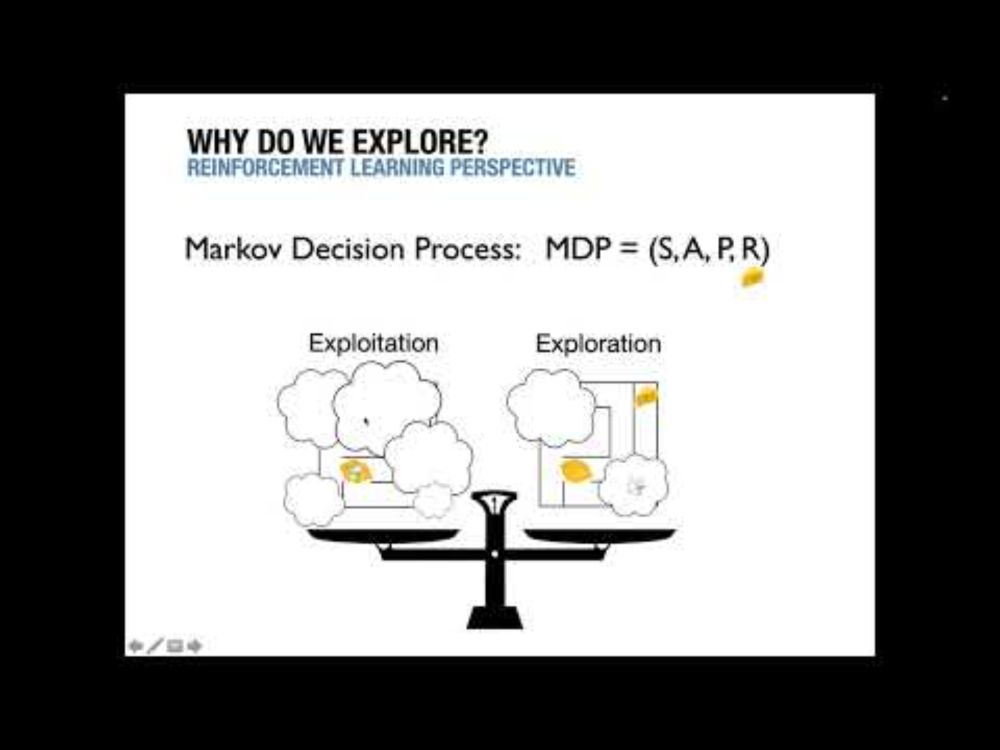

Fritz introduced an information-theoretic, first-principles approach to modeling exploration through the maximization of "predicted information gain."

📽️ Watch the full presentation here: www.youtube.com/watch?v=rlF-...

🧠🤖🧠📈
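
To make "predicted information gain" concrete, here is a minimal sketch, assuming an agent that learns a discrete outcome distribution per action with a Dirichlet-count (additive-smoothing) estimator. PIG is taken here as the expected KL divergence between the updated estimate (after one more hypothetical observation) and the current one, averaged over outcomes the current estimate predicts. The function names and the `alpha` prior are illustrative assumptions, not the talk's exact formulation.

```python
import numpy as np

def estimate(counts, alpha=1.0):
    """Posterior-predictive outcome probabilities from observed counts,
    under an assumed symmetric Dirichlet(alpha) prior (additive smoothing)."""
    c = np.asarray(counts, dtype=float) + alpha
    return c / c.sum()

def kl(p, q):
    """KL(p || q) in nats; p and q are strictly positive here."""
    return float(np.sum(p * np.log(p / q)))

def predicted_information_gain(counts, alpha=1.0):
    """Expected KL(updated estimate || current estimate), where the
    expectation is over outcomes predicted by the current estimate."""
    counts = np.asarray(counts, dtype=float)
    current = estimate(counts, alpha)
    pig = 0.0
    for k in range(len(counts)):
        updated = counts.copy()
        updated[k] += 1  # imagine observing outcome k once more
        pig += current[k] * kl(estimate(updated, alpha), current)
    return pig

def choose_action(count_table, alpha=1.0):
    """Greedy exploration (hypothetical policy): pick the action whose
    outcome model is expected to change the most, i.e. highest PIG."""
    gains = [predicted_information_gain(c, alpha) for c in count_table]
    return int(np.argmax(gains)), gains

# Action 0 has been sampled 50 times, action 1 never: PIG favors action 1.
action, gains = choose_action([[25, 25], [0, 0]])
print(action, gains)
```

The design choice worth noting: PIG shrinks as counts grow, so well-explored actions become uninteresting on their own, with no extrinsic reward needed.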

go.bsky.app/2LmKSDN

www.youtube.com/watch?v=E0A0...

- Anne Collins (Professor @ucberkeleyofficial.bsky.social)

- Niels Leadholm (Research Manager @thousandbrains.org)

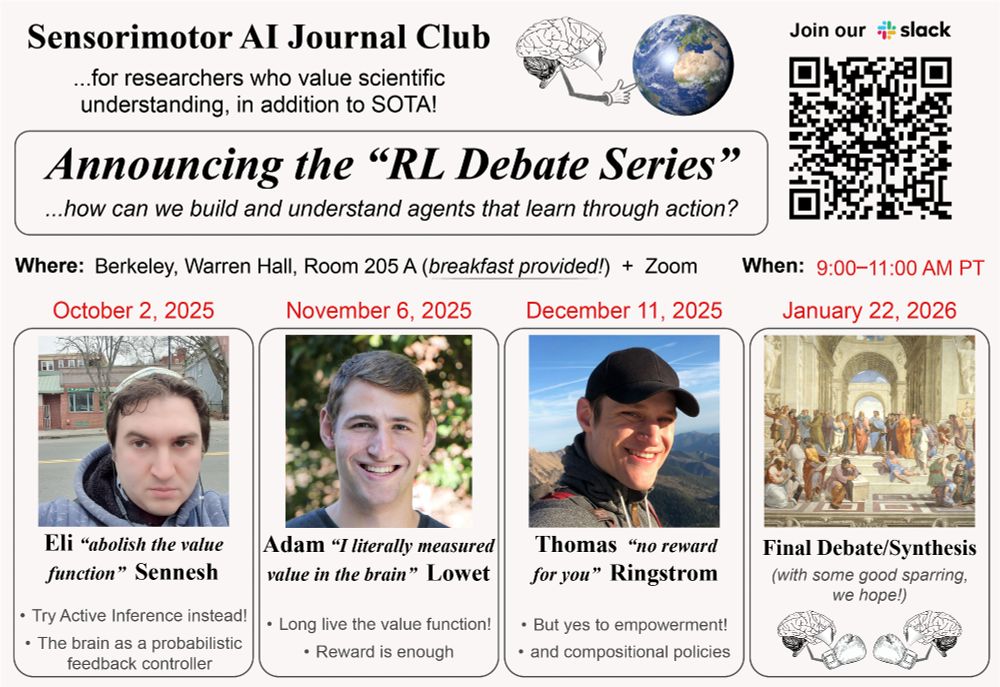

Things are heating up!!

See the flyer for details👇

🌎 sensorimotorai.github.io/debates/

🧠🤖🧠📈

Watch @elisennesh.bsky.social abolish the value function:

youtube.com/watch?v=E0A0...

🧠🤖🧠📈

Most of those cortical connections are recurrent, inside each area. What do they do?

New paper from me in Annual Reviews: 🧪 🧠📈 1/

www.annualreviews.org/content/jour...

Also, big thanks to our reviewers. Their deep reading of the paper and thoughtful feedback really helped sharpen our contributions.

Stay tuned for a much-enhanced final version in a couple of weeks!

📢 To address these, the Sensorimotor AI Journal Club is launching the "RL Debate Series"👇

w/ @elisennesh.bsky.social, @noreward4u.bsky.social, @tommasosalvatori.bsky.social

🧵[1/5]

🧠🤖🧠📈

📜 (B)ayesian (O)nline learning in (N)on-stationary (E)nvironments (BONE; openreview.net/forum?id=ose...)

Many thanks to the first author Gerardo for an amazing presentation!

🎥 Watch it here: www.youtube.com/watch?v=49PP...

🧠🤖🧠📈

archive.org/details/Redw...

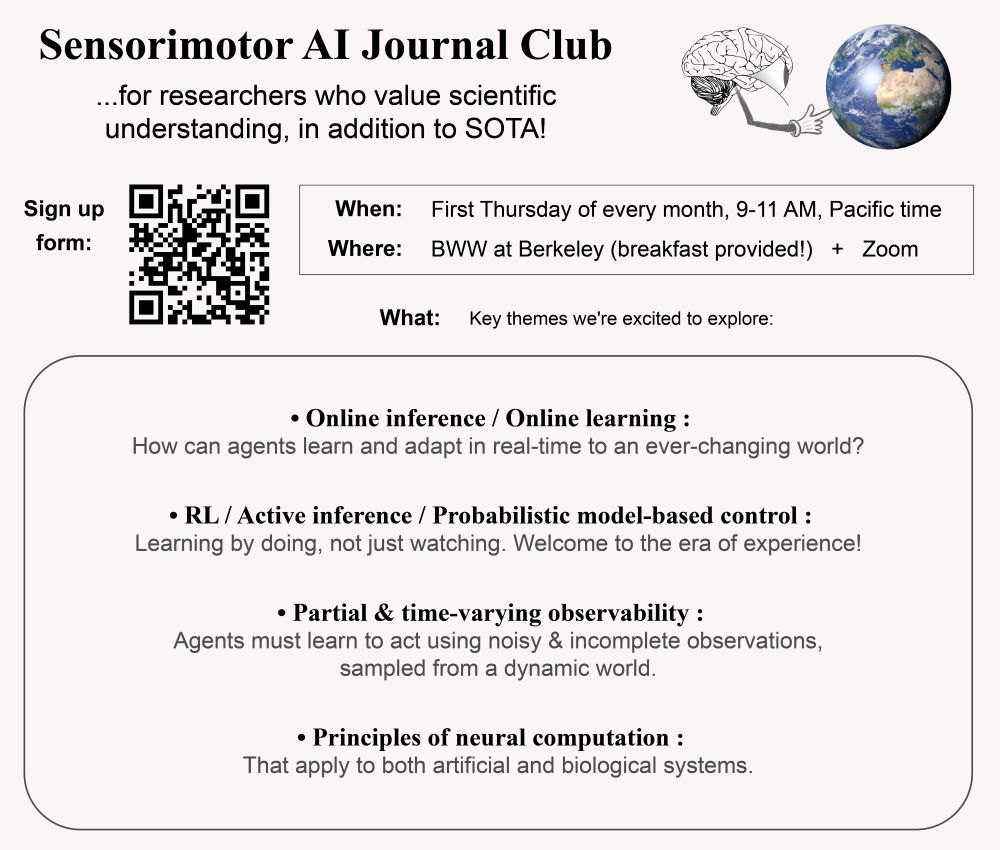

(...which we might as well just call the "AI Club for Non-conformists"👇😎)

📽️ full presentation: youtube.com/watch?v=efc7...

🧵[1/4]

🧠🤖🧠📈

w/ Kaylene Stocking, Tommaso Salvatori, and @elisennesh.bsky.social

Sign up link: forms.gle/o5DXD4WMdhTg...

More details below 🧵[1/5]

🧠🤖🧠📈

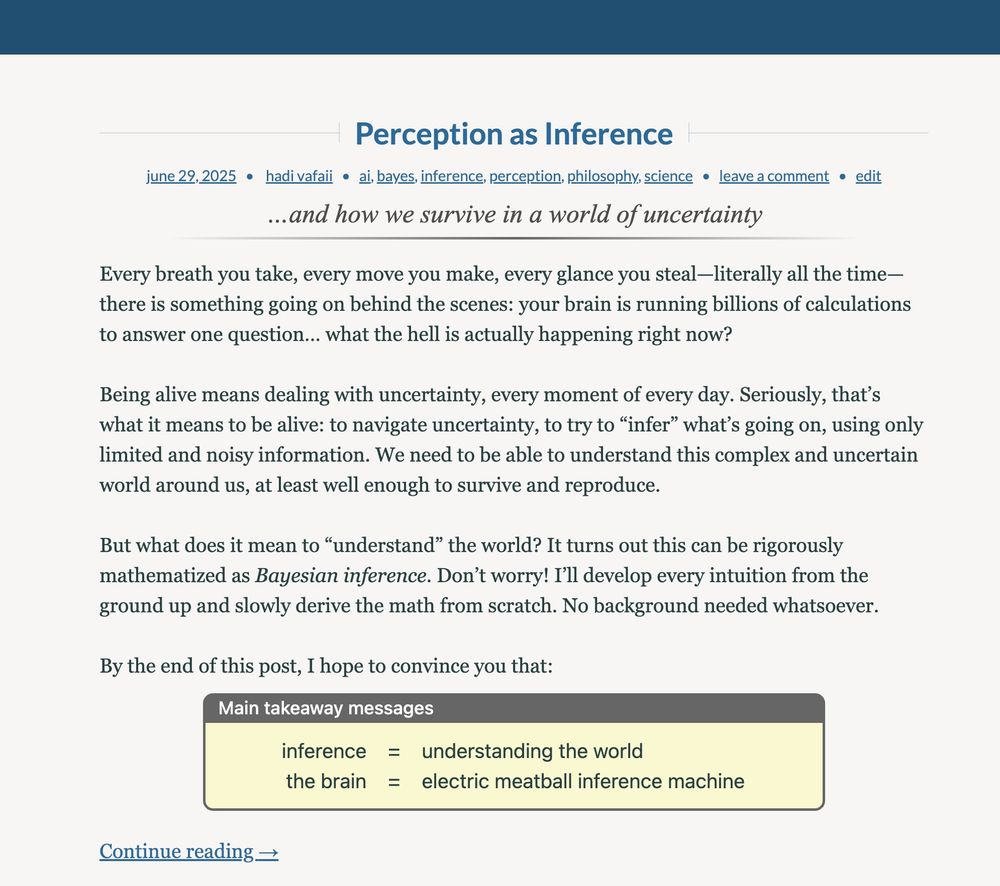

✅ Sparse Coding

✅ Predictive Coding

✅ Free Energy Principle

& more!

In my new blog post, I build the intuition behind this idea from the ground up 👉[1/6]🧵

🧠🤖🧠📈

In my first blog post, I show how this fundamental principle can be mathematized:

✅ Brains adapt, and adaptation is about KL divergence minimization.

Let's unpack the main insights 🧵[1/n]

Link: mysterioustune.com/2025/01/13/w...

🧠🤖🧠📈 #AI
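
A toy illustration of "adaptation is KL divergence minimization": a minimal sketch, assuming a discrete environment distribution `p`, an internal model `q` parameterized by softmax logits, and plain gradient descent on KL(p || q) as the "adaptation" rule. The direction of the KL, the parameterization, and the learning rate are illustrative assumptions, not necessarily the formulation in the blog post.

```python
import numpy as np

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions, in nats."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0  # terms with p = 0 contribute nothing
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()

def adapt(p, logits, lr=0.5, steps=200):
    """Hypothetical adaptation rule: gradient descent on KL(p || softmax(logits)).
    The gradient of KL(p || softmax(z)) with respect to z is softmax(z) - p."""
    history = []
    for _ in range(steps):
        q = softmax(logits)
        history.append(kl_divergence(p, q))
        logits = logits - lr * (q - p)
    return softmax(logits), history

# Environment statistics the "brain" should adapt to; start from a flat model.
p = np.array([0.7, 0.2, 0.1])
q_final, history = adapt(p, np.zeros(3))
print(history[0], history[-1], q_final)
```

The divergence shrinks step by step as the internal model's predictions come to match the environment's statistics, which is the sense in which adaptation here "is" KL minimization.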
