Joachim Baumann

@joachimbaumann.bsky.social

130 followers

190 following

15 posts

Postdoc @milanlp.bsky.social / Incoming Postdoc @stanfordnlp.bsky.social / Computational social science, LLMs, algorithmic fairness

Posts

Media

Videos

Starter Packs

Pinned

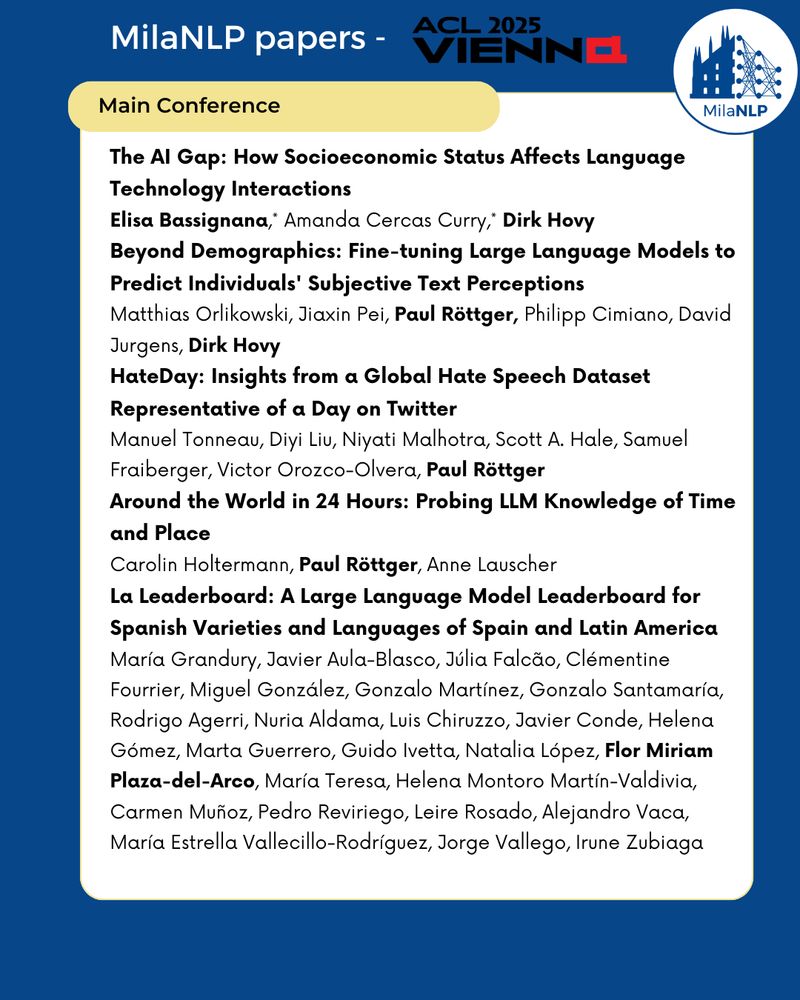

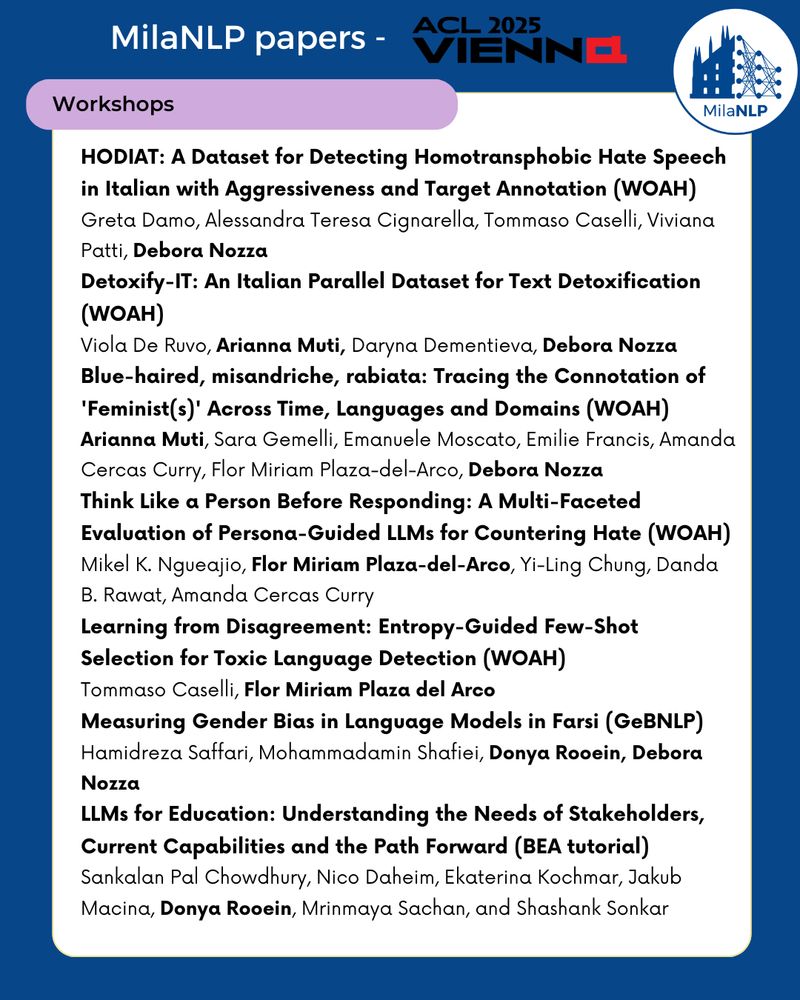

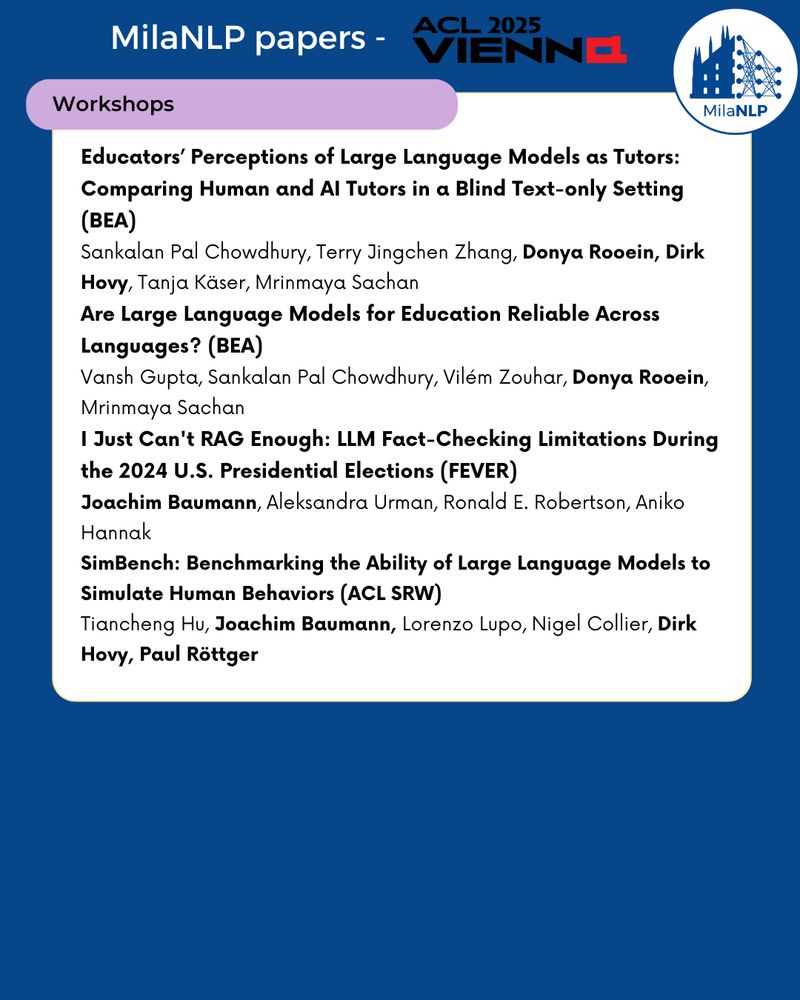

Reposted by Joachim Baumann

Tiancheng Hu

@tiancheng.bsky.social

· Jul 26
