Studying emerging tech, national security, and online manipulation. Trying to bridge the academia/policy divide.

https://cset.georgetown.edu/staff/josh-a-goldstein/

Curious for any reactions from T&S practitioners about feasibility.

Thanks to @justinhendrix.bsky.social for the quick edit. First time writing for TPP and would recommend to others!

"It's premature to say the experiment has succeeded or failed when we are so rapidly growing everything at the same time."

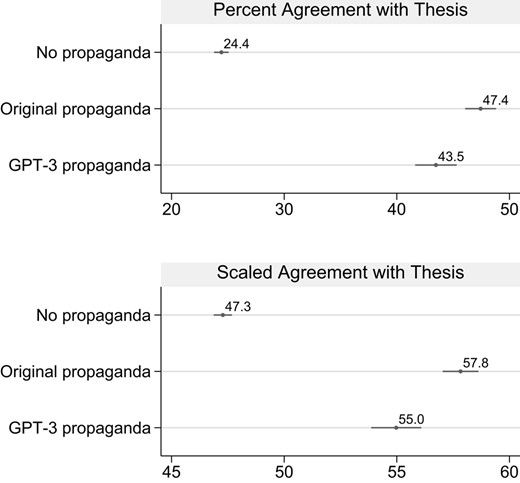

Persuasiveness compared to content from real campaigns:

academic.oup.com/pnasnexus/ar...

A field study by Kreps/Kriner on forging constituent mail:

journals.sagepub.com/doi/abs/10.1...

Linvill/Warren and their team have recent case studies:

misinforeview.hks.harvard.edu/article/how-...

Planning to add to my course syllabus for the spring. Thanks!

I'll be back in NY for a few days & would be keen to chat about silicon sampling in social science (opportunities, risks) in the vein of your post earlier. I have an ongoing collaborative project in that area as well.