- RLT significantly reduces fine-tuning time (up to 40%) without compromising accuracy

- RLT shows greater token reduction on datasets with higher frames per second (FPS)

...
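
The sketch below shows why higher-FPS footage leaves more redundant tokens. It assumes RLT refers to Run-Length Tokenization for video transformers. It also assumes the method drops any patch that is (nearly) unchanged from the same patch in the previous frame, and records how many frames each kept patch persists. Several pieces are hypothetical stand-ins, not the authors' implementation: the names `Token`, `run_length_tokenize` and `synthetic_video`, the `threshold`, and the 1-D toy "video" (one bright patch moving across a static background). The numbers say nothing about the reported fine-tuning speedup.

```python
from dataclasses import dataclass


@dataclass
class Token:
    frame: int       # frame index where this patch value first appears
    patch: int       # spatial patch index
    value: float     # scalar stand-in for a real pixel patch (hypothetical simplification)
    run_length: int  # consecutive frames the patch stays (nearly) unchanged


def run_length_tokenize(frames, threshold=1e-6):
    """Hypothetical run-length-style tokenizer: emit a token only when a patch
    differs from the same patch in the previous frame; otherwise extend the
    run length of that patch's most recent token."""
    tokens = []
    last_token = {}  # patch index -> most recent Token emitted for that patch
    prev = None
    for t, frame in enumerate(frames):
        for i, value in enumerate(frame):
            if prev is not None and abs(value - prev[i]) <= threshold:
                last_token[i].run_length += 1  # repeat: no new token
            else:
                tok = Token(frame=t, patch=i, value=value, run_length=1)
                tokens.append(tok)
                last_token[i] = tok
        prev = frame
    return tokens


def synthetic_video(fps, seconds=2, num_patches=16, speed=4):
    """Toy 1-D 'video': one bright patch moving `speed` patches per second
    over a static background, sampled at `fps` frames per second."""
    frames = []
    for k in range(seconds * fps):
        pos = (speed * k // fps) % num_patches  # integer math avoids float rounding
        frames.append([1.0 if i == pos else 0.0 for i in range(num_patches)])
    return frames


# Same 2-second clip sampled at a low and a high frame rate.
for fps in (4, 30):
    frames = synthetic_video(fps)
    total = len(frames) * len(frames[0])
    kept = len(run_length_tokenize(frames))
    print(f"fps={fps:>2}: kept {kept}/{total} tokens ({1 - kept / total:.1%} removed)")
# fps= 4: kept 30/128 tokens (76.6% removed)
# fps=30: kept 30/960 tokens (96.9% removed)
```

Sampling the same clip at a higher frame rate adds frames in which the moving patch has not yet crossed into a new position. Almost all of the extra patches are therefore repeats. They get folded into run lengths instead of becoming new tokens, so the kept count stays flat while the total grows.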

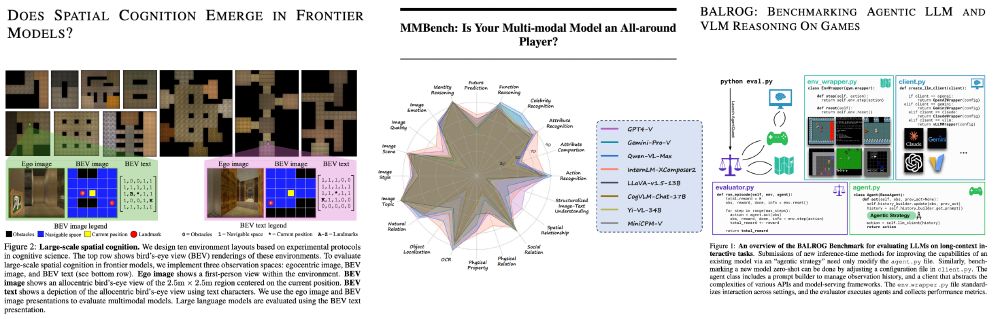

- arxiv.org/abs/2410.06468 (does spatial cognition ...)

- arxiv.org/abs/2307.06281 (MMBench)

- arxiv.org/abs/2411.13543 (BALROG), with bonus points for the LOTR reference

It shows that good old-fashioned appearance and motion cues are enough for a SOTA tracker, if done right.