Karen Ullrich (s/h) ✈️ COLM

@karen-ullrich.bsky.social

4.2K followers · 120 following · 26 posts

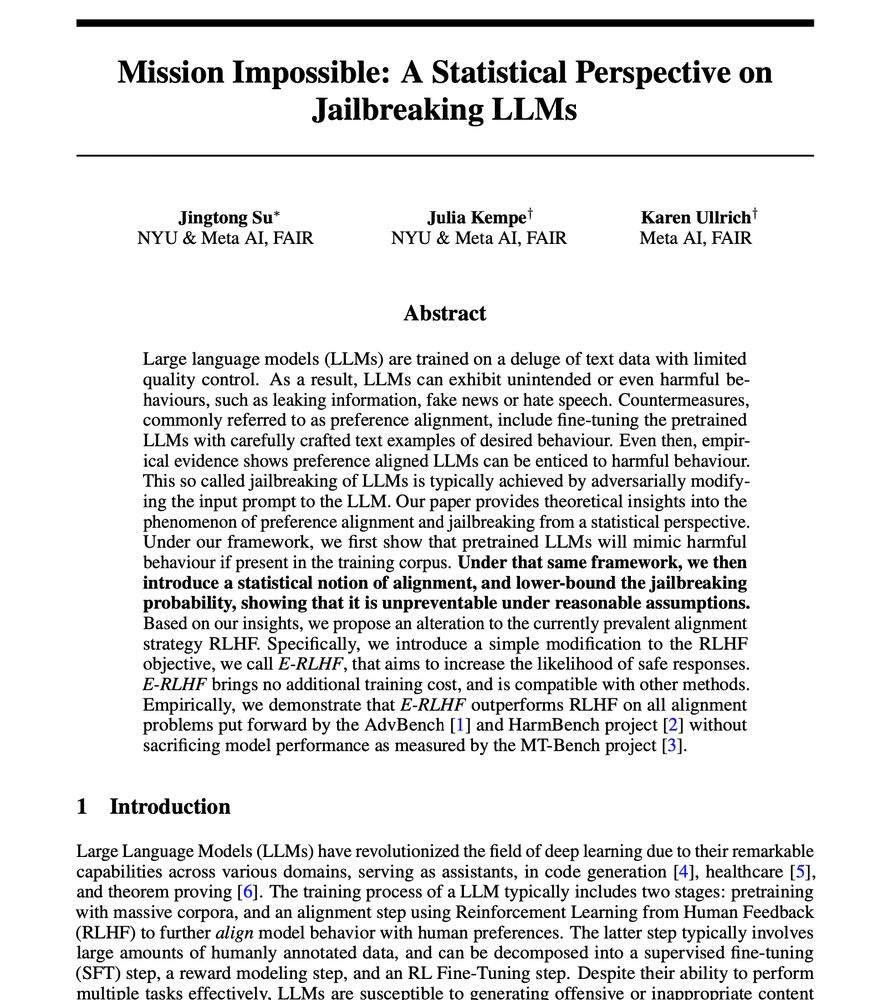

Research scientist at FAIR NY ❤️ LLMs + Information Theory. Previously, PhD at UoAmsterdam, intern at DeepMind + MSRC.