Keyon Vafa

@keyonv.bsky.social

170 followers

100 following

28 posts

Postdoctoral fellow at Harvard Data Science Initiative | Former computer science PhD at Columbia University | ML + NLP + social sciences

https://keyonvafa.com

Reposted by Keyon Vafa

Charlie Rahal

@crahal.com

· Aug 27

Reposted by Keyon Vafa

Charlie Rahal

@crahal.com

· Aug 14

Keyon Vafa

@keyonv.bsky.social

· Jul 14

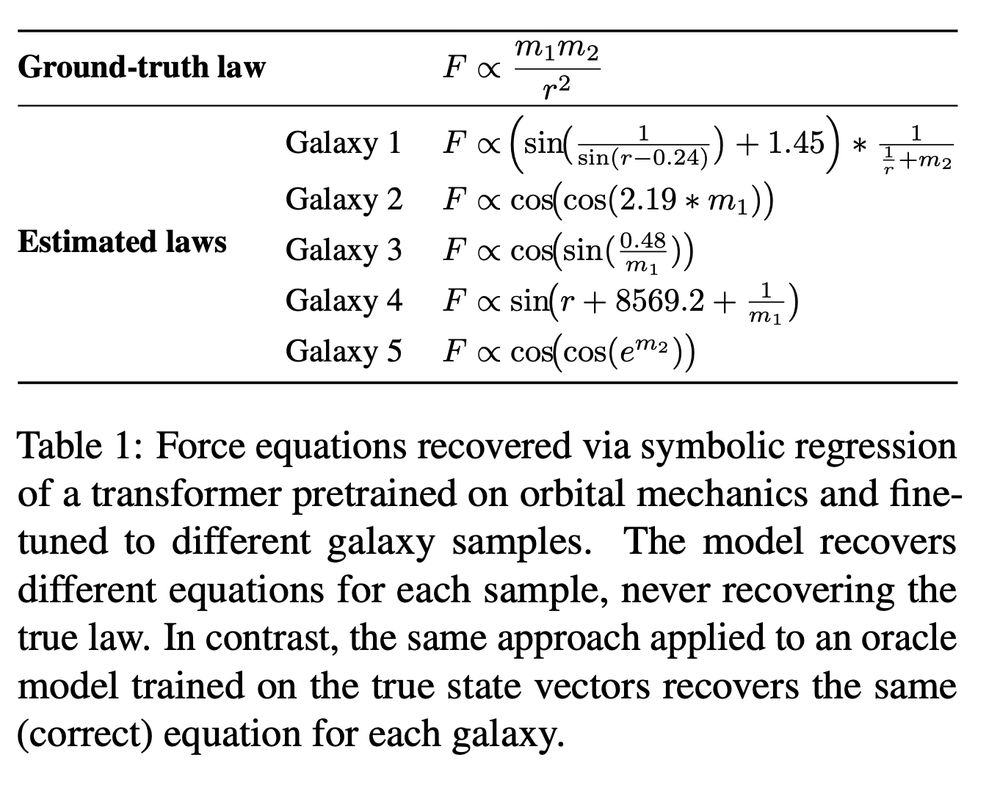

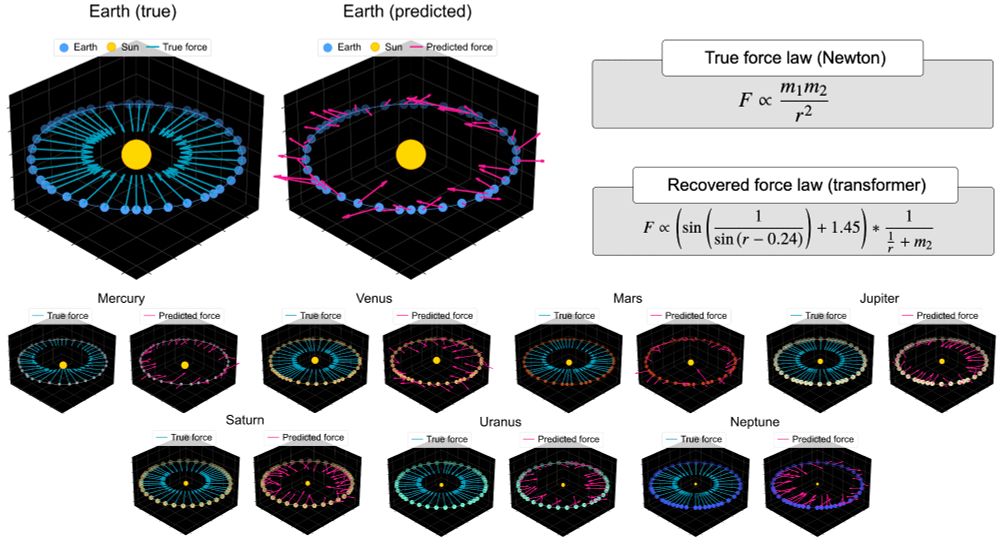

What Has a Foundation Model Found? Using Inductive Bias to Probe for World Models

Foundation models are premised on the idea that sequence prediction can uncover deeper domain understanding, much like how Kepler's predictions of planetary motion later led to the discovery of Newton...

arxiv.org

Keyon Vafa

@keyonv.bsky.social

· Jul 14

Evaluating the World Model Implicit in a Generative Model

Recent work suggests that large language models may implicitly learn world models. How should we assess this possibility? We formalize this question for the case where the underlying reality is govern...

arxiv.org

Reposted by Keyon Vafa

Nikhil Garg

@nkgarg.bsky.social

· Mar 10